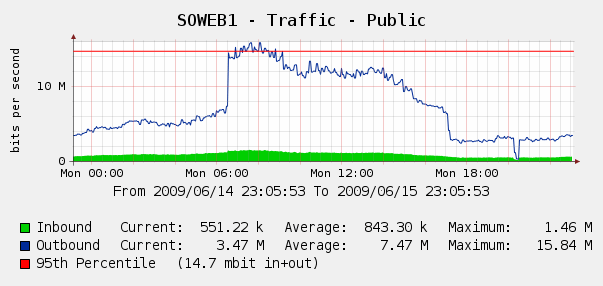

We noticed something unusual on our Cacti graphs today. Can you spot it?

Yes! The light gray of the graph background does seem a few shades lighter than normal! I see it too!

No, no, of course I'm talking about that massive traffic spike from 06:00 to 15:00 PST (server time). In the words of The Office's David Brent:

> I think there's been a rape up there!

Bandwidth isn't usually a problem for us, as we are heavily text-oriented and go to great lengths to make sure all our text content is served up compressed. This is almost 3x our normal peak traffic level. And for what?

Geoff ran a few queries in Log Parser and found that this is yet another instance of a perfect web spider storm. Here are the top 3 bandwidth consumers in the logs for that day:

| IP | User-Agent | Requests | Bytes Served |
|---|---|---:|---:|
| 72.30.78.240 | Yahoo! Slurp/3.0 | 56,331 | 1,124,861,780 |
| 66.249.68.109 | Googlebot/2.1 | 56,579 | 773,418,834 |
| 66.249.68.109 | Mediapartners-Google | 30,519 | 671,904,609 |
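Geoff's actual Log Parser queries aren't reproduced here, but the aggregation behind a table like this one is simple: group requests by client IP and user agent, count them, and sum the bytes served. Here's a minimal Python sketch of that idea. It assumes a hypothetical tab-separated log with only three fields (IP, user agent, bytes sent) and made-up sample rows; real IIS logs in W3C format carry many more fields, so the parsing would need adjusting.

```python
from collections import defaultdict

# Hypothetical, simplified log lines: "client-ip<TAB>user-agent<TAB>bytes-sent".
# The values are illustrative only, not taken from our real logs.
SAMPLE_LOG = """\
72.30.78.240\tYahoo! Slurp/3.0\t20000
66.249.68.109\tGooglebot/2.1\t13000
72.30.78.240\tYahoo! Slurp/3.0\t21000
66.249.68.109\tMediapartners-Google\t22000
10.0.0.5\tMozilla/5.0\t4000
"""


def top_bandwidth_consumers(lines, n=3):
    """Return the n (ip, user_agent) pairs that were served the most bytes,
    as (ip, user_agent, requests, bytes) tuples sorted by bytes, descending."""
    requests = defaultdict(int)
    total_bytes = defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        ip, agent, sent = line.split("\t")
        key = (ip, agent)
        requests[key] += 1
        total_bytes[key] += int(sent)
    ranked = sorted(total_bytes, key=total_bytes.get, reverse=True)
    return [(ip, agent, requests[(ip, agent)], total_bytes[(ip, agent)])
            for ip, agent in ranked[:n]]


for row in top_bandwidth_consumers(SAMPLE_LOG.splitlines()):
    print(row)
```

Grouping on the IP and user agent together matters: as the table shows, a single address can show up under more than one crawler identity.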

As I mentioned, this has happened to us before -- and we've considered dynamically blocking excessive HTTP bandwidth use. But first we politely asked the Yahoo and Google web spider bots to play a bit nicer:

- We updated our robots.txt to include the Crawl-delay directive, like so:

  ```
  User-agent: Slurp
  Crawl-delay: 1

  User-agent: msnbot
  Crawl-delay: 1
  ```

- We went to Google Webmaster Tools and told Google to limit Googlebot to no more than 2 requests per second.
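Crawl-delay isn't part of the original robots.txt standard, so how it's interpreted is up to each crawler. Python's standard `urllib.robotparser` happens to understand it, which makes it a handy way to check how a polite crawler would read the file above. This is just a sanity check of the file, not a claim about how Slurp or msnbot parse it internally.

```python
from urllib.robotparser import RobotFileParser

# The same robots.txt content as above.
ROBOTS_TXT = """\
User-agent: Slurp
Crawl-delay: 1

User-agent: msnbot
Crawl-delay: 1
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Slurp", "msnbot", "Googlebot"):
    # crawl_delay() returns None when no group in the file sets a delay for this agent.
    print(agent, parser.crawl_delay(agent))
```

Note that the file says nothing about Googlebot, so it gets no delay at all; that's why Google's rate had to be set separately in Webmaster Tools.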

That was a week ago. Obviously, it isn't working.

Now we'll have to do this the hard way. Fortunately, Geoff (aka Valued Stack Overflow Associate #00003) has a "spare" Cisco PIX 515E lying around that we plan to put in front of the web servers, so we can dynamically throttle the offenders. But we can't do that for a week or so.
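The PIX configuration itself isn't shown here, but the idea behind "dynamically throttle the offenders" can be sketched in software as a per-client token bucket. This is a minimal Python illustration under stated assumptions: the `ByteBucket` and `Throttle` names, the rate, and the capacity are all hypothetical, and the budget is counted in bytes rather than requests.

```python
import time


class ByteBucket:
    """Token bucket measured in bytes: refills at `rate` bytes per second,
    holding at most `capacity` bytes. Hypothetical sketch, not PIX config."""

    def __init__(self, rate, capacity, now=None):
        self.rate = float(rate)
        self.capacity = float(capacity)
        self.tokens = float(capacity)  # start with a full bucket
        self.last = time.monotonic() if now is None else now

    def allow(self, nbytes, now=None):
        """Spend nbytes if the budget allows; return False to throttle."""
        now = time.monotonic() if now is None else now
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


class Throttle:
    """Keeps one ByteBucket per client IP address."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}

    def allow(self, ip, nbytes, now=None):
        bucket = self.buckets.get(ip)
        if bucket is None:
            bucket = self.buckets[ip] = ByteBucket(self.rate, self.capacity, now)
        return bucket.allow(nbytes, now)


# Example: 100 KB/s sustained, 200 KB burst (hypothetical numbers).
throttle = Throttle(rate=100_000, capacity=200_000)
print(throttle.allow("72.30.78.240", 150_000, now=0.0))
print(throttle.allow("72.30.78.240", 100_000, now=0.0))
print(throttle.allow("72.30.78.240", 100_000, now=1.0))
```

Counting bytes instead of requests fits the problem we actually have. In the table, Slurp and Googlebot made nearly the same number of requests, but Slurp pulled far more bandwidth.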

In the meantime, since Yahoo delivers only about 0.3% of our traffic but insists (via Slurp!) on rudely consuming a huge chunk of our prime-time bandwidth, they're getting IP banned and blocked. I'm a bit more sympathetic to Google, since they deliver almost 90% of our traffic, but it sure would be nice if they'd at least let me schedule the massive web spider storms for off-peak hours...
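An IP ban boils down to checking each client address against a list of blocked networks. Here's a minimal Python sketch using the standard `ipaddress` module. The blocklist is hypothetical: it contains only the single Slurp address from the log table, and a real ban would likely need the crawler's full address ranges, which aren't listed here.

```python
import ipaddress

# Hypothetical blocklist: just the one Slurp address seen in our logs.
# Wider ranges (e.g. a /24) could be added as additional ip_network entries.
BLOCKED_NETWORKS = [ipaddress.ip_network("72.30.78.240/32")]


def is_blocked(client_ip):
    """Return True if client_ip falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    # Membership tests against a network of a different IP version simply return False.
    return any(addr in net for net in BLOCKED_NETWORKS)


for ip in ("72.30.78.240", "66.249.68.109"):
    print(ip, is_blocked(ip))
```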