Last month, Joe wrote about the Welcome Wagon work that we are doing to make Stack Overflow more welcoming and inclusive. Our current work spans projects from asking questions to framing community standards; one project we have been working on is understanding how comments are used and misused on Stack Overflow. We are a data engineer (Jason) and a data scientist (Julia). As folks who code for a living and use Stack Overflow, as well as work here, we have certainly experienced and witnessed unwelcoming behavior in Stack Overflow comments firsthand, whether through condescension, snark, or sarcasm. Our goal with this specific project is to understand these issues so that we can start to address them. This blog post outlines our initial findings, what we could learn with more data, and our next steps.

Classifying comments

I (Jason) wrote The Stack Overflow Comment Evaluator 5000™, a simple application that presents you with a comment thread from a post on Stack Overflow and asks you to rate each comment in the thread as Fine, Unwelcoming, or Abusive. Comments on Stack Overflow can already be flagged as rude or abusive, but this flag is typically used only for the most egregious and toxic comments, which are thankfully rare. Here we want to characterize comments that are unwelcoming in a way that isn't flagrant hate or abuse but would still make you think twice about participating in our community. We expected this category to include condescension, snark, sarcasm, and the like.

We estimated how many comments we could rate, given the number of folks we have internally and the time we would be asking of them. Then we loaded up the right number of comment threads into the application and invited all of our community managers, designers, developers, executives, site reliability engineers, and product managers to spend an hour rating comments. We had 57 participants who made 13,742 ratings on 3,992 comments.

Prevalence of comment categories

If we take a majority vote on the rating of each comment (with ties going to the worse rating), comments on Stack Overflow break down like so:

| Rating | % of comments |
|---|---|
| Fine | 92.3% |
| Unwelcoming | 7.4% |
| Abusive | 0.3% |

According to those of us deeply involved here and familiar with Stack Overflow, about 7% of comments on Stack Overflow are unwelcoming. What did some unwelcoming comments look like? The examples below combine elements of real comments to show typical cases.

- "This is becoming a waste of my time and you won't listen to my advice. What are the supposed benefits of making it so much more complex?"

- "Step 1. Do not clutter the namespace. Then get back to us."

- "The code you posted cannot yield this result. Please post the real code if you hope to get any help."

- "This error is self explanatory. You need to check..."

- "I have already told how you can... If you can't make it work, you are doing something wrong."

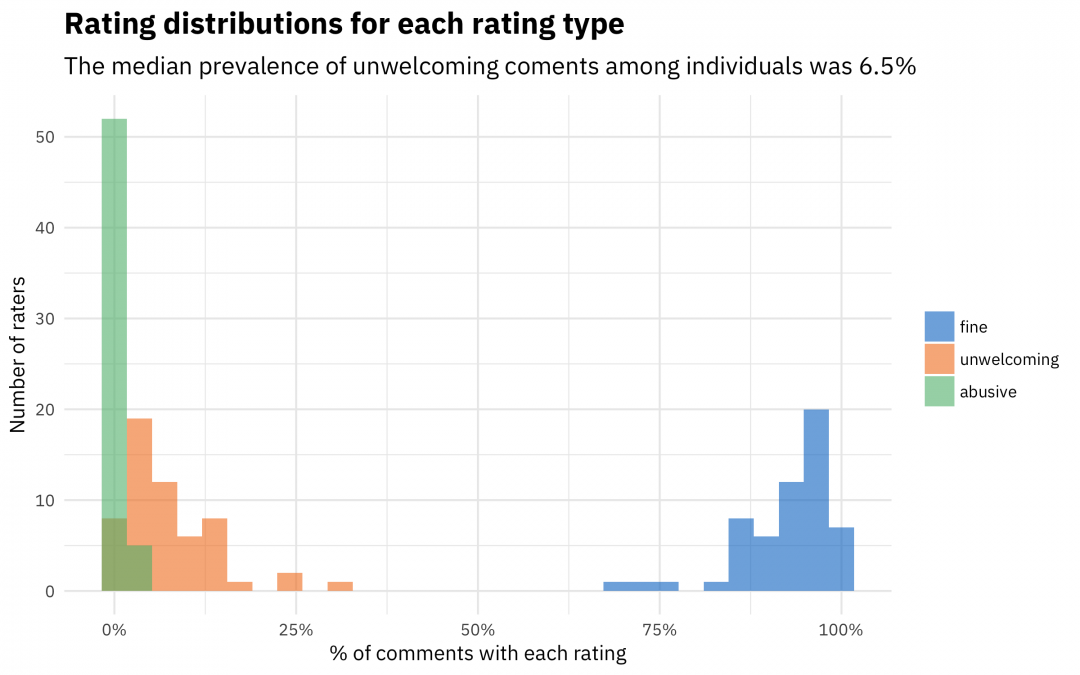

This stuff isn't profanity, hate speech, or outright abuse, but it's certainly unwelcoming. Majority voting is one way to look at the data, but the experience of not being welcomed isn't a majority-vote kind of thing; it's deeply personal. What if we looked at the distribution of ratings by individual?

Among the 57 individuals who participated, the median share of comments rated fine was 93.2%, and the median share rated unwelcoming was 6.5%. The histograms in this graph have a broad shape, which shows considerable variety in people's experience of the site with respect to the comments they saw. Take a look at just the distribution for the unwelcoming ratings: four of us didn't find anything unwelcoming, while three of us rated more than 1 in 5 comments as unwelcoming. This speaks to the variability of experience; for example, what an experienced, professional developer from a privileged background finds unwelcoming may differ from what a more junior or less privileged developer finds unwelcoming.
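Both summaries in this section, the majority vote (with ties going to the worse rating) and each rater's individual share of a label, take only a few lines to compute. The sketch below is a minimal Python illustration, not our actual analysis code; it assumes each rating is stored as a hypothetical `(rater, comment_id, label)` tuple, and the toy data is made up.

```python
from collections import Counter, defaultdict
from statistics import median

# Labels ordered from least to most severe; ties in the vote go to the more severe one.
SEVERITY = {"Fine": 0, "Unwelcoming": 1, "Abusive": 2}


def majority_label(labels):
    """Plurality vote over one comment's ratings, breaking ties toward the worse rating."""
    counts = Counter(labels)
    top = max(counts.values())
    tied = [label for label, n in counts.items() if n == top]
    return max(tied, key=SEVERITY.__getitem__)


def prevalence(ratings):
    """Fraction of comments whose majority label is each category."""
    by_comment = defaultdict(list)
    for _rater, comment_id, label in ratings:
        by_comment[comment_id].append(label)
    winners = Counter(majority_label(labels) for labels in by_comment.values())
    return {label: winners[label] / len(by_comment) for label in SEVERITY}


def median_rater_share(ratings, label):
    """Median, across raters, of the fraction of each rater's ratings equal to `label`."""
    per_rater = defaultdict(list)
    for rater, _comment_id, rated in ratings:
        per_rater[rater].append(rated == label)
    return median(sum(flags) / len(flags) for flags in per_rater.values())


# Made-up toy data: (rater, comment_id, label); not every rater sees every comment.
ratings = [
    ("ana", 1, "Fine"), ("ben", 1, "Fine"), ("cy", 1, "Unwelcoming"),
    ("ana", 2, "Fine"), ("ben", 2, "Unwelcoming"),
    ("ana", 3, "Fine"), ("cy", 3, "Fine"),
    ("ana", 4, "Unwelcoming"), ("ben", 4, "Abusive"), ("cy", 4, "Unwelcoming"),
]
print(prevalence(ratings))
print(median_rater_share(ratings, "Unwelcoming"))
```

On this toy data, comment 2 is a one-to-one tie between Fine and Unwelcoming, so the tie-breaking rule counts it as Unwelcoming.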

What can we learn from our initial rating task?

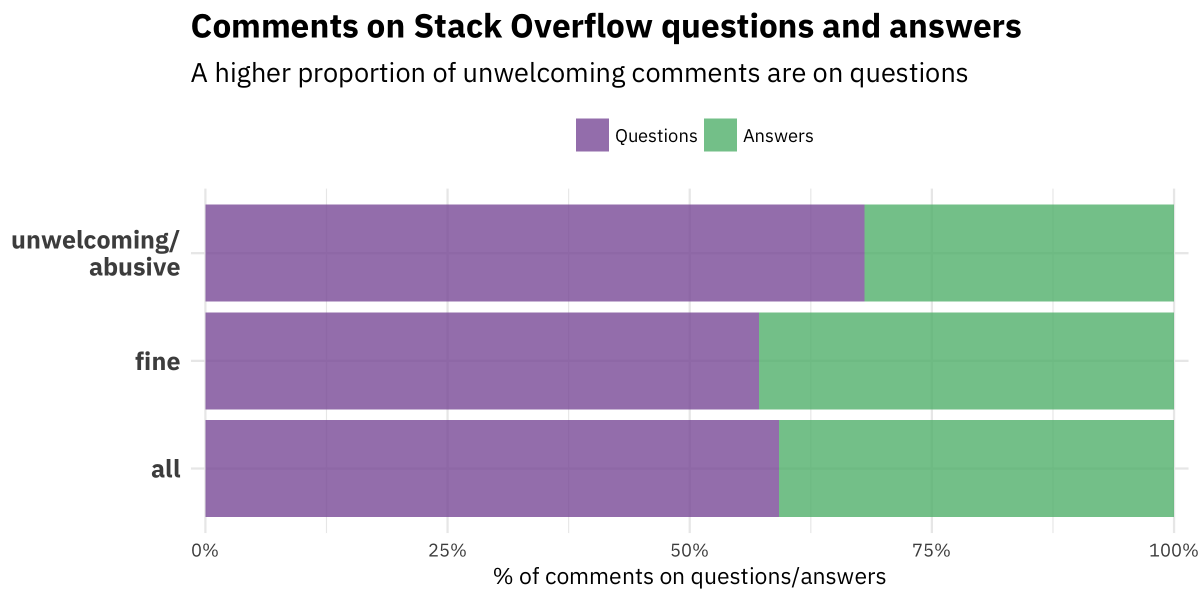

In this first attempt at rating comments, we were able to measure the prevalence of unwelcoming comments, as perceived by experienced Stack Overflow community members and employees, as well as how much that perception varies. This first group of raters includes people from groups underrepresented in tech, such as women, people of color, gay folks, and trans folks. We do not see evidence that belonging to one or more of these groups led an individual to label comments as unwelcoming at a higher rate, at least not among the small group of ~60 participants in our initial task. It is possible that we would see a statistically significant difference with a larger sample of raters.

There are enough comments in this first rating task for us to assess whether unwelcoming comments are more prevalent on questions or on answers. Let's compare the rated comments with all the comments posted during the month from which the rated comments were drawn.

For comments the raters classified as fine, the proportions on questions and on answers are consistent with those of the overall comment population. We see a shift for the comments classified as unwelcoming or abusive, however: those comments are more prevalent on questions than on answers, with a relative percentage shift of almost 10%. This is important to keep in mind as we think through what is contributing to unwelcoming comments in our community and what to do next. Indeed, the dynamics at play here are part of why we are revamping our workflow for new askers.

We also wanted to measure how much this sample of raters agreed with each other, using a measure of inter-rater reliability. You might guess from the histogram of rating distributions that inter-rater reliability is not going to be high; we labeled comments as unwelcoming at different rates. Given the kind of rating data we have (not every person saw every comment), we can use Krippendorff's alpha to measure reliability; a value of 1 means perfect agreement, and a value of 0 means agreement no better than chance. For comments that were rated by at least three people, Krippendorff's alpha for this initial dataset is 0.39. This would be too low for qualitative research in an academic study like those done in the social sciences, where a common guideline (Krippendorff's own) is to rely on data with an alpha of at least 0.8 and to draw only tentative conclusions from data with an alpha of at least 0.667. If you have been around Stack Overflow a long time, you might be interested to know that this is much better than the reliability for the comment classification project done using Amazon Mechanical Turk about 5 years ago.

What does a reliability measure like this mean? It reflects the real diversity in how we all experience the Stack Overflow community, based on our experiences and personalities. People who work at Stack Overflow agree more about what is unwelcoming than the Mechanical Turk workers did, but even among the 60 or so of us, agreement is not high enough for our labels to be reliable for academic work.
To be clear, this diversity of perspective does not mean it is hopeless to address how comments are used across our community as a whole. We can take what we are learning here and move forward.
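Krippendorff's alpha copes with rating data where not every rater saw every comment by pairing up only the ratings that fall on the same comment. Here is a minimal Python sketch of the standard coincidence-matrix formulation for nominal labels. Treating Fine, Unwelcoming, and Abusive as unordered categories is our assumption for illustration (an ordinal metric would weigh disagreements differently), and the function name, the `min_ratings` parameter, and the toy data are hypothetical.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units, min_ratings=2):
    """Krippendorff's alpha for nominal labels.

    `units` holds one list of labels per comment; raters who did not see a
    comment simply contribute nothing to that list.
    """
    # A comment needs at least two ratings to form a pair (optionally more).
    pairable = [labels for labels in units if len(labels) >= max(2, min_ratings)]

    observed = 0.0      # sum of off-diagonal coincidences o_ck over c != k
    totals = Counter()  # n_c: number of pairable ratings with label c
    for labels in pairable:
        m = len(labels)
        counts = Counter(labels)
        totals.update(counts)
        # Each ordered pair of ratings with different labels adds 1 / (m - 1).
        for c, k in permutations(counts, 2):
            observed += counts[c] * counts[k] / (m - 1)

    n = sum(totals.values())
    expected = sum(totals[c] * totals[k] for c, k in permutations(totals, 2))
    if expected == 0:
        raise ValueError("alpha is undefined when only one label is used")
    # alpha = 1 - D_o / D_e, with D_o = observed / n and D_e = expected / (n * (n - 1))
    return 1.0 - (n - 1) * observed / expected


# Made-up toy data: one list of labels per comment.
units = [
    ["Fine", "Fine", "Unwelcoming"],
    ["Fine", "Fine", "Fine"],
    ["Unwelcoming", "Unwelcoming", "Abusive"],
    ["Fine", "Unwelcoming"],  # only two ratings, so dropped when min_ratings=3
]
print(krippendorff_alpha_nominal(units, min_ratings=3))
```

Setting `min_ratings=3` mirrors the "rated by at least three people" filter described above; the formula itself only needs two ratings on a comment to form a pair.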

Impact of unwelcoming comments

So, about 7% of comments on Stack Overflow are unwelcoming, depending on who you ask. What does that mean? First of all, this is not good enough for us. Stack Overflow is a place for developers to help each other; our goal is to be a professional space that makes our industry, our profession, and yes, the internet a better place. Everyone who codes should feel welcome to participate here.

Second, a prevalence between 5% and 10% can have a big impact on a community. Let's sketch out a back-of-the-napkin estimate. Suppose a typical developer visits Stack Overflow once or twice a week to solve a problem, the question they visit has an answer, and each post (question and answer) has two comments (keep in mind that comments are more visible to visitors than answers). That works out to roughly 4 to 9 visits and 17 to 35 comments a month, so we would conservatively estimate that a developer visiting Stack Overflow sees 1 to 3 condescending, unwelcoming comments every single month of their coding life.

Will one unwelcoming comment a month drive everyone away? Clearly not, as Stack Overflow still works for many. But it will convince some that it's not worth contributing here, and the next month's comment will convince a few more, and so on. And this only considers the readers of these comments; the people the comments are directed at will naturally feel the effects more dramatically. So, where does that bring us now?

- This is the first step for us in addressing how comments are used at Stack Overflow, and we are exploring options for moving forward. We believe strongly both that human moderators are key and that human-in-the-loop machine learning can offer us powerful tooling.

- It takes care to be understood well online, and people react differently to the same words. Remember that many more people than the post owner may read your comments, so write for posterity and make a conscious effort to be welcoming. When you see unwelcoming behavior, please flag it.

- We at Stack Overflow want to more clearly frame our expectations around our community standards. Watch for updates about the evolution of our "Be Nice" policy into a fully articulated code of conduct.

- We will be fielding this comment classification task more broadly soon, in order to learn more about how our community understands interaction via comments. Look for further work from us on this in the near future.
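The back-of-the-napkin estimate from earlier in this section is easy to reproduce. Below is a minimal Python sketch, assuming 52/12 weeks per month and the 7.4% majority-vote figure from the prevalence table; the function and parameter names are hypothetical, chosen for illustration.

```python
WEEKS_PER_MONTH = 52 / 12  # roughly 4.33


def unwelcoming_seen_per_month(visits_per_week, prevalence,
                               posts_per_visit=2, comments_per_post=2):
    """Expected unwelcoming comments a reader sees per month under the napkin assumptions.

    posts_per_visit=2 is one question plus one answer; each post has two comments.
    """
    comments_seen = visits_per_week * WEEKS_PER_MONTH * posts_per_visit * comments_per_post
    return comments_seen * prevalence


for visits in (1, 2):
    print(visits, "visit(s)/week:", round(unwelcoming_seen_per_month(visits, 0.074), 1))
```

Plugging in the 5% and 10% ends of the range quoted above instead gives roughly 0.9 to 3.5 unwelcoming comments a month.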