This past summer, we wrote our first blog post about comments on Stack Overflow, focusing on our initial work rating comments internally at Stack Overflow and what we learned. Since then, we've fielded this comment rating task more broadly in our community. This blog post shares some of what we are learning.

Engaging our community

I (Jason) wrote a web application that presents a user with a comment thread from a post on Stack Overflow and asks the user to rate each comment in the thread as fine, unwelcoming, or abusive. Our first blog post shared results from when we asked employees at Stack Overflow, including developers, product managers, and executives, to rate comments. In August, we rolled out our new Code of Conduct, along with new flags for comments that align with these categories, one flag for rude/abusive and one flag for unfriendly/unkind. This fall, we extended our comment classification task beyond our employees to our larger community. We invited individuals from three groups to rate comments.

- Moderators on Stack Overflow and other Stack Exchange sites

- Individuals who responded to our blog post in April, indicating they want to help make Stack Overflow more welcoming

- A sample of registered users from our general research list (you can opt in/out of our research list in your Stack Overflow email settings)

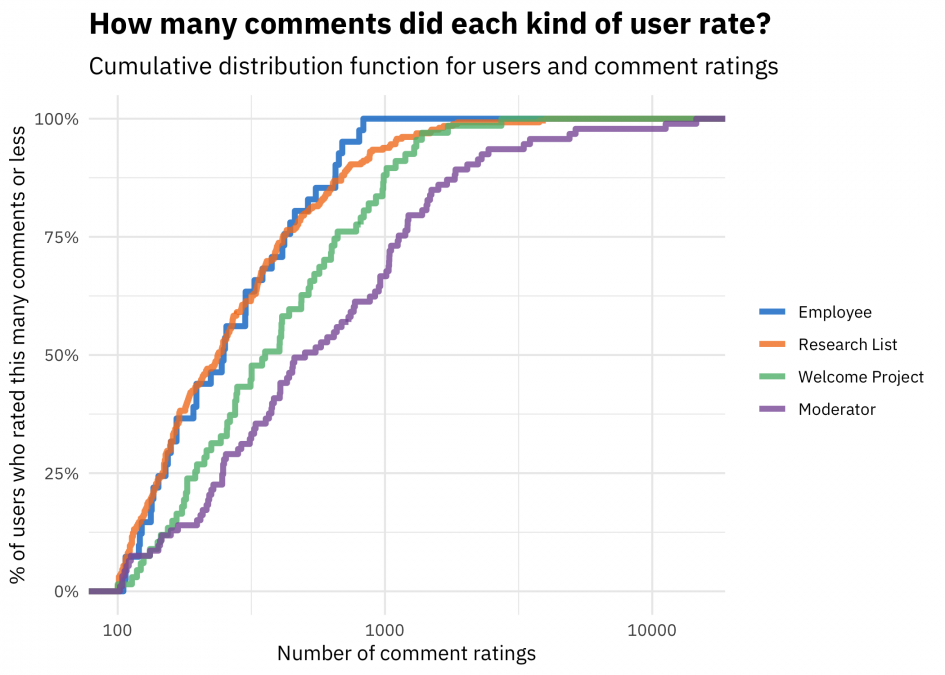

To log in to this web app and record data, each user needed a Stack Overflow account, so users had to create an account if they didn't already have one. We asked participants to invest at least one hour in rating comments, and not to work for more than 20 minutes at one sitting.

What kind of response did we get? Overall, 525 users spent at least 15 minutes rating comments. They made 253,807 ratings of 40,358 distinct comments. How many users and comment ratings did we have for each kind of user?

[table id=4 /]

The moderators demonstrated their enormous commitment to our community through this project, as they do consistently day in, day out; moderators who participated in this project rated an average of over 1,000 comments each. Folks who responded to our blog post expressing interest in welcome/inclusion on Stack Overflow also invested a great deal of time, rating over 500 comments each. We can see this visually by looking at the cumulative distribution functions for each kind of user; this kind of plot shows, for each number of comment ratings, the percentage of users who rated that many comments or fewer.

If you're not used to interpreting this kind of graph, take a look at x = 1000, the location on the x-axis that corresponds to 1,000 comments. The line for the moderators is the lowest there, meaning a smaller share of moderators stopped at 1,000 or fewer ratings; in other words, moderators submitted more comment ratings than the other groups.
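For readers who want to see how a point on such a curve is computed, here is a minimal sketch of an empirical cumulative distribution function. The rating counts and the function name `ecdf_at` are invented for illustration; they are not taken from our dataset or our analysis code.

```python
from bisect import bisect_right


def ecdf_at(counts, x):
    """Return the fraction of users whose number of ratings is <= x."""
    ordered = sorted(counts)
    # bisect_right gives how many sorted values are less than or equal to x.
    return bisect_right(ordered, x) / len(ordered)


# Hypothetical ratings-per-user counts, made up for this example only.
moderators = [400, 900, 1200, 1500, 2500]
research_list = [50, 120, 300, 800, 1100]

for name, counts in [("moderators", moderators), ("research list", research_list)]:
    print(name, ecdf_at(counts, 1000))
```

In this toy data the moderators' value at x = 1000 is lower than the research list's, which is what "the lowest line" means on the plot: fewer of them stopped at or below that many ratings.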

Group differences

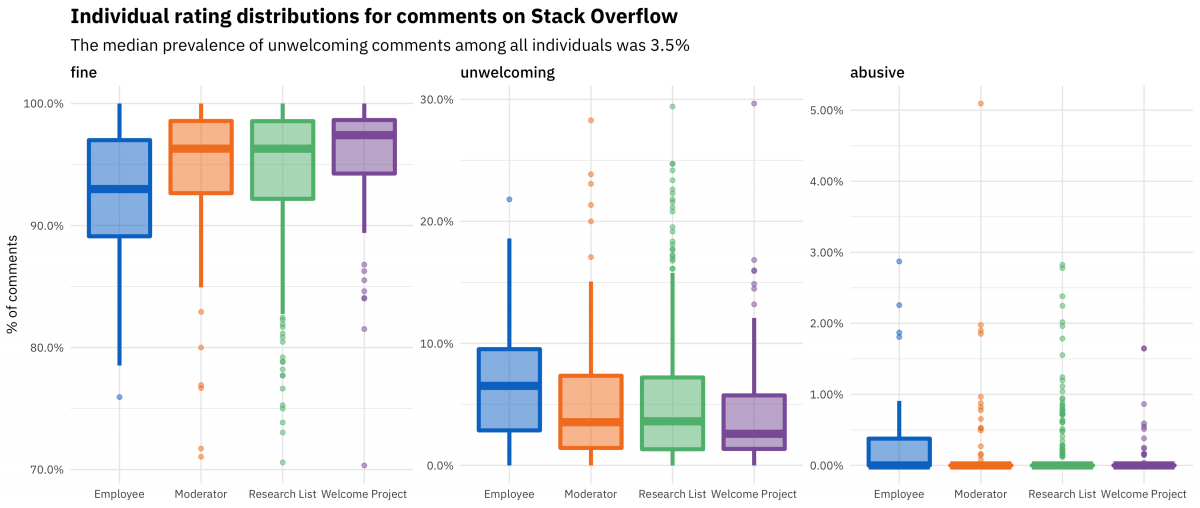

Different kinds of people experience Stack Overflow in different ways. If we aggregate all the ratings made by each type of user, how do the different groups perceive these comments on Stack Overflow?

[table id=5 /]

The highest rates of unwelcoming comments were identified by the internal employees at Stack Overflow, followed by Stack Exchange moderators. We trust and support our moderators, and in this specific project, moderators demonstrated their understanding of unfriendly and unwelcoming behavior in comments. Regular registered users from our research list perceived the next lowest rate of unfriendly comments, and users who responded to our blog post about making Stack Overflow more welcoming found the lowest rates of unfriendly comments of all.

How can we interpret this? We specifically invited users who may not consider themselves active participants in our community in order to gain outside perspective, but then these users saw the lowest rates of unwelcoming behavior. A possible explanation is that we are seeing a real effect of deep experience with our site; it appears the more invested an individual is here at Stack Overflow, the more sensitive they are to problematic behavior.

What do these unfriendly comments look like? The following examples combine elements of real comments to show typical cases.

- "Why do you want to do this? You have conflated at least three problems here."

- "It will be very hard to help you with such a trivial bug. It could come from any line in your code, and we have to guess."

- "How exactly is this going to solve my problem?!"

- "You don't understand how to use this site. Here nobody codes for you; read the docs and then show us."

- "What are you actually trying to achieve? Please learn how to use a debugger."

Our project showed that the more deeply an individual is connected to Stack Overflow (as an employee or a moderator), the more likely they are to see problems in comments like these. This effect holds when we compare groups who were shown the same comments, when we compare groups who rated the same number of comments, and under other analytical approaches.

Individual differences

What do the distributions of ratings for each individual look like?

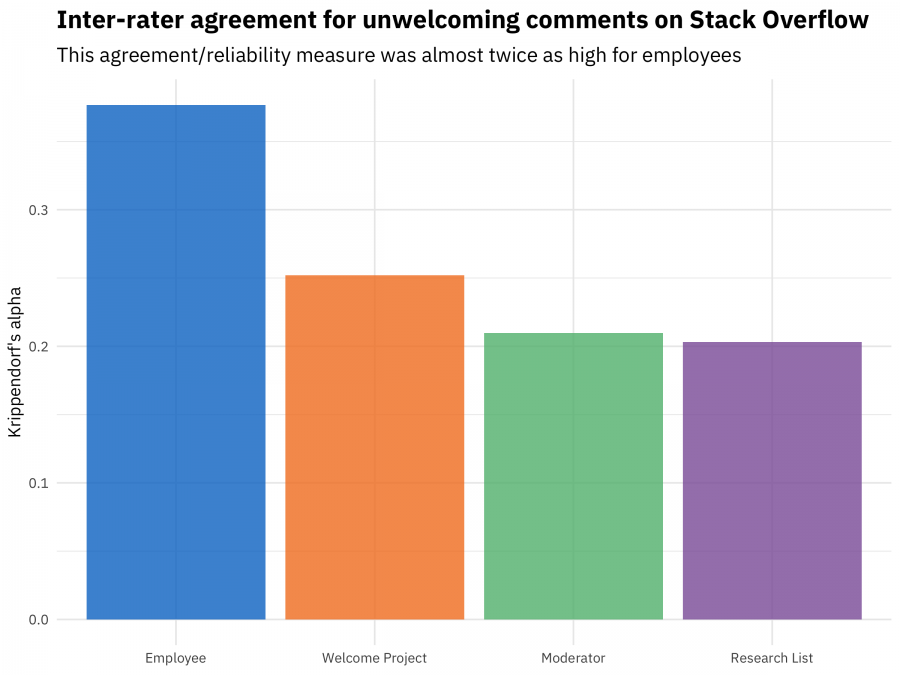

Individuals did not all rate the same set of comments, and they worked for different lengths of time, so we expect variability in the results for each individual. Overall, the median percentage of perceived unwelcoming comments per individual was 3.5%, quite a bit lower than the median percentage for employees of 6.5%.

To understand how much agreement there is between raters, we can again look at Krippendorff's alpha, a measure where zero means agreement no better than chance and one means perfect agreement (values can dip below zero when raters disagree systematically). This measure accounts for the number of raters, so we can compare agreement among employees to the groups with more raters. What is Krippendorff's alpha for comments that were rated by at least three people?
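As a rough illustration of how this measure works, here is a minimal sketch of Krippendorff's alpha for nominal labels, using the standard coincidence-matrix formulation. This is not the code used in our analysis; the function name `krippendorff_alpha_nominal` and the toy ratings are assumptions made for the example.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    `units` is a list of lists; each inner list holds the labels that one
    comment received from its raters. Raters may differ between comments.
    """
    # Coincidence matrix: every ordered pair of ratings within a comment
    # contributes 1 / (m - 1), where m is that comment's number of ratings.
    coincidences = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue  # a single rating carries no agreement information
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1 / (m - 1)

    # Marginal totals per label, and the overall number of pairable ratings.
    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    cross = sum(totals[a] * totals[b] for a in totals for b in totals if a != b)
    if n <= 1 or cross == 0:
        raise ValueError("alpha is undefined when only one label is ever used")

    # alpha = 1 - D_observed / D_expected, simplified for nominal labels.
    return 1 - observed * (n - 1) / cross


# Toy ratings invented for this example: four comments, three raters each.
toy_ratings = [
    ["fine", "fine", "fine"],
    ["fine", "fine", "unwelcoming"],
    ["unwelcoming", "unwelcoming", "unwelcoming"],
    ["fine", "fine", "fine"],
]
print(round(krippendorff_alpha_nominal(toy_ratings), 3))
```

On this toy data, a single disagreement among twelve ratings already pulls alpha down to roughly 0.66, which gives a sense of how demanding the measure is when one label dominates.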

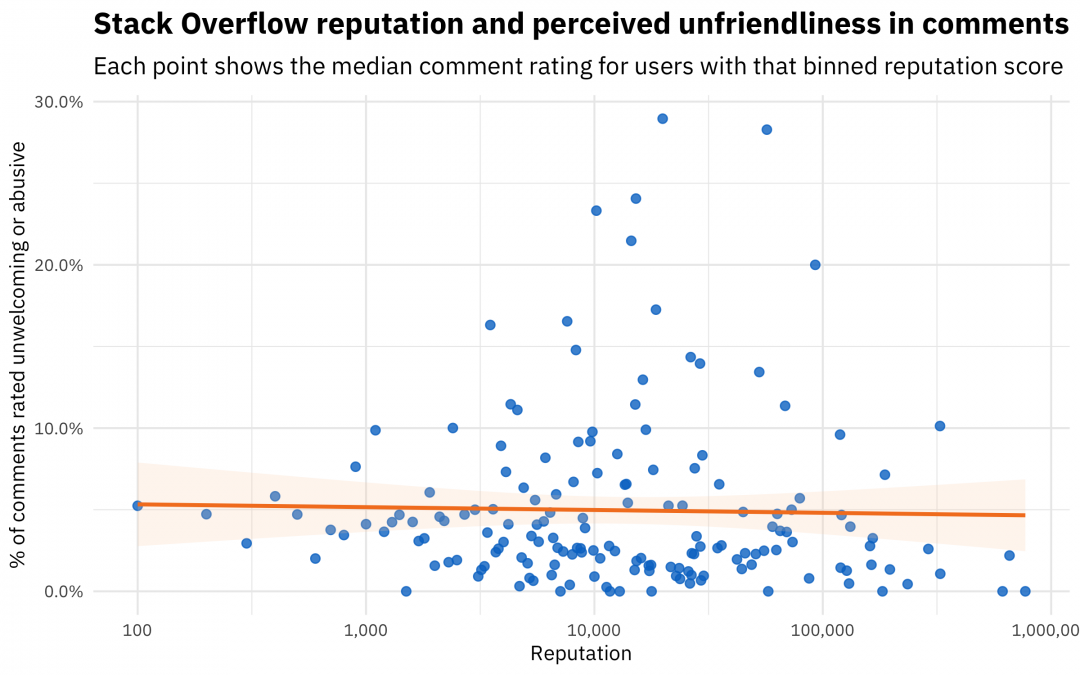

These values for alpha are low compared to what social scientists would use to draw reliable conclusions based on the ratings; social scientists look for values close to 0.8 or more. Notice that Stack Overflow employees rated more comments as unwelcoming than other groups, yet also agreed with each other at higher rates about what is unwelcoming and abusive. The rate of agreement among moderators and registered users was lowest (although still much higher than for people unfamiliar with Stack Overflow), and the rate of agreement for the users who volunteered to help make Stack Overflow more welcoming was a bit higher. Remember that these were the users who rated the lowest overall levels of unfriendliness; some spot-checking indicates these users identified only the clearest examples of problematic text.

Another factor that impacts interactions on Stack Overflow is reputation. Does the rate at which raters perceived unwelcoming behavior vary with their own reputation? This can help us understand if "power users" (distinguished from moderators) may be driving problems with site culture.

There is no clear evidence in this plot for a relationship, indicating that high-reputation users perceive unfriendly behavior at about the same rate as low-reputation users. We have seen effects similar to this before, for example, in our annual Developer Survey. When asked what the worst or most annoying thing about Stack Overflow is, developers of all experience levels and self-reported activity levels on Stack Overflow mentioned issues with harsh interactions and site culture.

Altogether, this begins to paint a complex and interesting picture of who understands unwelcoming behavior and in what ways. Moderators and high-reputation users are just as likely, or even more likely, to identify unwelcoming comments compared to new users. Stack Overflow employees identify more comments as problematic and agree with each other more about what is a problem compared to the other kinds of users in this project.

Next steps

So where do we go from here? For starters, we as employees learned that we don't always perceive problems in the same way as other members of our community. We will keep this in mind as we move forward with plans to make Stack Overflow a better place for developers to learn and share knowledge.

We plan to use this dataset to investigate how comments are used on questions and answers, toward users of different experience levels, in different communities, and more. Look for more blog posts on these issues in upcoming months. We will continue to use the results from this project in product changes on Stack Overflow, as well as directly using appropriate subsets of this data in machine learning models. Also, in 2019 we will release this dataset (comment IDs, comment ratings, and anonymized/randomized rater ID) upon request so that other people in our community and beyond can explore this data for themselves.

None of this would be possible without the investment of time and energy of the individuals who participated in this project, and we want to acknowledge each of you who volunteered to help us understand this aspect of our site better. Thank you for your care and time. Community is central to our identity at Stack Overflow, and we are committed to making Stack Overflow a healthy, inclusive place for developers to learn and share knowledge.