SPONSORED BY ARM

Cloud-native infrastructure is the hardware and software that supports applications designed for the cloud. For compute infrastructure, the underlying hardware has traditionally been x86-based cloud instances on which containers and other IaaS (Infrastructure-as-a-Service) applications are built. In the last few years, developers have gained the option of deploying their cloud-native applications on multi-architecture infrastructure, which provides better performance and scalability for their applications and ultimately a lower total cost of ownership for their end customers.

In computing, a CPU architecture defines the instruction set that a CPU uses to manage workloads and manipulate data, and that compilers target when turning algorithms into binaries. Initially, cloud infrastructure was standardized around legacy architectures, such as the x86 instruction set (the 64-bit version is also referred to as AMD64, x86-64, or x64). Today, cloud providers and server vendors offer Arm-based platforms (also referred to as ARM64 or AArch64). For a broad set of cloud-native applications, the Arm architecture offers increased application performance and scalability. Moving workloads from legacy architectures to Arm also improves sustainability, thanks to the energy efficiency of the Arm architecture. The overall solution provides a better total cost of ownership.

The two primary architectures, x86 and Arm, take different approaches to performance and efficiency. Traditional x86 servers grew out of PCs, where a single user ran a wide range of software on a single computer. They use symmetric multithreading (SMT) to increase performance. Arm servers are designed for cloud-native applications made up of microservices and containers, and they do not use multithreading. Instead, they use higher core counts to increase performance. Designing for cloud-native applications in this way results in less resource contention, more consistent performance, and better security than multithreading. It also means you don't need to overprovision compute resources. The Arm architecture also offers efficiency advantages that lower power consumption and improve sustainability. More than 270 billion Arm-based chips have shipped to date.

Because of these advantages, software developers have embraced the opportunity to develop cloud-native applications and workloads for Arm-based platforms. This lets them unlock the platform's benefits of better price performance and energy efficiency. Arm-based hardware is offered by all major cloud providers: Amazon Web Services (AWS) (Graviton), Microsoft Azure (Dpsv5), Google Cloud Platform (GCP) (T2A), and Oracle Cloud Infrastructure (OCI) (A1). AWS Graviton processors are custom-built by AWS using 64-bit Arm Neoverse cores to deliver the best price performance for cloud workloads running in Amazon EC2. Azure and GCP offer Arm-based virtual machines built on Ampere Altra and AmpereOne processors. Recently, Azure also announced its own custom-built Arm-based processor, Cobalt 100.

The shift to multi-architecture infrastructure is driven by several factors, including the need for greater efficiency, cost savings, and sustainability in cloud computing. To gain these advantages, developers must follow multi-architecture best practices for software development, add Arm architecture support to containers, and deploy these containers on hybrid Kubernetes clusters with x86 and Arm-based nodes.

Best practices for multi-architecture infrastructure

All major OS distributions support the Arm architecture, as do major languages and runtimes such as Java, .NET, C/C++, Go, Python, and Rust.

- Java programs are compiled to bytecode that can run on any JVM regardless of underlying architecture without the need for recompilation. All the major flavors of Java are available on Arm-based platforms including OpenJDK, Amazon Corretto, and Oracle Java.

- .NET 5 and later support Linux on ARM64/AArch64-based platforms. Each release of .NET brings more optimizations for Arm-based platforms.

- Recent releases of Go, Python, and Rust bring performance improvements on Arm for a range of applications.

In this continuously evolving world of containers and microservices, multi-architecture container images are the easiest way to deploy applications while hiding the underlying hardware architecture. Building multi-architecture images is slightly more complex than building single-architecture images. Docker provides two ways to create multi-architecture images: `docker manifest` and `docker buildx`.

- With `docker manifest`, you can build each architecture separately and join the results into a multi-architecture image. This is a slightly more involved approach that requires a good understanding of how manifest files work.

- `docker buildx` makes it easy and efficient to build multi-architecture images with a single command. An example of how `docker buildx` builds multi-architecture images is presented in the next section.
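To see what a multi-architecture image actually is, it helps to look at the manifest list (called an image index in the OCI specification) that both approaches produce. It is a JSON document whose `manifests` array points at one image manifest per platform. The Go sketch below decodes such an index and lists its platforms. The sample JSON is a trimmed, hypothetical example with placeholder digests. Registries may also use the Docker media type `application/vnd.docker.distribution.manifest.list.v2+json`, but the fields read here are the same. You can print the raw JSON for your own image with `docker buildx imagetools inspect --raw <image>`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Platform is the OS/CPU pair a per-architecture manifest was built for.
type Platform struct {
	Architecture string `json:"architecture"`
	OS           string `json:"os"`
	Variant      string `json:"variant,omitempty"`
}

// Descriptor is one entry in the index, pointing at a single-platform manifest.
type Descriptor struct {
	MediaType string   `json:"mediaType"`
	Digest    string   `json:"digest"`
	Size      int64    `json:"size"`
	Platform  Platform `json:"platform"`
}

// Index models the top-level manifest list / OCI image index.
type Index struct {
	SchemaVersion int          `json:"schemaVersion"`
	MediaType     string       `json:"mediaType"`
	Manifests     []Descriptor `json:"manifests"`
}

// platforms returns an "os/arch" string for every manifest in the index.
func platforms(raw []byte) ([]string, error) {
	var idx Index
	if err := json.Unmarshal(raw, &idx); err != nil {
		return nil, err
	}
	out := make([]string, 0, len(idx.Manifests))
	for _, m := range idx.Manifests {
		out = append(out, m.Platform.OS+"/"+m.Platform.Architecture)
	}
	return out, nil
}

// sampleIndex is hypothetical: digests and sizes are placeholders.
const sampleIndex = `{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {"mediaType": "application/vnd.oci.image.manifest.v1+json",
     "digest": "sha256:aaaa", "size": 1000,
     "platform": {"architecture": "amd64", "os": "linux"}},
    {"mediaType": "application/vnd.oci.image.manifest.v1+json",
     "digest": "sha256:bbbb", "size": 1000,
     "platform": {"architecture": "arm64", "os": "linux", "variant": "v8"}}
  ]
}`

func main() {
	ps, err := platforms([]byte(sampleIndex))
	if err != nil {
		panic(err)
	}
	fmt.Println(ps) // [linux/amd64 linux/arm64]
}
```

When a node pulls the image, the container runtime picks the entry whose platform matches the node. That is why a single image tag can serve both x86 and Arm nodes.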

Another important part of the software development lifecycle is continuous integration and continuous delivery (CI/CD). CI/CD pipelines are crucial for automating the build, test, and deployment of multi-architecture applications, and you can build your application code natively on Arm within them. For example, GitLab and GitHub offer runners that build your application natively on Arm-based platforms. You can also use AWS CodeBuild and AWS CodePipeline as your CI/CD tools. If you already have an x86-based pipeline, start by creating a separate pipeline with ARM64 build and deployment targets, and check whether any unit tests fail on Arm. Use blue-green deployments to stage your application and test its functionality. Alternatively, use canary-style deployments to route a small percentage of traffic to Arm-based deployment targets for your users to try.
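A canary rollout needs a rule for deciding which users reach the Arm-based targets. One common approach is to hash a stable user identifier into 100 buckets and send users in the lowest `armPercent` buckets to Arm. The Go sketch below illustrates that approach. It is not a feature of any particular CI/CD or load-balancing tool, and the function names and the 10% figure are hypothetical.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// bucket maps a user ID to a stable number in [0, 100).
func bucket(userID string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(userID)) // writes to a hash.Hash never return an error
	return h.Sum32() % 100
}

// target returns "arm64" for roughly armPercent% of users and "amd64" for the
// rest. The same user ID always maps to the same target.
func target(userID string, armPercent uint32) string {
	if bucket(userID) < armPercent {
		return "arm64"
	}
	return "amd64"
}

func main() {
	// Route 1,000 hypothetical users with a 10% canary on Arm.
	counts := map[string]int{}
	for i := 0; i < 1000; i++ {
		counts[target(fmt.Sprintf("user-%d", i), 10)]++
	}
	fmt.Println(counts)
}
```

Hashing keeps each user on the same architecture, so a regression on Arm shows up consistently for one small group rather than intermittently for everyone. Picking a target at random per request would not give that consistency. Raising `armPercent` step by step widens the rollout.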

An example project

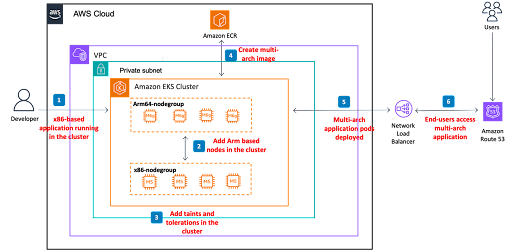

Let’s look at an example of a cloud-native application and infrastructure stack and what it takes to make it multi-architecture. The figure below depicts a scenario of a Go-based web application deployed in an Amazon EKS cluster and high-level steps on how to make it multi-architecture.

- Create a multi-architecture Amazon EKS cluster to run the x86/amd64 versions of the application.

- Add Arm-based nodes to the cluster. Amazon EKS supports node groups of multiple architectures running in a single cluster, so you can add Arm-based nodes such as m7g and c7g EC2 instances. This results in a hybrid EKS cluster with both x86 and ARM64 nodes.

- Add taints and tolerations to the cluster. When you start running multiple architectures, you need to control which nodes your pods are scheduled on, using taints and tolerations or a Kubernetes node selector. A node taint tells the Kubernetes scheduler that a node is reserved, for example for workloads of one architecture only. A toleration designates the pods that are allowed to run on tainted nodes. A node selector works from the pod side and restricts a pod to nodes with matching labels, such as `kubernetes.io/arch`. In a hybrid cluster with nodes of different architectures (x86 and ARM64), tainting the nodes prevents pods from being scheduled on the wrong architecture.

- Create a multi-architecture Dockerfile, build a multi-architecture Docker image, and push it to a container registry of your choice, such as a Docker registry or Amazon ECR.

- Deploy this multi-arch application image in the hybrid EKS cluster with both x86 and Arm-based nodes.

- Users access the multi-architecture version of the application using the same Load Balancer.

Let’s see how you can implement each of these steps in an example project. You can follow this entire use case with an Arm Learning Path.

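Create a multi-architecture Amazon EKS cluster directly in the AWS console, or use `eksctl` with the following YAML configuration file (for example, save it as `cluster.yaml` and run `eksctl create cluster -f cluster.yaml`):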

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: multi-arch-cluster
  region: us-east-1

nodeGroups:
  - name: x86-node-group
    instanceType: m5.large
    desiredCapacity: 2
    volumeSize: 80
  - name: arm64-node-group
    instanceType: m6g.large
    desiredCapacity: 2
    volumeSize: 80
```

When you run the following command, you should see both architectures printed on the console:

```bash
kubectl get node -o jsonpath='{.items[*].status.nodeInfo.architecture}'
```
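The jsonpath query prints one architecture per node, separated by spaces. If you want a script to confirm the cluster really is hybrid, for example as a pipeline step, a small Go program can read that output on standard input and fail when an architecture is missing. This is a hypothetical helper, not part of `kubectl`. The file name `checkarch.go` is an assumption.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"strings"
)

// countArchs tallies the space-separated architectures printed by kubectl.
func countArchs(output string) map[string]int {
	counts := map[string]int{}
	for _, arch := range strings.Fields(output) {
		counts[arch]++
	}
	return counts
}

// missing returns the required architectures that have no nodes.
func missing(counts map[string]int, required ...string) []string {
	var out []string
	for _, r := range required {
		if counts[r] == 0 {
			out = append(out, r)
		}
	}
	return out
}

func main() {
	raw, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	counts := countArchs(string(raw))
	fmt.Println("nodes per architecture:", counts)
	if m := missing(counts, "amd64", "arm64"); len(m) > 0 {
		fmt.Fprintln(os.Stderr, "missing architectures:", m)
		os.Exit(1)
	}
}
```

Usage: `kubectl get node -o jsonpath='{.items[*].status.nodeInfo.architecture}' | go run checkarch.go`. With the cluster configuration above (two nodes per group), the program would report two amd64 and two arm64 nodes and exit with status 0.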

To pin a workload to one architecture, add the following `nodeSelector` block to the pod template spec (`spec.template.spec`) of the x86/amd64 or ARM64 version of your Kubernetes deployment file. This ensures that the pods are scheduled on nodes of the correct architecture.

```yaml
nodeSelector:
  kubernetes.io/arch: arm64
```

For more details, follow the Arm Learning Path mentioned above.
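The two placement mechanisms described above can be modeled in a few lines of Go. The sketch is a simplified illustration of the rules in the Kubernetes documentation, not the scheduler's actual code. A pod fits a node only if every `nodeSelector` label matches and every `NoSchedule` or `NoExecute` taint on the node is tolerated. The taint key `arch` is hypothetical. The `kubernetes.io/arch` label is the well-known label Kubernetes sets on each node.

```go
package main

import "fmt"

// Taint is placed on a node (simplified model).
type Taint struct{ Key, Value, Effect string }

// Toleration is placed on a pod; Operator is "Equal" (the default) or "Exists".
type Toleration struct{ Key, Operator, Value, Effect string }

type Node struct {
	Name   string
	Labels map[string]string
	Taints []Taint
}

type Pod struct {
	NodeSelector map[string]string
	Tolerations  []Toleration
}

// tolerates reports whether toleration tol matches taint t.
func tolerates(tol Toleration, t Taint) bool {
	if tol.Effect != "" && tol.Effect != t.Effect {
		return false // an empty effect matches every effect
	}
	if tol.Key == "" {
		return tol.Operator == "Exists" // empty key + Exists tolerates all taints
	}
	if tol.Key != t.Key {
		return false
	}
	return tol.Operator == "Exists" || tol.Value == t.Value
}

// fits applies both checks: nodeSelector labels and hard (non-Prefer) taints.
func fits(p Pod, n Node) bool {
	for k, v := range p.NodeSelector {
		if got, ok := n.Labels[k]; !ok || got != v {
			return false
		}
	}
	for _, t := range n.Taints {
		if t.Effect == "PreferNoSchedule" {
			continue // a soft preference, not a hard filter
		}
		tolerated := false
		for _, tol := range p.Tolerations {
			if tolerates(tol, t) {
				tolerated = true
				break
			}
		}
		if !tolerated {
			return false
		}
	}
	return true
}

func main() {
	armNode := Node{
		Name:   "arm-node-1",
		Labels: map[string]string{"kubernetes.io/arch": "arm64"},
		Taints: []Taint{{Key: "arch", Value: "arm64", Effect: "NoSchedule"}},
	}
	x86Node := Node{Name: "x86-node-1", Labels: map[string]string{"kubernetes.io/arch": "amd64"}}
	armPod := Pod{
		NodeSelector: map[string]string{"kubernetes.io/arch": "arm64"},
		Tolerations:  []Toleration{{Key: "arch", Operator: "Equal", Value: "arm64", Effect: "NoSchedule"}},
	}
	plainPod := Pod{}
	for _, n := range []Node{armNode, x86Node} {
		fmt.Printf("%s: armPod=%v plainPod=%v\n", n.Name, fits(armPod, n), fits(plainPod, n))
	}
}
```

Running it prints `arm-node-1: armPod=true plainPod=false` and `x86-node-1: armPod=false plainPod=true`. The taint keeps untolerating pods off the Arm node, and the node selector keeps the Arm pod off the x86 node. Together, they confine each single-architecture workload to the nodes it was built for.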

Next, create a multi-architecture Dockerfile. For the example application, you can use the source code files in the GitHub repository. It's a Go-based web application that prints the architecture of the Kubernetes node it's running on. Let's look at the Dockerfile in this repo:

```dockerfile
ARG T

#
# Build: 1st stage
#
FROM golang:1.21-alpine AS builder
ARG TARCH
WORKDIR /app
COPY go.mod .
COPY hello.go .
RUN GOARCH=${TARCH} go build -o /hello && \
    apk add --update --no-cache file && \
    file /hello

#
# Release: 2nd stage
#
FROM ${T}alpine
WORKDIR /
COPY --from=builder /hello /hello
RUN apk add --update --no-cache file
CMD [ "/hello" ]
```

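Note the `RUN` statement in the first (build) stage of the Dockerfile:

```dockerfile
RUN GOARCH=${TARCH} go build -o /hello
```

`GOARCH` tells the Go compiler which CPU architecture to build the binary for. If the `TARCH` build argument is not set, `GOARCH` is empty and Go falls back to the architecture of the build container. When `docker buildx` builds for several platforms, it runs the build stage once per target platform, so each image receives a matching binary. That's it! That's all it takes to convert this application to run on multiple architectures. Some complex applications may require additional work, but this example shows how easy it is to get started with multi-architecture containers.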

You can use docker buildx to build a multi-architecture image and push it to the registry of your choice.

```bash
docker buildx create --name multiarch --use --bootstrap
docker buildx build -t <your-docker-repo-path>/multi-arch:latest --platform linux/amd64,linux/arm64 --push .
```

Deploy the multi-architecture Docker image in the EKS (Kubernetes) cluster.

Let’s use the following Kubernetes yaml file to deploy this image in our EKS cluster.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: multi-arch-deployment
  labels:
    app: hello
spec:
  replicas: 6
  selector:
    matchLabels:
      app: hello
      tier: web
  template:
    metadata:
      labels:
        app: hello
        tier: web
    spec:
      containers:
        - name: hello
          image: <your-docker-repo-path>/multi-arch:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
          resources:
            requests:
              cpu: 300m
```

To access the multi-architecture version of the application, run the following loop, replacing `<external_ip>` with the external address of the application's load balancer. You should see a mix of arm64 and amd64 messages.

```bash
for i in $(seq 1 10); do curl -w '\n' http://<external_ip>; done
```
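If you would rather count the responses than read them, the Go sketch below sends the same ten requests and tallies how many mention each architecture. It assumes each response body names its architecture, as the sample output of the application does. The program and its file name `tally.go` are hypothetical. Keep-alives are disabled so that, like separate `curl` invocations, each request opens a new connection and can be balanced to a different pod.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"strings"
	"time"
)

// tally counts how many response bodies mention each architecture.
func tally(bodies []string) map[string]int {
	counts := map[string]int{}
	for _, b := range bodies {
		switch {
		case strings.Contains(b, "arm64"):
			counts["arm64"]++
		case strings.Contains(b, "amd64"):
			counts["amd64"]++
		default:
			counts["other"]++
		}
	}
	return counts
}

// fetch sends n GET requests to url on fresh connections and returns the bodies.
func fetch(url string, n int) ([]string, error) {
	client := &http.Client{
		Timeout:   5 * time.Second,
		Transport: &http.Transport{DisableKeepAlives: true},
	}
	bodies := make([]string, 0, n)
	for i := 0; i < n; i++ {
		resp, err := client.Get(url)
		if err != nil {
			return bodies, err
		}
		b, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			return bodies, err
		}
		bodies = append(bodies, string(b))
	}
	return bodies, nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: go run tally.go http://<external_ip>")
		os.Exit(2)
	}
	bodies, err := fetch(os.Args[1], 10)
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
	}
	fmt.Println(tally(bodies))
}
```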

Conclusion

For software developers who want to learn more about technical best practices for building cloud-native applications on Arm, we provide resources such as Learning Paths, which offer technical how-to information on a wide variety of topics. Also, check out the Arm Developer Hub to see how other developers are approaching multi-architecture development. There you get access to on-demand webinars, events, Discord channels, training, documentation, and more.