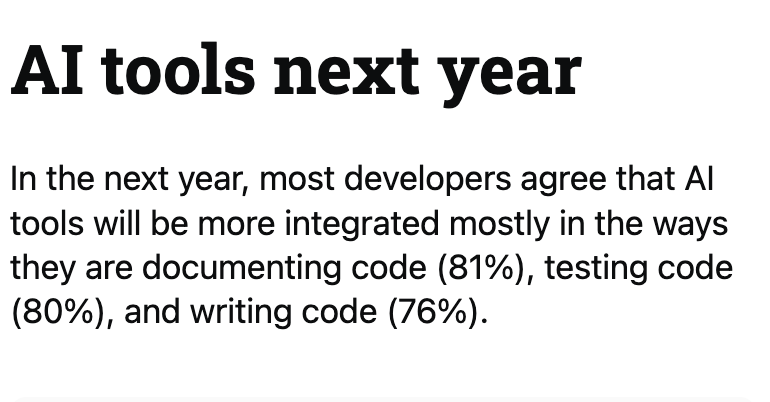

In our 2024 Developer Survey, many developers reported using AI-powered tools in their workflows. While generating code is the most common use case today, many named testing and documentation as the areas where they expect to use AI in the year to come.

We wanted to explore this result in more depth. Why do programmers think AI will become useful for testing specifically? Is that ultimately a more valuable application of GenAI (at least for now) than writing the software that powers your app or service? We chatted with three entrepreneurs who write code and manage teams, gathering perspectives on how this application of GenAI fits into individual and organizational workflows.

Two key takeaways emerged:

- More lines of code aren’t necessarily better, but code with fewer bugs is.

- Developers want AI to handle the work they enjoy less, and save the most creative and fulfilling parts for themselves.

“There’s no replacement for self-discipline and quality of work, which is why it’s best to focus these tools on the parts of our job where they can be reliably helpful,” says Ben Halpern, founder of Dev.to and co-founder of Forem. “Attempting to shoehorn generated code into parts of one’s workflow that do not benefit from what the tool does best can be like taking one step forward and two steps back.”

Halpern has made AI-generated tests a regular part of his workflow. “Testing code has wound up being a really effective use case for generative AI. It’s effective in the face of current limitations, but is also an area where future evolutions likely also benefit,” he explained. “Testing zealotry aside, most of the work that goes into writing tests is a matter of writing boilerplate, considering important high level logic, and following patterns established in the test suite.”

This approach works particularly well with test-driven development (TDD), where the test is written before the code itself. “The most important part of testing is that it gets done, and done thoroughly. When practicing proper TDD, I can save myself a lot of energy by describing the functionality I need and allowing the boilerplate to generate,” says Halpern. “If I’m writing tests after the fact, AI helps me generate thorough tests, so I’m not tempted to cut corners.”
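To make the test-first loop concrete, here is a minimal sketch. It assumes a hypothetical `slugify` helper (our own illustration, not something from Halpern's workflow): the tests are written first to describe the behavior, and the implementation is filled in afterward until they pass.

```python
import re
import unittest


# Step 1 (written first): the tests describe the behavior we want.
# `slugify` is a hypothetical helper used only for illustration.
class SlugifyTests(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(slugify("What's new, in 2024?"), "whats-new-in-2024")

    def test_empty_title_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")


# Step 2 (written second): the simplest implementation that passes.
def slugify(title: str) -> str:
    """Turn a post title into a URL-friendly slug."""
    # Drop everything except lowercase letters, digits, whitespace, hyphens.
    cleaned = re.sub(r"[^a-z0-9\s-]", "", title.lower())
    # Collapse runs of whitespace or hyphens into a single hyphen.
    return re.sub(r"[\s-]+", "-", cleaned).strip("-")
```

Saved to a file, this can be run with `python -m unittest`. The test class is the kind of boilerplate a developer might ask an AI tool to draft from a plain-language description, then review before writing the function.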

In our conversations with programmers, a theme that emerged is that many coders see testing as work they HAVE to do, not work they WANT to do. Testing is a best practice that results in a better final outcome, but it isn’t much fun. It’s like taking the time to review your answers after finishing a math test early: crucial for catching mistakes, but not really how you want to spend your free time. For more than a decade, folks have been debating the value of tests on our sites. While no one denies the importance of testing, plenty of folks complain about it becoming an ever-larger part of their everyday workload.

The dislike some developers have for writing tests is a feature, not a bug, for startups working on AI-powered testing tools. CodiumAI is a startup that has made testing the centerpiece of its AI-powered coding tools. “Our vision and our focus is around helping verify code intent,” says Itamar Friedman. He acknowledges that many devs see testing as a chore. “I think many developers do not tend to add tests too much during coding because they hate it, or they actually do it because they think it's important, but they still hate it or find it as a tedious task.”

The company offers an IDE extension, Codiumate, that acts as a pair programmer while you work: “We try to automatically raise edge cases or happy paths and challenge you with a failing test and explain why it might actually fail.” Friedman says that he often meets with teams or CTOs that view testing as a matter of coverage. Whether a team treats coverage as a question of quantity or of quality can make a big difference, he says: “When you're generating tests, you make sure that you cover important behaviors of your software. It's not how many lines you cover, rather how many important behaviors.”
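The gap between covering lines and covering behaviors can be shown with a small sketch. It assumes a hypothetical `average_rating` function (our own example, not CodiumAI's code). In its naive one-line form, `sum(ratings) / len(ratings)`, a single happy-path test would execute every line and still miss the empty-list case. Writing one test per behavior surfaces that edge case, which the version below handles.

```python
import unittest
from typing import Sequence


def average_rating(ratings: Sequence[int]) -> float:
    """Mean of the ratings, or 0.0 when there are none yet.

    Hypothetical example: returning 0.0 for an empty list is an
    assumed product decision, not a universal rule.
    """
    if not ratings:
        # Without this guard, an empty list raises ZeroDivisionError.
        return 0.0
    return sum(ratings) / len(ratings)


class AverageRatingBehaviors(unittest.TestCase):
    # Each test names one behavior rather than targeting a line count.
    def test_typical_list_returns_mean(self):
        self.assertEqual(average_rating([4, 5, 3]), 4.0)

    def test_single_rating_returns_that_rating(self):
        self.assertEqual(average_rating([5]), 5.0)

    def test_no_ratings_returns_zero_instead_of_crashing(self):
        self.assertEqual(average_rating([]), 0.0)
```

The first test alone would have reported full line coverage for the naive version. The third test is the one that encodes a decision about how the software should behave.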

In a similar fashion, Friedman says AI code generation needs to focus less on quantity and more on quality. “Two different customers told me they're using code generation. In the beginning it's a lot of excitement, but when they really try to analyze the effectiveness, they think it kind of sums to zero.” This raises an important question: what is the real ROI on AI systems that can generate a lot of code quickly, with some portion of that code being subpar? “Our first goal is not helping you generate more code,” says Friedman, “but rather making it easier for you to think about your code and verify your code. Maybe you generate less code, but it’s higher-quality.”

In the GenAI era, companies undoubtedly have the ability to generate code more quickly. Lines of code, however, don’t necessarily equate to better business logic or product performance. “One thing everyone can agree on, is that having code with fewer bugs is a long term benefit,” says Friedman. Think of it this way: rather than building a bigger airplane, build one that costs less over time to maintain and repair. Rather than using GenAI to double the amount of code you produce, generate the same amount of code, but ensure that what you have is far less likely to fail, given that failures can be enormously costly.

Syed Hamid is the founder and CEO of Sofy, which has built a product to help developers with TDD, unit testing, and visual testing. We interviewed him for our podcast and discussed AI testing. “What people do today is write a bunch of scripts with CI/CD integration. They write a bunch of scripts to set up environments. You have the manual testing and you have the automated testing,” says Hamid. “We have taken a little bit of a different approach. How we see the future is more of an intelligent testing, where the software can analyze itself and generate useful tests. You might say to the system, ‘Hey, for a given product change, tell me what's impacted.’”

Sofy’s system can identify a new feature and create a test for it, or even write the test based on what you’re planning to build. “I can now actually look at your functional spec or a story in your Confluence page and I can actually generate the test case’s route out of that and map it to what I have tested before,” says Hamid. “It's amazing what we can accomplish today. Obviously, it's not going to fully replace people, but it's going to augment.”

Stack Exchange user Esoterik makes the point that writing tests is a way to pay it forward. “I remember from a software engineering course, that one spends ~10% of development time writing new code, and the other 90% is debugging, testing, and documentation. Since unit-tests capture the debugging, and testing effort into (potentially automate-able) code, it would make sense that more effort goes into them; the actual time taken shouldn't be much more than the debugging and testing one would do without writing the tests. Finally tests should also double as documentation!”

This last point is key. Our customers use Stack Overflow for Teams as a way to organize knowledge and ensure that code documentation is accurate and up-to-date. With OverflowAI, we’ve built an extension that sits inside the IDE (Visual Studio Code for now, with more IDEs coming soon) where a developer is working, allowing them to capture important information about the software and tests they are writing and ensuring a blueprint is available for the coworkers who will have to wrangle their codebase in the years to come.