One of the most striking things about today's generative AI models is the absolutely enormous amount of data that they train on. Meta wrote, for example, that its Llama 3 model was trained on 15 trillion tokens, which is equal to roughly 44 TERABYTES of disk space. In the case of large language models this usually means terabytes of text from the internet, although the newest generations of multimodal models also train on video, audio, and images.

The internet, like the oceans of planet Earth, has always been viewed as an inexhaustible resource. Not only is it enormous to begin with, but billions of users are adding fresh text, audio, images, and video every day. Recently, however, researchers have begun to examine the impact this data consumption is having.

“In a single year (2023-2024) there has been a rapid crescendo of data restrictions from web sources,” write the authors of a paper from the Data Provenance Initiative, a volunteer collective of AI researchers from around the world, including experts from schools like MIT and Harvard, and advisors from companies like Salesforce and Cohere. For some of the largest and most popular collections of open data typically used to train large AI models, as much as 45% has now been restricted. “If respected or enforced, these restrictions are rapidly biasing the diversity, freshness, and scaling laws for general-purpose AI systems.”

If you’re thinking about this in terms of your organization, there are some questions you might start to consider:

- If you’re training your own in-house model, will you continue to have access to a large source of high quality public data from the open web?

- As you scale the size of your model and its training data, it becomes slower and more expensive each time you respond to a user’s query. How can you find the best tradeoff between improving your model and delivering fast, affordable results?

A bottleneck on the horizon

The observation that AI models need ever more data, and that public sources of data are being increasingly restricted in response, raises a critical question: can the production of new data keep up with the ever growing demands of ever larger AI models? A research paper from Epoch AI, which describes itself as “a team of scientists investigating the future of AI,” found that as soon as 2026 AI models may already consume the entire supply of public human text data as part of their training.

One of the seminal essays in the field of AI research is The Bitter Lesson, which states that the most productive approach to improving AI over the last four decades boils down to two simple axes: more data and more compute. If AI models reach a point where progress requires more data to scale than humanity has ever uploaded to the internet, what happens?

“There is a serious bottleneck here,” Tamay Besiroglu, one of the authors of Epoch’s research paper, told the Associated Press. “If you start hitting those constraints about how much data you have, then you can’t really scale up your models efficiently anymore. And scaling up models has been probably the most important way of expanding their capabilities and improving the quality of their output.

Synthetic data, generational loss, and model collapse

One option for AI companies hitting this data bottleneck will be the use of “synthetic data.” This refers to data created by AI systems to mimic data created by humans. The concern here is that data created by an AI might imitate or even increase the number of errors found in the human training data, as it will have both the imperfections of the original data generated by humans and new hallucinations of its own.

Another concern is that the internet is quickly being flooded with new content generated not by human beings, but by AI systems themselves. When AI is trained on AI generated data, an interesting effect emerges.

What happens if you post a photo to Instagram, copy that image, and then upload the copied image as a new post? While it might be difficult to tell the difference the first three or four times, as you go on, the image begins to degrade before your eyes.

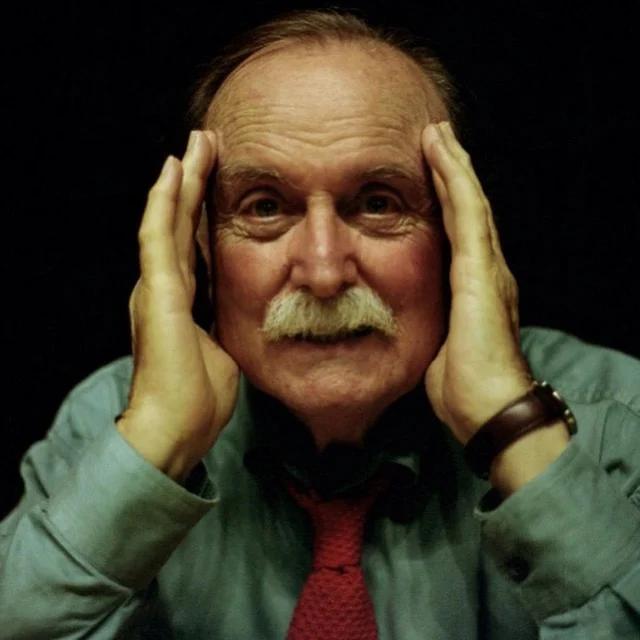

The original image posted by the artist Pete Ashton looked like this:

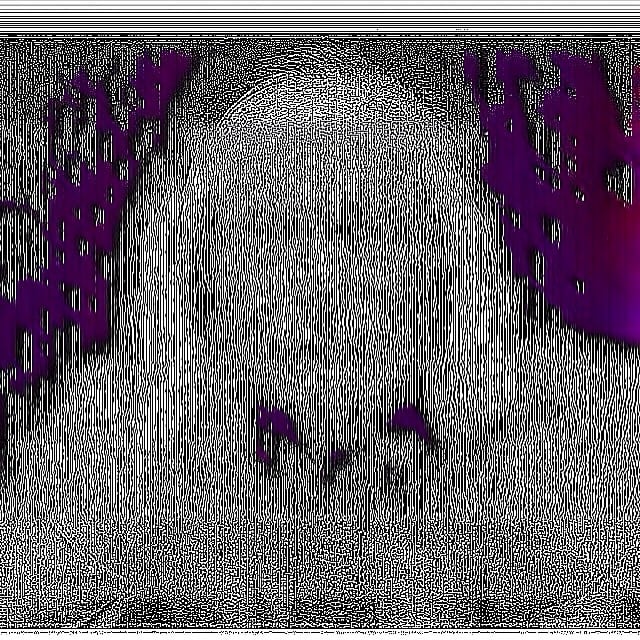

The 90th copy looked like this:

This effect is known as generational loss, and it showcases a loss in quality between the original data and the copies or transcodes you make. This effect is not limited to visual images. In 1969, the composer Alvin Lucier made a recording of himself reading a paragraph of text. He then played that recording on tape and recorded the recording, a process he repeated over and over again. As the author and music scholar Edward Strickland wrote, the original language becomes more and more difficult to understand, eventually degrading into unintelligible sounds that are overshadowed by the resonant room tone where the recording was made.

A question that has emerged with the rise of large language models is what will happen if they run out of fresh data to train on, or if a larger and larger portion of the data they consume from the web was itself created by an LLM-powered GenAI system. As an article in the NY Times noted, AI trained on too much synthetic data can experience what is known as model collapse, a serious degradation in its capabilities.

Teaching from the textbook

The better the training data, the smarter the model

There are examples of training with synthetic data, however, that offer hope for the future. Until recently, it seemed as though the capability of an AI model was in some way intrinsically connected to its size, with their capabilities growing alongside their scale. As Rich Sutton wrote in The Bitter Lesson, researchers wanted to find clever ways to improve AI’s knowledge, but were repeatedly confronted with breakthroughs that emerged mostly from adding more data and compute. The most powerful models were trained on millions, then billions, then hundreds of billions of parameters. As Jonathan Frankel of MosaicML said on our podcast, this was a bit disheartening for the academic and open source community, as it appeared you needed a massive amount of data and compute (and thus time and money) in order to achieve cutting-edge results.

But recently, smaller and smaller models have been able to compete with massive, foundational models on a number of the benchmark tests. A trio of papers from Microsoft research have highlighted this potential path. The most recent example, Phi-1, is especially interesting to the folks here at Stack Overflow, as it uses data from our community as part of its training set...well, sort of.

This model is 1.3 billion parameters, so roughly 1% the size of the model behind the original ChatGPT, far smaller than GPT4, and compact enough to be run on a high-end smartphone. Rather than relying on a massive training set—say, all the text on the internet—the authors of this paper focused on data quality. This followed earlier work that found that training on a very small corpus of short stories, crafted explicitly to fine tune towards a certain goal, allowed a very small model to gain a surprising level of reasoning and linguistic ability.

In the case of Phi-1, it relied on coding examples, but not just the usual text from the big, openly available datasets. As the authors explain, the standard datasets used for training models on code have several drawbacks:

- Many samples are not self-contained, meaning that they depend on other modules or files that are external to the snippet, making them hard to understand without additional context.

- Typical examples do not involve any meaningful computation, but rather consist of trivial or boilerplate code, such as defining constants, setting parameters, or configuring GUI elements.

- Samples that do contain algorithmic logic are often buried inside complex or poorly-documented functions, making them difficult to follow or learn from.

- The examples are skewed towards certain topics or use cases, resulting in an unbalanced distribution of coding concepts and skills across the dataset.

As they write, “One can only imagine how frustrating and inefficient it would be for a human learner to try to acquire coding skills from these datasets, as they would have to deal with a lot of noise, ambiguity, and incompleteness in the data. We hypothesize that these issues also affect the performance of language models, as they reduce the quality and quantity of the signal that maps natural language to code. We conjecture that language models would benefit from a training set that has the same qualities as what a human would perceive as a good “textbook”: it should be clear, self-contained, instructive, and balanced.”

To improve the data quality, the authors took code examples from The Stack and Stack Overflow as their base, then asked GPT3.4 and GPT4 to identify which snippets provided the best "educational value for a student whose goal is to learn basic coding concepts." In other words, they used the best bits of data from sources like Stack Overflow to help one of today’s cutting-edge foundational models generate synthetic data that could be used to train a much smaller, more accessible model.

Quality and structure can matter as much as quantity

GPT3.5, Replit, Palm2, and others on a benchmark coding challenge. This led Andrej Karpathy, a veteran of Tesla’s AI program who now works at OpenAI, to remark, “We'll probably see a lot more creative ‘scaling down’ work: prioritizing data quality and diversity over quantity, a lot more synthetic data generation, and small but highly capable expert models.”

The findings in the paper validate another one of Stack Overflow’s central beliefs about this new era of GenAI: the importance of quality over quantity. The one and a half decades our community has spent asking questions, providing answers, and maintaining the highest quality knowledge base makes our data set uniquely valuable.

Learning how to shape great questions and answers about code turns out to be a uniquely valuable skill in the era of GenAI. As Mark Zuckerberg said on a recent podcast interview:

“The thing that has been a somewhat surprising result over the last 18 months is that it turns out that coding is important for a lot of domains, not just coding. Even if people aren't asking coding questions, training the models on coding helps them become more rigorous in answering the question and helps them reason across a lot of different types of domains. That's one example where for LLaMa-3, we really focused on training it with a lot of coding because that's going to make it better on all these things even if people aren't asking primarily coding questions.”

What does all of this mean for your organization? The central takeaway is that, even if you’re not investing heavily in putting GenAI into production now, the best way to lay a foundation for working with this technology in the future is to find ways to organize and optimize the information inside your company, making it easier for AI assistants of the future to help your employees be both productive and accurate.

If you want to learn more about how we organize knowledge inside our company, or how we help clients like Microsoft and Bloomberg to build and curate their internal knowledge bases, check out Stack Overflow for Teams.