In this day and age, we developers have grown accustomed to having the perfect development environment, from finely tuned vim/emacs setups to massive AI-powered suites that make software development just so convenient. Built-in language servers, linters, automatic documentation generation, syntax highlighting, even version control: all of these are artefacts of a modern software development ecosystem. We are all living the dream!

However... it was not always like this. Even if we go back just a few years, things looked a bit different. But what if we go back 20 years? How about 40? How about 70? Would we even be able to recognize the way software was being built back then? Let's explore what the history of software development looked like, and what we can expect in the future.

Do what with the card?

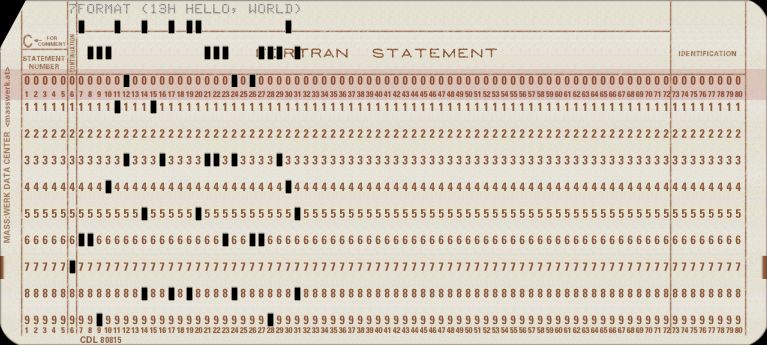

Do you know why some text editors and developers like to break their code onto a new line once it reaches 80 columns? Even Python's own PEP 8 style guide suggests nearly the same thing (it caps lines at 79 characters)! Well, it has to do with the punch card. Yes, punch cards, the thing we all think about when we try to imagine what computers were like in the 1960s. Back then, our fellow software developers used punch cards to store and execute code on big mainframe computers.

The actual development was not done on the card itself. Rather, the program was written on something called a coding sheet: a wonderful (usually) green and white piece of paper, where a programmer would write and debug the code. These sheets could actually take multiple lines of code. You can see an example here:

The sheet would then be shipped out to a "punch card department", where it would be converted into actual punch cards. This was usually done on machines such as the IBM 029. The most you could fit on a card was 80 characters (including spaces). That's it. Anything more than that, and you needed a new card. This means that a single coding sheet could result in multiple cards.

For any significant piece of software back then, you needed stacks of punch cards. Yes, 1000 lines of code needed 1000 cards. And you needed to have them in order. Now, imagine dropping that stack of 1000 cards! It would take me ages to get them back in order. Devs back then experienced this a lot, so some of them came up with creative ways of indicating the order of their cards.
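To make the ordering problem concrete, here is a minimal sketch in C. It assumes one widely used convention (not necessarily the one any particular shop relied on): the last eight columns of each card, 73 to 80, hold a sequence number, leaving columns 1 to 72 for the code itself. The names `make_card`, `card_sequence`, and `sort_deck` are made up for this illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define CARD_COLUMNS 80 /* one punch card holds 80 characters */
#define CODE_COLUMNS 72 /* assumption: columns 1-72 carry the code */
#define SEQ_WIDTH    8  /* assumption: columns 73-80 carry a sequence number */

/* Build an 80-column card image: code padded to 72 columns, then the number. */
void make_card(char out[CARD_COLUMNS + 1], const char *code, long seq)
{
    assert(seq >= 0 && seq <= 99999999L);
    snprintf(out, CARD_COLUMNS + 1, "%-72.72s%08ld", code, seq);
}

/* Read the sequence number stored in columns 73-80 of a card image. */
static long card_sequence(const char *card)
{
    char field[SEQ_WIDTH + 1];

    assert(strlen(card) == CARD_COLUMNS);
    memcpy(field, card + CODE_COLUMNS, SEQ_WIDTH);
    field[SEQ_WIDTH] = '\0';
    return strtol(field, NULL, 10);
}

/* qsort comparator: order two cards by their sequence numbers. */
static int compare_cards(const void *a, const void *b)
{
    long sa = card_sequence(*(const char *const *)a);
    long sb = card_sequence(*(const char *const *)b);
    return (sa > sb) - (sa < sb);
}

/* Put a dropped deck back in order. */
void sort_deck(const char **deck, size_t count)
{
    qsort(deck, count, sizeof deck[0], compare_cards);
}
```

Calling `sort_deck` on an array of pointers to card images reorders the pointers by sequence number. Numbering the cards in steps of 10, like BASIC line numbers, would leave room to slot new cards in later without renumbering the whole deck.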

So what happened with these punch cards, and how were they used? Usually they were used in batch processing, meaning you would drop off your stack of cards at a computing center and have them run your software when compute time became available. This was a one-and-done type of thing: if it failed, it failed. You had to debug on paper and try again. Sounds horrible, but this was revolutionary at the time.

If you want to try to write your own punch card, someone made this excellent web-based emulator. Give it a try!

We have programming at home

Let's step back from the world of business and mainframes, and let's go home. Let's meet our bedroom coder. By the mid 1970s affordable home computers were starting to become a reality. Instead of a computer just being a work thing, hobbyists started using computers for personal things—maybe we can call these, I don't know...personal computers. The world of Altair 8800, IMSAI 8080, Commodore PET, Apple II, and TRS-80 was coming to developers.

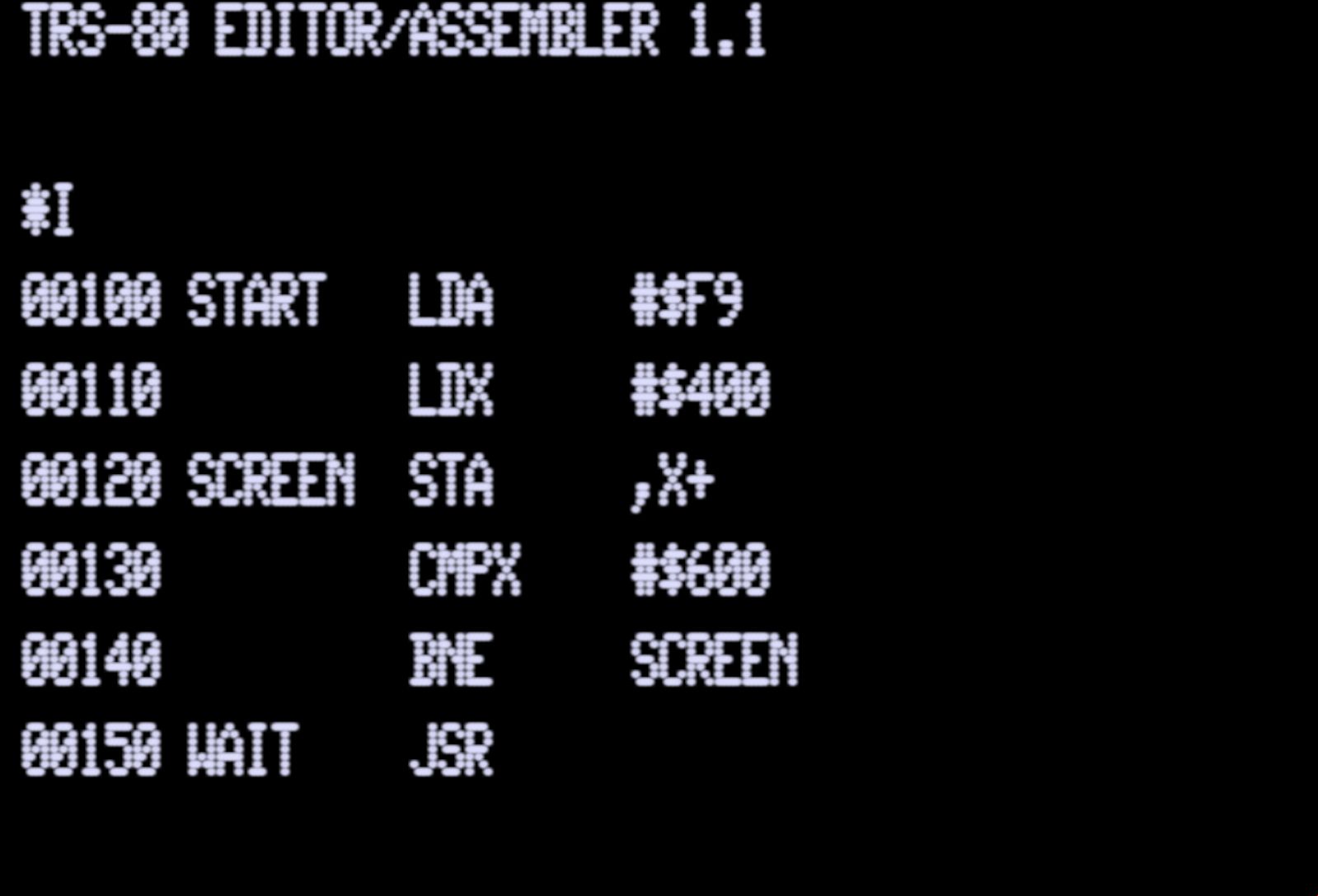

With these computers, we have both stepped back in time and into the future. Due to their limited capabilities, any serious software had to be written in either machine code or assembly (or in some cases, literally flipping switches). This allowed the developers to fully harness the power of the hardware by moving bits around. The syntax of the assembly would depend on the architecture the computer uses (Z80, MOS 6502, Intel 8080 ...), and sometimes even down to the assembler being used.

NOTE:

Assembler and assembly tend to be used interchangeably, but they are in reality two different things. Assembly is the actual language (the syntax and instructions being used) and is tightly coupled to the architecture. The assembler is the piece of software that assembles your assembly code into machine code, the thing your computer knows how to execute.

For example, on the Commodore 64, the assembly code ADC #$0c gets converted into the machine code $69 $0c by the assembler.
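To show what "assembling" means in practice, here is a toy sketch in C that translates a handful of 6502 instructions into bytes. It is nowhere near a real assembler: the table covers only five opcodes, only uppercase mnemonics, and only two operand forms (immediate `#$nn` and zero-page `$nn`). The function name `assemble_line` and the table layout are invented for this example. The opcode values are the standard 6502 ones, so the immediate form `ADC #$0c` comes out as $69 $0c while the zero-page form `ADC $0c` comes out as $65 $0c.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* One row of the opcode table: mnemonic + addressing mode -> opcode byte. */
struct opcode_entry {
    const char *mnemonic;
    int immediate;        /* 1 = "#$nn" operand, 0 = zero-page "$nn" operand */
    unsigned char opcode;
};

/* A tiny subset of the 6502 instruction set. */
static const struct opcode_entry OPCODES[] = {
    { "ADC", 1, 0x69 },
    { "ADC", 0, 0x65 },
    { "LDA", 1, 0xA9 },
    { "LDA", 0, 0xA5 },
    { "STA", 0, 0x85 },
};

/*
 * Assemble one line such as "ADC #$0c" into out[0] (opcode) and out[1]
 * (operand). Returns the number of bytes written (2), or 0 if the line
 * is not understood by this toy.
 */
size_t assemble_line(const char *line, unsigned char out[2])
{
    char mnemonic[4];
    unsigned int operand;
    int immediate;
    size_t i;

    assert(line != NULL && out != NULL);

    if (sscanf(line, "%3s #$%x", mnemonic, &operand) == 2) {
        immediate = 1;
    } else if (sscanf(line, "%3s $%x", mnemonic, &operand) == 2) {
        immediate = 0;
    } else {
        return 0;
    }
    if (operand > 0xFF) {
        return 0; /* only single-byte operands are supported here */
    }

    for (i = 0; i < sizeof OPCODES / sizeof OPCODES[0]; i++) {
        if (OPCODES[i].immediate == immediate &&
            strcmp(OPCODES[i].mnemonic, mnemonic) == 0) {
            out[0] = OPCODES[i].opcode;
            out[1] = (unsigned char)operand;
            return 2;
        }
    }
    return 0;
}
```

A real assembler also handles labels, directives such as ORG, and many more addressing modes, but the core job is the same table lookup from text to bytes.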

What did the development environment look like here? Let's take the Tandy TRS-80 Model I as an example. The dev (it feels so weird calling developers from this era 'devs') would load a tool called EDTASM via cassette tape, write their assembly code, save it back to the tape, then assemble it and run it. Sounds simple, right? Well, remember when I said "...by moving bits around..."? With assembly, you deal with memory addresses, meaning you hardcode the memory locations where you store your data. But what about the editor? Does the editor also exist in the same memory? Yes it does, and you have to keep that in mind, as it may sit in the range of addresses that you want to use in PROD (again, this feels so weird).

For example, when I wrote some assembly for the Commodore 64, I would use two different ORG definitions (my start memory location), depending on whether I was still writing the software or pushing it to PROD (I need to stop saying this). During development, my assembler and editor would get in the way. Huh... This must have been the first case of "but it works on my machine".

Not everything was assembly. Many of us (I am looking at you, dear reader) started with a little language known as BASIC (Beginners' All-purpose Symbolic Instruction Code). This was the entry point into the world of programming for a lot of people. And while it only allowed us to create painfully slow software, it was still software, and it was accessible to anyone. My first ever line of code was in BASIC on the Commodore 64.

Try out this simple bit of code in your favorite C64 emulator:

    10 PRINT CHR$(205.5+RND(1));
    20 GOTO 10

Now I'm C-ing the future

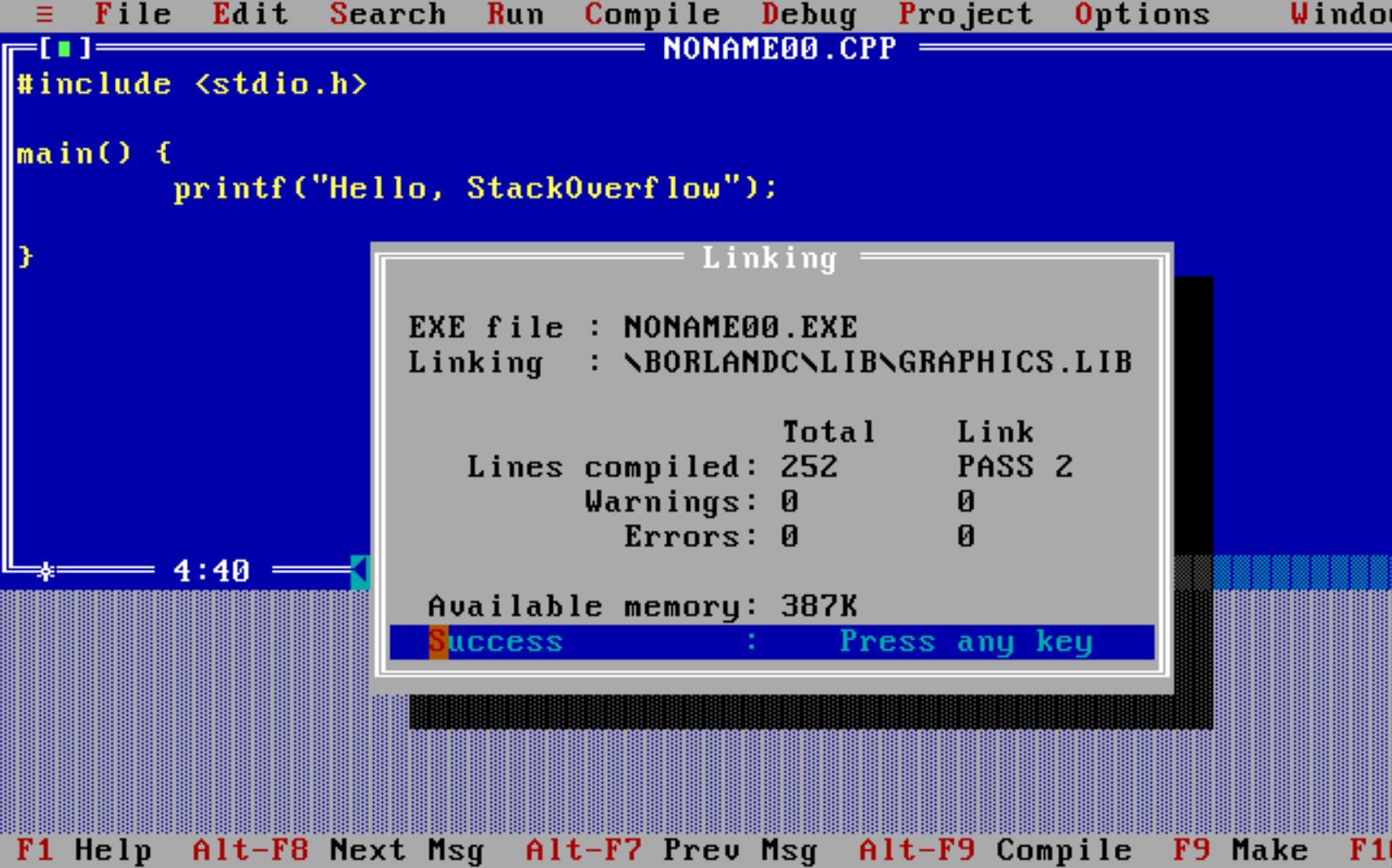

Ah, C. The language that is just everywhere. Even in the year 2025, you will still see C all over the place, from embedded development to software written in Rust that calls into C code from unsafe blocks. I had my first taste of C back in high school. This was in 2004, and we used the wonderful Borland C (no, not C# or C++... C). C was created in 1972-73 by Dennis Ritchie at Bell Labs. It was initially made to build utilities running on Unix (apparently you can still find the source code of an incredibly early C compiler out on the interwebs). C as a language only gained widespread popularity in the 1980s.

If you wanna write C, you need to know about "K&R C". This is actually a book, "The C Programming Language" by Brian Kernighan and Dennis Ritchie, that has become THE BOOK of C. It's considered the lowest common denominator of the C language: no matter what version of C you decide to use, the stuff from this book should work. This is one of the books I got back in high school, and it's what made me really like C.

Okay, so how did developers build software back then (or even now) with C? How did they even learn what needed to be done?

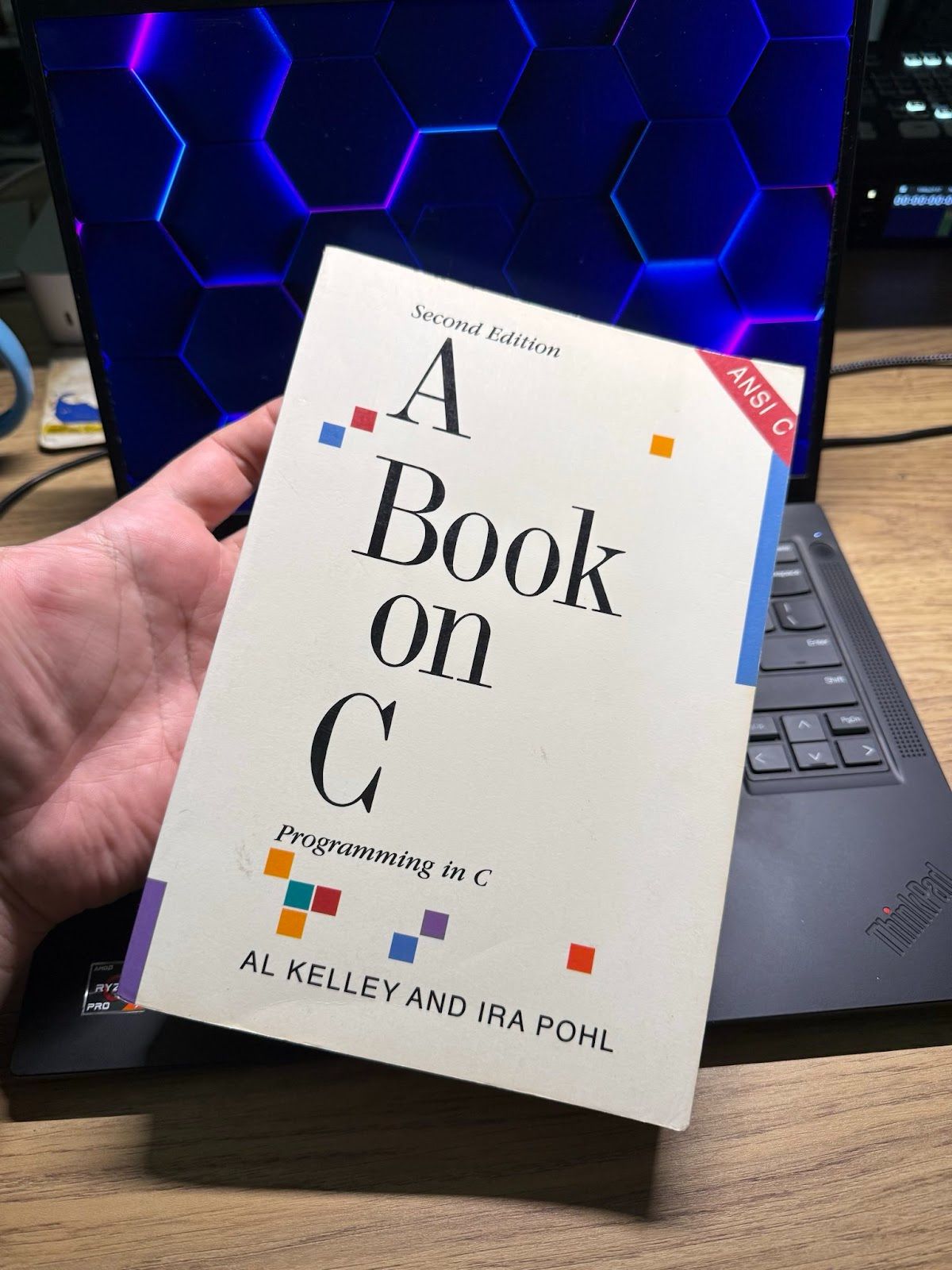

Let's start with learning... books. Yeah, books! Since the language (and its frameworks) were rather simple (there were no 7^32 JavaScript frameworks), software engineers back then could just get it all from a handful of books.

These were not just reference documentation, but books that would show what is possible with C. Then you just take an eager software engineer and let their mind wander with the possibilities this amazing new(ish) language has to offer! I've experienced something similar when trying to learn assembly—there is not a lot to the syntax, but with experimentation and creativity, you can get really really far.

What about writing the software? Did they use Git back then? No, Git only came out in 2005, so back then software version control was quite the manual effort, from developers having their own way of managing source code locally to wall charts where developers could "claim" ownership of certain source code files. For those who were able to work on a shared (multi-user) system, or had an early version of some networked storage, source code sharing was as easy as handing out floppy disks.

Back then, iteration was slow. Software was released (mostly) as physical media, and over-the-air updates were not that common (certain software had options to be updated via modem, but that was not standard practice for most users). So user feedback did not come in nearly as quickly as it does today.

If you have never had a chance to work with C, drop that MicroPython implementation on your ESP32, and give C a chance. It will take you a bit of time to get used to it, but since many of today's languages source their inspiration from C, you should be able to fit in just perfectly. Just be careful around all that garbage.

Stop and smell the 90s

Okay, I've yapped long enough on this post. Let's pause here and reflect on how far we have come in 30+ years. From green paper and punch cards, to moving bits and compiling C. Hmm... Not that far actually. If you look at C, its "hello world" is not that far off from FORTRAN.

    #include <stdio.h>

    int main() {
        printf("Hello, World!\n");
        return 0;
    }

The thing is, the change in the way we do software development will massively speed up in the next couple of decades. From Object Oriented Programming to the Web. From JVMs to LLMs. All that and more, in PART 2 of this blog.

I will be posting PART 2 in the next few weeks over on AWS Builder Center. Please make sure to give me a follow there!

See you soon!