Welcome to November's installment of Stack Overflow research updates! This month marks one year since my colleagues in UX research and I started sharing bite-size updates about the quantitative and qualitative research we use to understand our communities and make decisions.

In recent months, we have invested time and energy in improving the question-asking experience on Stack Overflow, one of the most fundamental interactions on our site. In August, I outlined what we learned from the question wizard, our first major change to the question-asking workflow in a decade. In September, Lisa shared the results of her qualitative research that has informed our next steps. Today, I want to present the results of A/B testing for the changes currently live on the site.

Wizards and a unified experience

The question wizard represented a move in the right direction in terms of question quality and interactions via comments, but some of the decisions made for the wizard turned out to be brittle and inappropriate for our scale. For both technical and design reasons, we have chosen to pursue a single question design with modals specific to different kinds of users, not a two-mode workflow based on reputation.

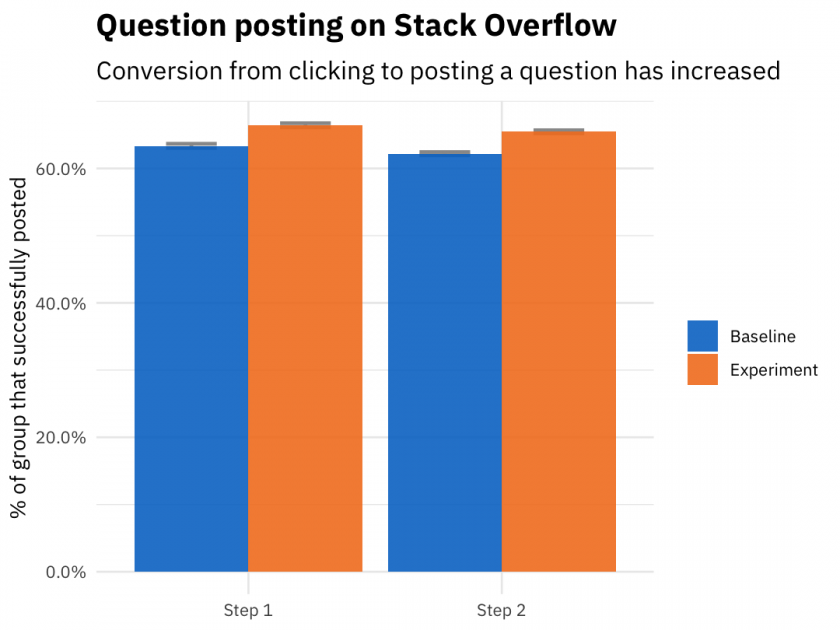

To measure the impact of changes to the question workflow, we use A/B testing. People in the baseline arm of the test kept the old question workflow, unchanged. People in the experiment arm experienced a new workflow, which we shipped iteratively because the changes we wanted to test against the old workflow were so extensive and had complex dependencies. For simplicity, we can summarize the changes in two "steps":

- Step 1: The first group of changes launched in September and included pretty dramatic UI changes, along with a welcome modal and what-to-expect modal for new users.

- Step 2: The next group of changes launched in October and focused mostly on a review interface, consolidating and organizing validation warnings.

People in the baseline arm saw none of these changes; they had only the old question workflow.

Posting your question

One of the most important metrics for us when we work with the question workflow is the conversion from clicking the "Ask Question" button to finally posting a question. The new question workflow, compared to the old, allows users to be more successful in this task, with a 3% increase in this conversion sustained across both Step 1 and Step 2. Adding the review interface did not affect the ease of use of the new question form, as measured by this conversion from initial click to final post.

The gray error bars show the uncertainty in our measurement of this proportion; they may be difficult to see in this graph because they are so small.

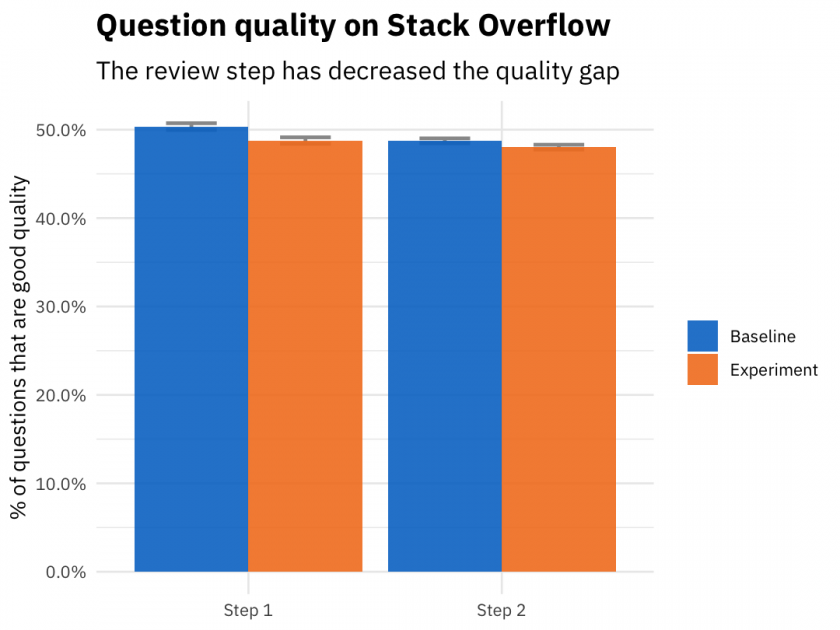
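As a rough illustration of where error bars like these can come from, here is a minimal sketch that computes a conversion proportion and a 95% interval half-width using the normal approximation to the binomial. The function name `conversion_with_error` and the counts are hypothetical, chosen only for the example; they are not Stack Overflow data, and this is not necessarily the method used for the graph.

```python
import math


def conversion_with_error(posted: int, clicked: int, z: float = 1.96):
    """Return (proportion, half_width) for a click-to-post conversion.

    Uses the normal approximation to the binomial; z=1.96 gives a
    roughly 95% interval. Hypothetical helper for illustration only.
    """
    if clicked <= 0:
        raise ValueError("clicked must be positive")
    p = posted / clicked
    standard_error = math.sqrt(p * (1 - p) / clicked)
    return p, z * standard_error


# Hypothetical counts per arm: (questions posted, "Ask Question" clicks).
arms = {"baseline": (4_000, 10_000), "experiment": (4_300, 10_000)}

for name, (posted, clicked) in arms.items():
    p, half_width = conversion_with_error(posted, clicked)
    print(f"{name}: {p:.3f} ± {half_width:.3f}")
```

With many thousands of clicks per arm, the half-width shrinks to a fraction of a percentage point, which is why such error bars can be hard to see on a plot.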

Another important metric for the question workflow is question quality, which we define and explain here. More questions are being asked with the new workflow, but what are these questions like?

During Step 1 (the major UI changes plus modals for new question askers), we saw a 1.5% decrease in good-quality questions. Not great news! During that part of the revamp, the new workflow increased the number of questions (and the overall number of good questions), but the proportion of questions that were good was down slightly.
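It can seem odd that the number of good questions goes up while the proportion of good questions goes down, so here is a small worked example. All counts are hypothetical and were picked only to make the arithmetic easy to follow; they are not the numbers from our test.

```python
# Hypothetical counts for illustration only, not Stack Overflow data.
baseline = {"questions": 10_000, "good": 4_000}
experiment = {"questions": 10_800, "good": 4_212}


def good_share(arm: dict) -> float:
    """Proportion of an arm's questions that were rated good quality."""
    return arm["good"] / arm["questions"]


extra_good = experiment["good"] - baseline["good"]
share_change = good_share(experiment) - good_share(baseline)

print(f"additional good questions: {extra_good}")
print(f"baseline share:   {good_share(baseline):.1%}")
print(f"experiment share: {good_share(experiment):.1%}")
print(f"change in share:  {share_change:+.1%}")
```

In this sketch the experiment arm produces more good questions in absolute terms, but the total number of questions grows faster, so the share of good questions falls.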

Fortunately, one of the main reasons we are redesigning the question workflow is that our new approach is more flexible and easier to iterate on. In fact, that's exactly what we did next. Step 2 of our rollout focused on consolidating and organizing validation warnings, and during this step, the quality gap between the baseline and experiment groups decreased to virtually zero. We fixed the regression in question quality by iterating in this more flexible framework. We see similar results during the test if we measure bad quality instead of good quality.
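One common way to check whether a gap between two arms is "virtually zero" is to look at a confidence interval for the difference in proportions and see whether it includes zero. The sketch below does this with a normal approximation. The function name `diff_in_proportions` and all counts are hypothetical, and this is an assumption about how such a comparison could be done, not a description of our exact analysis.

```python
import math


def diff_in_proportions(good_a: int, n_a: int, good_b: int, n_b: int,
                        z: float = 1.96):
    """Return (difference, lower, upper) for p_b - p_a.

    Uses the unpooled normal approximation; z=1.96 gives a roughly
    95% interval. Hypothetical helper for illustration only.
    """
    p_a = good_a / n_a
    p_b = good_b / n_b
    diff = p_b - p_a
    standard_error = math.sqrt(
        p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b
    )
    return diff, diff - z * standard_error, diff + z * standard_error


# Hypothetical counts: (good questions, all questions) per arm.
diff, lower, upper = diff_in_proportions(4_000, 10_000, 4_310, 10_800)

print(f"difference in good-quality share: {diff:+.4f}")
print(f"approximate 95% interval: [{lower:+.4f}, {upper:+.4f}]")
print("interval includes zero:", lower <= 0 <= upper)
```

When the interval comfortably straddles zero, as it does for these made-up counts, the data are consistent with no difference in quality between the two arms.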

Next steps

As of today, the new question workflow performs better in terms of task success (people who intend to ask a question successfully posting their question) and the same in terms of question quality. From a technical perspective, the new workflow is easier to maintain and build on moving forward. Our next steps will include more iteration to continue improving question quality, along with addressing other concerns of all kinds of users, from the most to the least experienced. We have graduated this new question workflow, and in the future we'll be testing any further changes against this new baseline. The next time you ask a question on Stack Overflow, look for the results of these carefully planned and tested changes!