When Flipp, my employer, first started as a scrappy, small team, we had only a handful of systems. Every dev had the context they needed to work with those systems and the skills to do so (we were honestly lucky to have the right skillsets in the team). The same couple of people handled the software development, deployment, and troubleshooting.

As we grew, the number of things we handled grew with us. We could no longer have one or two really smart people handle everything. We started splitting up into a "development" team and an "infrastructure" team (aka infra) to handle what many people call operations.

By "operations," I mean tasks involving the actual production infrastructure. Typically this includes the following:

- Provisioning and managing servers, storage, operating systems, etc.

- Deploying software

- Monitoring, alerting, and dealing with production issues

This worked, kind of, for a time. But we quickly realized that our infrastructure team, though they knew lots about Linux and firefighting, knew less and less about the actual systems we had and what they did as those systems expanded and changed. This impacted all three operations aspects:

- For provisioning, infra became more of a gatekeeper and bottleneck. To ensure they understood the use cases, teams had to provide multiple-page questionnaires and have sync-up meetings for new servers. The process could take weeks.

- Deployments became rubber-stamps on code that the team had already decided to deploy. The infra team had to be "the person to push the button" but had no insight into what they were deploying.

- Production issues multiplied because infra did not understand the systems. They largely ended up just calling the team whenever anything went wrong. Because skillsets differed, the infra and application teams often had to fix problems together, leading to finger-pointing during post-mortems.

Something had to change. And that's when we made our big decision.

One team to rule them all

At this point we could have gone down two paths. We could have doubled down on communication and process, ensured that the dev and infra teams communicate more effectively, spent time writing detailed playbooks, heavily involved infra in new projects, and expanded the infra team so they were less of a bottleneck.

Instead, we went the opposite route. We decided that we need to empower our development teams to do their own operations.

Why?

- Keep the expertise for a given system within the team. We can have "specialists" on a team who are "better" at infrastructure-y things, but only with the goal of spreading that knowledge out to the team at large.

- Ensure that devs have production experience when building systems. Without it, they simply don't know enough about how to build the system in the first place.

- Lower roadblocks to making changes or adding features to a system. If you don't have an operations "gateway," you're empowered to make your own changes on your own timelines.

- Lower roadblocks to creating new systems. This ensures we can move fast when building new functionality.

- Eliminate finger-pointing. Because the system is owned by a single team, there is only one point of accountability and there is no opportunity to accuse other teams of causing problems.

- Motivate the team to fix problems. Give the motivation to actually fix problems to the people who know how to fix them. If the team is getting woken up at night by alerts, it's on them to put in improvements to either the system or the alerts, to reduce the amount of times wakeups happen.

How did we do it?

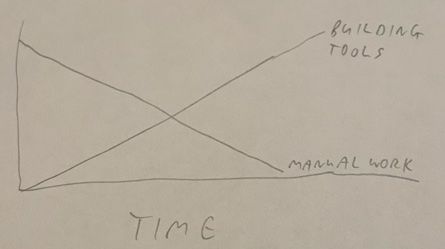

The major key to this was changing the role and goals of the infrastructure team. We rebranded it as Engineering Capabilities (Eng Cap), and their goal was to actually build tools to enable teams to do the sorts of things that the infrastructure team used to be responsible for. This way, as time goes on, the Eng Cap team becomes less and less responsible for manual work and transitions towards yet another development team with their own systems.

Key aspects of our plan included:

- Build a systems platform: This dovetailed nicely with our goal to move towards a microservices architecture. We invested years of work into building a scalable platform using containers and load balancers so that creating a new system could be done with a click of a button. This took all the "smarts" out of what infrastructure did and put it in a system so teams could be confident that the template they chose would work for their use case.

- Use the cloud: Cloud services helped immensely and took many of the tasks we would do on our own (such as managing instances and databases) and handled them externally. We could focus on what our company was good at—our content and features—and leave the infrastructure and systems know-how to the companies that are built around that.

- Spread the knowledge: Rather than keeping all the systems knowledge in one team, we slowly spread it out to the teams that need it. In some cases we would "plant" someone strong in systems skills into a team, then ensure they shared their knowledge with everyone else, both through training and playbooks.

- Embrace continuous deployment: Having a continuous integration tool do the deployments helped reduce fear of deploys and gave more autonomy to the teams. We embraced the idea that the merge button replaced the deploy button. Whoever merges the code to the main branch implicitly approves that code to go live immediately.

- Widespread monitoring and alerting: We embraced a cloud provider for both monitoring (metrics, downtime, latency, etc.) and alerting (on-call schedules, alerts, communication) and gave all teams access to it. This put the accountability for system uptime on the team themselves—and allowed them to manage their own schedules and decide what is and isn't considered an "alert."

- Fix alerts: Teams were encouraged to set up a process around reviewing and improving alerts, making sure that the alerts themselves were actionable (not noisy and hence ignored) and that they put time towards reducing those alerts as time went on. We ensured that our product team was on board with having this as a team goal, especially for teams that managed legacy systems that had poor alert hygiene.

Note that Eng Cap was still available as "subject matter experts'' for infrastructure. This means that they could always provide their expertise when planning new systems, and they made themselves available as "level two" on-call in case more expert help was needed in an emergency. But we switched the responsibility—now teams have to call Eng Cap rather than the other way around, and it doesn't happen often.

Roadblocks and protests

Like any large change, even a long-term one, there's no silver bullet. When you're dealing with dozens of devs and engineering managers used to the "old way," we had to understand the resistance to our plans, and either make improvements or explain and educate where needed.

Security and Controls:

- Q: If there is no separate team pressing the "deploy button", doesn't that violate segregation of duties and present issues with audits?

- A: Not really. Especially since those pressing the "deploy button" don't have context into what they're allowing, all it does is add needless slowdown. A continuous deployment process has full auditability (just check the git log) and should satisfy any reasonable audit.

Developer Happiness:

- Q: Devs don't want to wake up in the middle of the night. Won't moving this responsibility from a single team to all teams mean devs will be more miserable and increase turnover?

- A: By moving the pain into the dev teams, we are giving them the motivation to actually fix the problems. This may cause some short-term complaints, but because they are given more autonomy, in the long-term they have a higher satisfaction with their work. However, it is crucial to make sure our product roadmap gives time and effort into fixing them. Without that, this step would likely cause a lot of resentment.

Skill Gap:

- Q: Learning how to handle servers and databases are specialized skills. If we make the application teams responsible, won't we lose those skills?

- A: Teaching teams how to handle specific technologies is actually much easier than trying to teach an infrastructure team how every single system works. Spreading the knowledge out slowly, with good documentation, is much more scalable.

Where are we now?

This was a five-year process, and we're still learning and improving, especially for our legacy systems, a few of which are still on our old platform. But for the most part, the following statements are now true:

- Teams are now empowered and knowledgeable about their systems, how they behave in production, how to know when there is a problem, and how to fix those problems.

- They are motivated to build their own playbooks to spread the knowledge, so that new team members can share the load of being on call.

- They are motivated to make their alerts "smart" and actionable, so that they know when systems have problems and can remove noisy alerts.

- They are motivated and empowered to fix things that cause alerts by building it into their product roadmap.

- Often, the number of alerts, time to fix, validity, etc. are part of sprint metrics and reports.

- Alerts and emergencies are generally trending downwards and are not part of everyday life, even for legacy systems. As a whole, when an alert happens, it's nearly always something new that the team hasn't seen before.

Conclusion

Empowering your teams to do their own operations can pay big dividends down the road. Your company needs to be in the right place and be willing to make the right investments to enable it. But as a decision, it's one that I'd urge any company to continually revisit until they're ready to make the plunge.

---

You can visit my GitHub profile page for more articles!