If you’ve worked with unit testing, you’ve probably used dependency injection to be able to decouple objects and control their behavior while testing them. You’ve probably injected mocks or stubs into the system under test in order to define repeatable, deterministic unit tests.

Such a test might look like this:

[Fact]

public async Task AcceptWhenInnerManagerAccepts()

{

var r = new Reservation(

DateTime.Now.AddDays(10).Date.AddHours(18),

"x@example.com",

"",

1);

var mgrTD = new Mock<IReservationsManager>();

mgrTD.Setup(mgr => mgr.TrySave(r)).ReturnsAsync(true);

var sut = new RestaurantManager(

TimeSpan.FromHours(18),

TimeSpan.FromHours(21),

mgrTD.Object);

var actual = await sut.Check(r);

Assert.True(actual);

}

(This C# test uses xUnit.net 2.4.1 with Moq 4.14.1.)

Such tests are brittle. They break easily and therefore increase your maintenance burden.

Why internal dependencies are bad

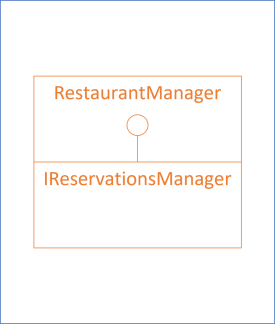

As the above unit test implies, the RestaurantManager relies on an injected IReservationsManager dependency. This interface is an internal implementation detail. Think of the entire application as a blue box with two objects as internal components:

An application contains many internal building blocks. The above illustration emphasizes two such components, and how they interact with each other.

What happens if you'd like to refactor the application code? Refactoring often involves changing how internal building blocks interact with each other. For example, you might want to change the IReservationsManager interface.

When you make a change like that, you'll break some of the code that relies on the interface. That's to be expected. Refactoring, after all, involves changing code.

When your tests also rely on internal implementation details, refactoring also breaks the tests. Now, in addition to improving the internal code, you also have to fix all the tests that broke.

Using a dynamic mock library like Moq tends to amplify the problem. You now have to visit all the tests that configure mocks and adjust them to model the new internal interaction.

This kind of friction is likely to deter you from refactoring in the first place. If you know that a warranted refactoring will give you much extra work fixing tests, you may decide that it isn't worth the trouble. Instead, you leave the production code in a suboptimal state.

Is there a better way?

Functional core

In order to find a better alternative, you must first understand the problem. Why use test doubles (mocks and stubs) in the first place?

Test doubles serve a major purpose: They enable us to write deterministic unit tests.

Unit tests should be deterministic. Running a test multiple times should produce the same outcome each time (ceteris paribus). A test that succeeds on a Wednesday shouldn't fail on a Saturday.

By using a test double each test can control how a dependency behaves. In Working Effectively with Legacy Code, Michael Feathers likens a test to a vise. It's a tool to fix a particular behavior in place.

Test doubles, however, aren't the only way to make tests deterministic.

A better alternative is to make the production code itself deterministic. Imagine, for example, that you need to write code that calculates the volume of a frustum. As long as the frustum doesn't change, the volume remains the same number. Such a calculation is entirely deterministic.

Write your production code using mostly deterministic operations. For example, instead of the above RestaurantManager, you can write an immutable class with a method like this:

public bool WillAccept(

DateTime now,

IEnumerable<Reservation> existingReservations,

Reservation candidate)

{

if (existingReservations is null)

throw new ArgumentNullException(nameof(existingReservations));

if (candidate is null)

throw new ArgumentNullException(nameof(candidate));

if (candidate.At < now)

return false;

if (IsOutsideOfOpeningHours(candidate))

return false;

var seating = new Seating(SeatingDuration, candidate.At);

var relevantReservations =

existingReservations.Where(seating.Overlaps);

var availableTables = Allocate(relevantReservations);

return availableTables.Any(t => t.Fits(candidate.Quantity));

}

This example, like all code in this article, is from my book Code That Fits in Your Head. Despite implementing quite complex business logic, it's a pure function. All the helper methods involved (IsOutsideOfOpeningHours, Overlaps, Allocate, etc.) are also deterministic.

The upshot is that deterministic operations are easy to test. For instance, here's a parametrized test of the happy path:

[Theory, ClassData(typeof(AcceptTestCases))]

public void Accept(MaitreD sut, DateTime now, IEnumerable<Reservation> reservations)

{

var r = Some.Reservation.WithQuantity(11);

var actual = sut.WillAccept(now, reservations, r);

Assert.True(actual);

}

This code snippet doesn't show the test case data source (AcceptTestCases), but it's a small helper class that produces seven test cases that supply values for sut, now, and reservations.

This test method is typical of unit tests of pure functions:

- Prepare input value(s)

- Call the function

- Compare the expected outcome with the actual value

If you recognize that structure as the Arrange Act Assert pattern, you're not wrong, but that's not the main point. What's worth noticing is that despite non-trivial business logic, no test doubles (i.e. mocks or stubs) are required. This is one of many advantages of pure functions. Since they are already deterministic, you don't have to introduce artificial seams into the code to enable testing.

Writing most of a code base as deterministic functions is possible, but requires practice. This style of programming is called functional programming (FP), and while it may require effort for object-oriented programmers to shift perspective, it's quite the game changer—both because of the benefits to testing, and for other reasons.

Even the most idiomatic FP code base, however, must deal with the messy, non-deterministic real world. Where do input values like now and existingReservations come from?

Imperative shell

A typical functional architecture tends to resemble the Ports and Adapters architecture. You implement all business and application logic as pure functions and push impure actions to the edge.

At the edge, and only at the edge, you allow impure actions to take place. In the example code that runs through Code That Fits in Your Head, this happens in controllers. For example, this TryCreate helper method is defined in a ReservationsController class:

private async Task<ActionResult> TryCreate(

Restaurant restaurant,

Reservation reservation)

{

using var scope =

new TransactionScope(TransactionScopeAsyncFlowOption.Enabled);

var reservations = await Repository

.ReadReservations(restaurant.Id, reservation.At)

.ConfigureAwait(false);

var now = Clock.GetCurrentDateTime();

if (!restaurant.MaitreD.WillAccept(now, reservations, reservation))

return NoTables500InternalServerError();

await Repository.Create(restaurant.Id, reservation)

.ConfigureAwait(false);

scope.Complete();

return Reservation201Created(restaurant.Id, reservation);

}

The TryCreate method makes use of two impure, injected dependencies: Repository and Clock.

The Repository dependency represents the database that stores reservations, while Clock represents some kind of clock. These dependencies aren't arbitrary. They're there to support unit testing of the application's imperative shell, and they have to be injected dependencies exactly because they're sources of non-determinism.

It's easiest to understand why Clock is a source of non-determinism. Every time you ask what time it is, the answer changes. That's non-deterministic, because the textbook definition of determinism is that the same input should always produce the same output.

The same definition applies to databases. You can repeat the same database query, and over time receive different outputs because the state of the database changes. By the definition of determinism, that makes a database non-deterministic: The same input may produce varying outputs.

You can still unit test the imperative shell, but you don't have to use brittle dynamic mock objects. Instead, use Fakes.

Fakes

In the pattern language of xUnit Test Patterns, a fake is a kind of test double that could almost serve as a “real” implementation of an interface. An in-memory “database” is a useful example:

public sealed class FakeDatabase :

ConcurrentDictionary<int, Collection<Reservation>>,

IReservationsRepository

While implementing IReservationsRepository, this test-specific FakeDatabase class inherits ConcurrentDictionary<int, Collection<Reservation>>, which means it can leverage the dictionary base class to add and remove reservations. Here's the Create implementation:

public Task Create(int restaurantId, Reservation reservation)

{

AddOrUpdate(

restaurantId,

new Collection<Reservation> { reservation },

(_, rs) => { rs.Add(reservation); return rs; });

return Task.CompletedTask;

}

And here's the ReadReservations implementation:

public Task<IReadOnlyCollection<Reservation>> ReadReservations(

int restaurantId,

DateTime min,

DateTime max)

{

return Task.FromResult<IReadOnlyCollection<Reservation>>(

GetOrAdd(restaurantId, new Collection<Reservation>())

.Where(r => min <= r.At && r.At <= max).ToList());

}

The ReadReservations will return the reservations already added to the repository with the Create method. Of course, it only works as long as the FakeDatabase object remains in memory, but that's sufficient for a unit test:

[Theory]

[InlineData(1049, 19, 00, "juliad@example.net", "Julia Domna", 5)]

[InlineData(1130, 18, 15, "x@example.com", "Xenia Ng", 9)]

[InlineData( 956, 16, 55, "kite@example.edu", null, 2)]

[InlineData( 433, 17, 30, "shli@example.org", "Shanghai Li", 5)]

public async Task PostValidReservationWhenDatabaseIsEmpty(

int days,

int hours,

int minutes,

string email,

string name,

int quantity)

{

var at = DateTime.Now.Date + new TimeSpan(days, hours, minutes, 0);

var db = new FakeDatabase();

var sut = new ReservationsController(

new SystemClock(),

new InMemoryRestaurantDatabase(Grandfather.Restaurant),

db);

var dto = new ReservationDto

{

Id = "B50DF5B1-F484-4D99-88F9-1915087AF568",

At = at.ToString("O"),

Email = email,

Name = name,

Quantity = quantity

};

await sut.Post(dto);

var expected = new Reservation(

Guid.Parse(dto.Id),

at,

new Email(email),

new Name(name ?? ""),

quantity);

Assert.Contains(expected, db.Grandfather);

}

This test injects a FakeDatabase variable called db and ultimately asserts that db has the expected state. Since db stays in scope for the duration of the test, its behavior is deterministic and consistent.

Using a fake is more robust in the face of change. If you wish to refactor code that involves changes to an interface like IReservationsRepository, the only change you'll need to make to the test code is to edit the fake implementation to make sure that it still preserves the invariants of the type. That's one test file you'll have to maintain, rather than the shotgun surgery necessary when using dynamic mock libraries.

Architectural dependencies

To recap: A functional core needs no dependency injection to support unit testing, because functions are always testable (being deterministic by definition). Only the imperative shell needs dependency injection to support unit testing.

Which dependencies are required? Every source of non-deterministic behavior and side effects. This tends to correspond to the actual, architectural dependencies of the application in question, with the possible addition of a clock and a random number generator.

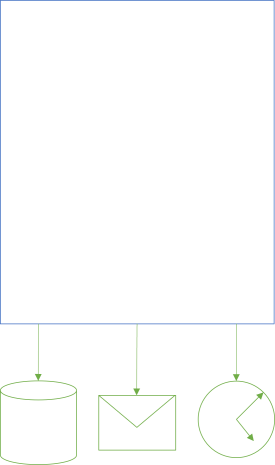

The sample system in Code That Fits in Your Head has three “real” dependencies: Its database, an SMTP gateway, and the system clock:

Apart from the system clock, these dependencies are components you'd also draw when illustrating the overall architecture of the system. The application is an opaque box, its internal organization implementation details, but its “real” dependencies represent other processes that may run somewhere else on the network.

These dependencies are the ones you may consider to explicitly model. These dependencies you can hide behind interfaces, inject with Constructor Injection, and replace using test doubles. Not mocks, stubs, or spies, but fakes.

Conclusion

Which dependencies should be present in your code base?

Those that represent non-deterministic behavior or side effects. Adding rows to a database. Sending an email. Getting the current time and date. Querying a database.

These tend to correspond to the architectural dependencies of the system in question. If the application requires a database in order to work correctly, you'll model the database as a polymorphic dependency. If the system must be able to send email, then a messaging gateway interface is warranted.

In addition to such architectural dependencies, system time and random number generators are the other well-known sources of non-determinism, so model those as explicit dependencies as well.

That's it. Those are the dependencies you need. The rest are implementation details, likely to make your test code more brittle.

The implication is that a typical system will only have a handful of dependencies.