I'm just about out of patience with LinkedIn posts where a freshly-minted tech CEO says "instead of a week-long interview process and several assessments, I just have a conversation with the candidate. I can tell in about five minutes if they're right for the job."

No, they can't. Although shorter interview processes are a good idea (excessively long ones will scare away the most sought-after candidates), the "conversation over coffee" interview approach is no better than choosing candidates at random. And that's pretty bad.

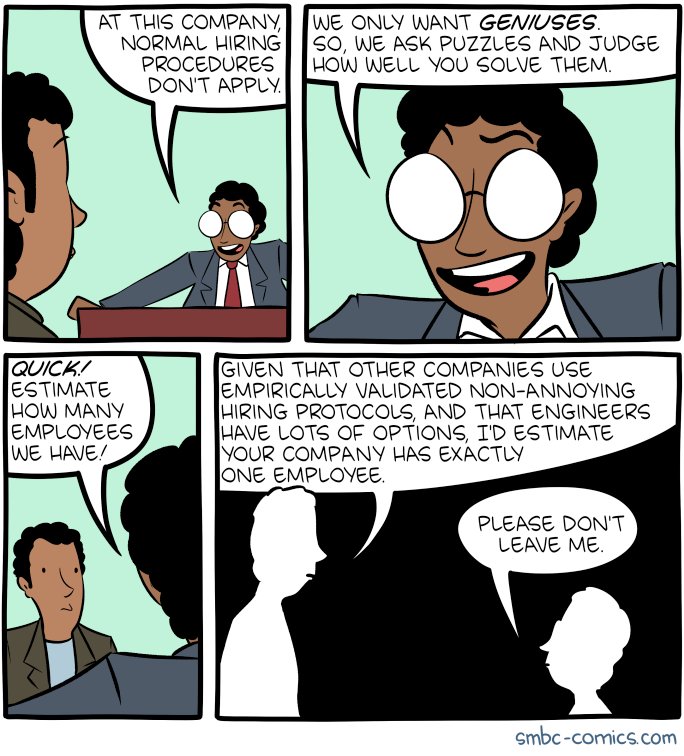

What we're witnessing from LinkedIn CEOs is not extraordinary intuition or social skills. It's the Dunning-Kruger effect: people tend to overestimate their own competence at a task, and the worse they are at it the more they overestimate. Interviewing is one of the most well-studied examples. There's not a single person in the world who can assess someone's job skills in an unscripted five-minute conversation—or, for that matter, a much longer one. Unscripted conversations aren't interviewing; they're speed dating. Yet employers stubbornly believe they're the most effective way to select high-performing candidates.

Study after study after study has proven them wrong. Unstructured interviews increase multiple types of bias, open the door to interviewer idiosyncrasies, and reduce hiring accuracy by over half. They're worse than useless, and not just by a little. And the impact of hiring mistakes is massive: the wrong candidate can cost up to a quarter of a million dollars and cause significant delays in the company's projects.

Programming and evidence of ability

For programmers, bad interviews are especially frustrating. Programming is a task that produces self-evident results. You can't assess the build quality of a bridge or the preparation of a meal just by looking at it, but with code there's nothing under the surface: what you see is all there is. You can demonstrate your ability to write good code in real time without any complex setup or tangible risk. Yet companies go out of their way to either avoid such a demonstration or ask for one that's completely tangential to the job they're hiring for.

Most of us have been there and done that. You're interviewing for your first programming job and they want to know what element on the Periodic Table best describes your personality. You're an experienced front-end developer but the interview is an hour-long "gotcha" quiz on closures and hoisting. You've built several .NET applications from scratch but they want you to invert a binary tree. You've contributed to the Linux kernel but now you have to guess the number of ping-pong balls that would fit in the interview room. The whole thing has been memed to death.

This is especially bewildering in the context of the job market. Programmers are worth their weight in gold. Salaries are skyrocketing throughout the industry. Remote work has become table stakes. Tech startups are offering four-day work weeks to make themselves more appealing. Recruiters are second only to car warranty scammers in their efforts to reach us. But it all goes to waste if the candidate who gets hired isn't able to do the job. So why aren't companies investing more at the tail end of their hiring process?

An optimized interview process

Let's talk about what an ideal coding interview could look like. If someone's job is to write Java, the process might go like this:

- Disclose the salary range and benefits in the very first contact.

- Review their resume ahead of time for professional or open source experience writing Java (any other programming language would be relevant; Groovy and Kotlin would be equivalent experience).

- Watch them write Java for an hour or so in the most realistic environment possible. Grade them on predetermined, job-relevant attributes, e.g. problem-solving, null safety, error handling, readability, naming conventions, and encapsulation.

- Give a scripted, completely standardized assessment to ensure they understand the JVM and basic command-line tools.

- Let them know when to expect a follow-up call.

That's it. No unscripted "culture interview," no free-for-all "group quiz," no "compiler optimization test," no asking the candidate to build a 15-hour project from scratch in their spare time. You assess their job-specific skills and you're done.
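To make "predetermined" concrete, here's a minimal sketch of a scorecard for the coding session, written in Python for brevity. The attribute names come from the list above; the 1-to-5 scale, the `Scorecard` class, and the unweighted total are assumptions made for illustration, not a validated grading scheme.

```python
from dataclasses import dataclass
from typing import Dict

# Attributes from the process above, fixed before any candidate is seen.
RUBRIC = (
    "problem-solving",
    "null safety",
    "error handling",
    "readability",
    "naming conventions",
    "encapsulation",
)

# Assumed scale: every attribute gets an integer from 1 to 5.
MIN_SCORE, MAX_SCORE = 1, 5


@dataclass(frozen=True)
class Scorecard:
    """One interviewer's grades for one candidate (hypothetical structure)."""

    candidate: str
    scores: Dict[str, int]  # attribute name -> score

    def __post_init__(self) -> None:
        # Reject cards that skip an attribute or grade something off-rubric.
        if set(self.scores) != set(RUBRIC):
            raise ValueError("score every rubric attribute, and nothing else")
        for attribute, score in self.scores.items():
            if not MIN_SCORE <= score <= MAX_SCORE:
                raise ValueError(
                    f"{attribute}: score must be {MIN_SCORE}-{MAX_SCORE}"
                )

    def total(self) -> int:
        # Unweighted sum; weighting attributes differently is a design choice.
        return sum(self.scores.values())
```

Because every interviewer has to fill in the same attributes on the same scale, two candidates' scorecards can be compared line by line instead of impression by impression.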

It's not that I think coding ability (or any one skill) is the only important thing about a candidate. Of course you want to hire people who are reliable, smart, honest, and kind. But you have to restrict yourself to what is knowable. And in the context of a job interview, there isn't much. Most candidates act fake in job interviews and a significant percentage of them lie. Even putting honesty aside, 9 out of 10 people report having interview anxiety. If you want to know how someone behaves at work, you simply can't rely on the interview. That's not the real them. Obviously if you see any major red flags—being rude to the receptionist, say—you can throw out their resume. But those are rare.

That's part of the inherent risk of hiring: interviews just aren't as powerful as we'd like them to be. Many employers try valiantly to devise interviewing methods that predict soft skills and personality traits. But anything outside the limits of practical, measurable work is usually an illusion, albeit a compelling one—a 2013 study found that interviewers form highly confident impressions of candidates even when those candidates' responses are randomly selected! People tend to trust their first impressions regardless of how accurate or inaccurate they are, which leads to mistakes in the hiring process. Any time you ask humans to intuit facts about other humans, you decrease the accuracy of your process. The only thing you can measure with any accuracy at all is task competence.

Methods for testing coding ability

So, if it's the best we can do, what's the ideal way to measure programming skills? Several commercial products offer a way to test candidates with pre-written, auto-graded tasks in a code playground. Although they're likely much better than the norm, I'm not here to evangelize any of them. Why would you pay for another company's general-purpose assessment when your entire development team does specific, real-world "assessments" all day long? The last time I was asked to devise a coding test for an interview I looked over my recent work, picked a section of code that went well beyond "glue code" but didn't get into anything proprietary, tweaked it so it would stand on its own, and copied the function signature into LINQPad (a code playground). The interviewee's task was to implement the function according to nearly the same requirements I'd needed to meet. And when they were finished, I knew if they could do the job because they had just done it. It's hard to get a more realistic assessment than that.
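A work-sample task like that boils down to a function signature, a written set of requirements, and a fixed list of checks that every candidate's solution runs against. The sketch below shows that shape in Python. The task itself (`merge_ranges`), its requirements, and the `run_cases` helper are all hypothetical stand-ins invented for illustration; they are not the task described above.

```python
from typing import Callable, List, Tuple

Range = Tuple[int, int]


def merge_ranges(ranges: List[Range]) -> List[Range]:
    """Hypothetical candidate task.

    Requirements handed to the candidate:
    - Input is a list of (start, end) pairs with start <= end, in any order.
    - Ranges that overlap or touch are merged into one.
    - The result is sorted by start.
    """
    raise NotImplementedError("the candidate writes this during the interview")


# The same predetermined cases are used for every candidate.
CASES: List[Tuple[List[Range], List[Range]]] = [
    ([], []),
    ([(1, 3), (2, 5)], [(1, 5)]),
    ([(5, 6), (1, 2)], [(1, 2), (5, 6)]),
    ([(1, 4), (4, 7)], [(1, 7)]),
]


def run_cases(implementation: Callable[[List[Range]], List[Range]]) -> List[bool]:
    """Run an implementation against every case; a crash counts as a failure."""
    results = []
    for given, expected in CASES:
        try:
            results.append(implementation(list(given)) == expected)
        except Exception:
            results.append(False)
    return results
```

Passing the cases is only part of the picture. The checks tell you whether the code works; how it was written is still something a person has to watch and grade.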

On the other side of the table, by far the best interview process I've ever gone through was with a startup that put me on a one-week contract (an "audition" as some companies call it) to work with their team. They assigned me a task; I cloned their repo, wrote code over the course of a couple of evenings, and submitted a PR; the rest of the team gave feedback; I did some more work to bring the PR up to their standards; and they merged it and paid me at my regular rate. Even though I didn't ultimately accept their job offer, everyone walked away happy: they got some extra work done and I got a paycheck. If I had accepted, there would have been no uncertainty about my skills or ability to work with the team. That kind of organizational competence is hard to come by. Years later, they're still on my shortlist if I'm ever on the hunt for a job.

Maybe that doesn't fit your definition of "interview." I say it's something much better than an interview. Can you imagine trading that experience for 15 minutes shooting the breeze with their CEO? It's a ridiculous proposition. Their CEO doesn't even code.

For security or policy reasons, some teams may not be able to offer such a highly practical interview process. In those situations, consistency is a close second to realism. If you can't make the interview look like the job, at least make one interview look like the next—give yourself the ability to compare candidates on the same metrics by following the same script every time.

Helping every candidate be at their best

A consistent, scientific interview process doesn't have to be completely rigid. You may actually hurt yourself by trying to standardize things that aren't relevant to what you're measuring; every candidate has different needs. For example, immunocompromised candidates need to have the option to interview remotely to protect their health. Candidates with physical or neurological conditions may fare better if the interview is split into short segments with frequent breaks rather than a three-hour marathon. Some candidates with interview anxiety may appreciate a moment or two before the interview to establish a friendly rapport with the interviewer; for others, small talk is difficult and they'd prefer to skip that part. You should always be willing to adjust the environment or cadence of the interview to the candidate's needs. Accommodations like these are a win-win because they allow each candidate the best opportunity to demonstrate their skills. If you want to hire the best candidate, you need to see the best of each candidate.

Also, interviews are a two-way street. You're being measured just as surely as the candidate is. Neglecting to answer their questions because "it isn't in the script" would be a mistake.

How do you reconcile your need for objective, comparable data with your candidates' needs for reasonable accommodation and reciprocity? The simplest answer comes from the robustness principle, a famous rule of software design: "be conservative in what you do, be liberal in what you accept from others." To apply it here, you should assess candidates conservatively (narrowly) on the parts of the interview that are standardized and focused on relevant skills—but you should be liberal (flexible) about whatever else they may need. You'll still get the data you want and your candidate pool won't be restricted or misrepresented by unnecessary rules.

Conclusion

Structured, realistic, job-focused interviews are the gold standard according to several decades of research. So when you decide how much of the process should be unplanned or how much of the evaluation criteria should be unquantifiable, the only question you should ask yourself is: how often do I want to select the wrong candidate?

Of course, sometimes the wrong candidate will slip through. That's not totally preventable. The best interviewing methods are only mostly accurate. But that shouldn't scare you into an intuition-based interview process any more than the occasional computer failure scares you into doing everything on paper.

Hiring wisely is perhaps the most powerful advantage a company can have over its competitors, and the best way to do it is to focus on what you can measure.