We’ve had a breakneck first half of our year here at Stack Overflow, with the last two quarters marked by the announcement of major partnerships, the general availability of our OverflowAI features in Stack Overflow for Teams, and many more milestones across our public platform. At a macro level, we’ve moved well past the inflection point of the AI hype cycle. The customers, partners, and technologists I engage with every day are grappling with the very real questions and challenges of how to implement and use AI tools to increase productivity, improve their developer experience, build better products, and drive value. So while change is constant, and AI has certainly kicked off a super cycle in technology innovation and investment, the common thread I see is that trust is the key to building thriving knowledge ecosystems: within our internal teams, for our customers and partners, and in our broader community.

Addressing the growing trust gap

This past July, we released our annual Developer Survey, our flagship report on the state of the software world, with over 65,000 developers from 185 countries weighing in. One of the most striking takeaways for us was that the gap between the use of AI and trust in its output continues to widen. In 2024, 76% of all respondents said they use or plan to use AI tools, up from 70% in 2023. At the same time, AI’s favorability rating decreased from 77% last year to 72% in our latest survey. Additionally, only 43% of developers say they trust the accuracy of AI tools, and 31% remain skeptical. These results highlight that while AI is here to stay, technologists still very much question the quality of the results AI tools produce.

Anecdotally, I hear this constantly in my conversations with our customers. If developers don’t completely trust the output of the AI tools they use, the whole foundation of the house they’re building is shaky. For example, if you’re a backend engineer at a major financial services institution, are you willing to stake your professional reputation on AI-generated code without human review? Most corporate customers are not willing to take that bet. This is why validated sources of data are crucial to securing that foundation, and why we keep returning to the question of how to bridge this trust gap.

Introducing Knowledge-as-a-Service

Last month, Stack Overflow celebrated 16 years since the founding of our public platform, and in that time the Open Web has undergone vast changes. This past summer, I had the opportunity to take the stage for the second year in a row at the WeAreDevelopers World Congress in Berlin, where I reflected on the front-row seat Stack Overflow has had for many of these transformations: the rise of social media in the early aughts and into the 2010s, the explosion of cloud computing, the wave of societal change and adoption of remote work brought about by the pandemic, and now the meteoric shift brought about by AI. If you zoom out, GenAI is another inflection point on this continuum of major technological advances. With more than 58 million questions and answers across our site, Stack Overflow is uniquely positioned to meet this moment, offering a wealth of quality, accurate technical knowledge and the most trustworthy method for generating new knowledge going forward.

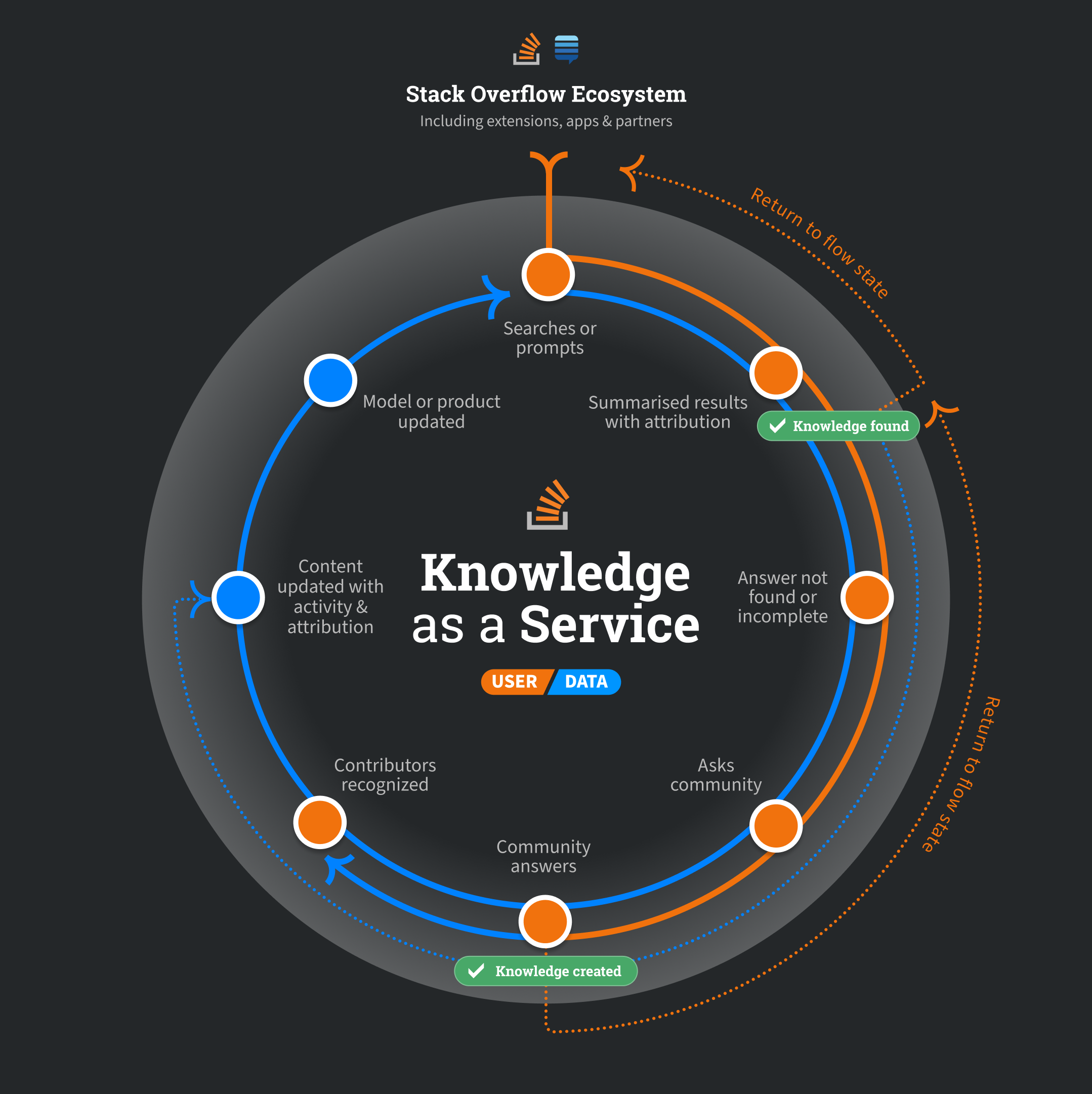

I also had the chance to preview our focus on Knowledge-as-a-Service at WeAreDevelopers: how we at Stack Overflow view the evolution of the community business model in the current information landscape. The ways we find and share information have shifted dramatically with the rise of GenAI over the last two years, and there is now more separation between the sources of knowledge on the internet and how users interact with that knowledge. These tectonic changes have given rise to a new set of problems. How will the growing number of large language models continue to improve if there are no new human-generated sources of content for them to train on? What happens when an LLM doesn’t have the right context or specific knowledge to provide an appropriate answer? And of course, what do we do when we commonly use these AI tools but can’t fully trust the information they present to us?

The corpus of data and content on our public platform is constantly growing, evolving, and improving through the contributions and feedback of our users. Combined with the internal, organization-specific knowledge of our enterprise customers, this expanded body of knowledge becomes Knowledge-as-a-Service, driving both innovation and sustainable community growth.

Expanding our partnerships

Promoting and engaging in a healthy knowledge ecosystem means exchanging ideas and collaborating with other players in the sandbox. To that end, we announced two milestone OverflowAPI partnerships with Google Cloud and OpenAI this past spring. Our partnership with Google Cloud brings generative AI to millions of developers through Stack Overflow’s public platform, now powered by Google Cloud, while Gemini for Google Cloud provides code and answers to developers via OverflowAPI. Similarly, our partnership with OpenAI delivers high-quality technical content to strengthen the performance of the world’s most popular large language models. Both strategic partners share our dedication to advancing the era of socially responsible AI.

As we laid out earlier this year, our framework for socially responsible AI means that we and the product partners who use our OverflowAPI product commit to providing attribution to the subject matter experts and community members who contributed content, and to ensuring that the highest-quality content is driven and vetted by humans. Technical communities and AI can be better together, with feedback from community users fueling innovation and elevating the answers from AI in a virtuous cycle. As part of our dedication to this important part of our business, we recently added Jeff Bailey to our senior leadership team as Chief Revenue Officer (CRO). A veteran technology leader with more than 30 years of experience across the SaaS and API landscapes, he will lead the revenue organization with a strong focus on expanding the OverflowAPI business and the Knowledge-as-a-Service enterprise product line.

Whether it’s at the API level or in providing resources for their careers, we always strive to be of service to developers. To that end, partnering with Indeed on our latest iteration of Stack Overflow Jobs felt like a natural joining of forces. Stack Overflow Jobs now brings thousands of highly relevant technical jobs to developers at every stage of their career, from seasoned professionals to those just starting out in the industry.

Stay tuned for updates on more partnerships, coming soon!

Meeting developers where they are

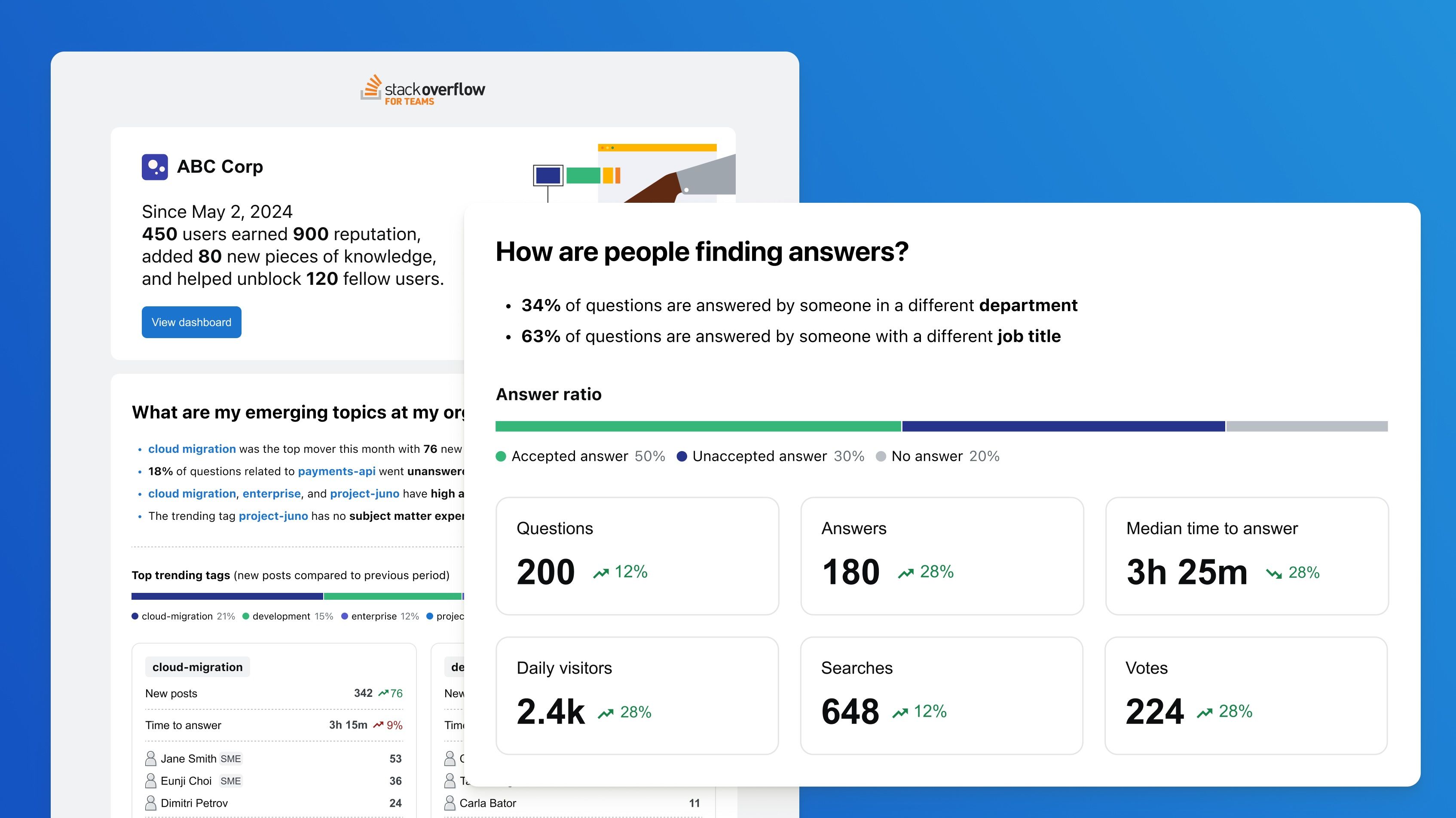

Stack Overflow for Teams, our market-leading knowledge-sharing platform used by 20,000 organizations around the globe, including Bloomberg, Microsoft, and National Instruments, continues to be the go-to resource for developers to find the information they need, consult subject matter experts on their teams, and collaborate asynchronously. In the spirit of always being where developers are, we made OverflowAI generally available in May, a major step in integrating our GenAI offerings to elevate the developer experience even further. Whether in their Stack Overflow for Teams knowledge community, in their integrated development environment with the Stack Overflow for VS Code extension, or in chat apps such as Slack and Microsoft Teams with our Auto Answer App, OverflowAI brings community and AI together to deliver a world-class experience to developers and make their lives easier.

Our teams here at Stack Overflow are constantly iterating, soliciting feedback from our users, and making changes to ensure we meet our customers’ needs. We were also proud to ship many new features and upgrades to Stack Overflow for Teams through the spring and summer across several Enterprise releases, including a reimagined Weekly Dashboard Report for understanding and analyzing insights from your knowledge community, enhancements that improve subject matter expert visibility and engagement, and the ability to search and filter via our Communities feature.

Evolving our community platform

At Stack Overflow, one of our core values is ‘Keep Community At Our Center’, and we take that ethos to heart. Our Product team here at Stack is constantly discussing ways we can give back to our global community in return for the trust and time they’ve put into contributing to our public platform for 16 years. One recent investment was the general availability launch of Staging Ground after several successful beta launches. Staging Ground acts as a sandbox environment where new users on our public platform can connect with more experienced users, get guidance on the question-asking process, and learn the norms of the platform. We’ve since seen improvements in question quality, and last month we released a new stats module that showcases and incentivizes review activity and impact.

We’re also exploring ways in which AI and ML can help community users draft questions and reduce the workload on our moderators and curators by assisting with content review and flagging out-of-date content. Other community initiatives we’ve been working on include reducing sign-up friction to make joining our community easier, and we recently held a week-long Community Asks Sprint aimed at resolving small feature requests and delivering general quality-of-life improvements for our users.

We also know that given the shifting information landscape and the rise of AI agents, many of our community users are concerned about privacy and accessibility. Stack Exchange has a long history of publishing Q&A data, which has been used for a variety of applications such as building community tools and academic research. With that in mind, we brought the hosting of our quarterly data dump in-house; you can now access the data dump for each network site through your user profile. These quarterly data dumps remain a crucial resource for our community, but we want to put safeguards around who is allowed to download the technical content on our site, and to incentivize existing and potential partners to invest back into our community and join us in our mission of building the era of socially responsible AI.

At Stack Overflow, we’re committed to our community, whether that’s our public platform users, customers, partners, or Stackers, but we also deeply believe in building a future where our larger knowledge communities can thrive in the era of AI. An essential part of that mission is preserving the trust our community has placed in us for 16 years, and that means continuing to invest back into our knowledge ecosystem. One of the reasons I joined Stack Overflow was the opportunity to make an impact on a community of this global scale, and I’m regularly inspired by the many of you who give your time, effort, and knowledge to others. I’m grateful for the trust this community places in us as we carry forward our mission of empowering the next generation to develop technology through collective knowledge.