SPONSORED BY SALESFORCE

Imagine if you could talk to your Salesforce database.

When we first heard about large language models (LLMs), that’s where our heads went. A significant amount of business data resides within any given Salesforce instance, and the user interfaces to that data can be both information-rich and overwhelming. We’re always trying to make it easy for users to pick out the information they need and gain insights into their processes, so a natural language interface seemed like a dream.

At first, it was. With the massive change around generative AI, we were in a unique position to make that dream a reality by providing a deep conversational experience. As we started building this experience on top of our existing Einstein AI platform, we realized we could go further and automate the process of gathering, transforming, and utilizing information within the Salesforce platform. That led to Agentforce: a suite of pre-built AI skills, topics, and actions that allow users to build and customize autonomous AI agents. This is a huge step for our customers. This article will talk about how Agentforce works, the tech we built in creating it, and how it supports various industry verticals.

Chat CRM

Chat functionality for Salesforce was already available on multiple platforms, including hyperscalers and both boutique and open-source frameworks. In those stacks, data leaves the Salesforce trust boundary, which exposes your valuable company information to a third party. We wanted all those interactions (the questions and the responses based on CRM data) to stay within our trust boundary.

Enterprise systems and processes can be complex, and managing them requires detailed information. Salesforce has deep integration with these enterprise processes, but navigating to the right screen might take a user through multiple screens and additional clicks. Customers have always wished for a faster way to find what they need without losing the context they’ve come to expect.

Our initial foray into GenAI was a bot framework: a chat assistant and a Copilot-style helper. Einstein Bots followed well-defined conversational paths, with user-defined intent detection and named entity recognition provided by a traditional intent detection service. They used predefined rules and scripted responses to navigate conversations, and they excelled at following strict processes and adhering to brand messaging guidelines. But they could not handle anything that wasn’t scripted.

With Agentforce, we’re exposing processes and deep workflows that were built prior to the commoditization of GenAI. We are providing tools like Prompt Builder, Model Builder, and Agent Builder, which help you access and use data in Data Cloud as well as models built using the predictive AI tools included in Einstein AI (Einstein on Data Cloud).

When you ask Agentforce a question, it invokes the Atlas Reasoning Engine, which is the brain behind Agentforce and acts as an orchestrator for all actions. It tries to determine the user’s intent using an LLM, then disambiguates the question into topics, which constitute individual jobs to be done. Those topics might have complicated instructions, business policies, and additional guardrails. To complete the job, Agentforce executes one or more invocable actions from our action framework. Actions are individual tasks like writing an email, querying a record, or summarizing a record.
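
The request path described above (intent, then topic, then actions) can be sketched roughly as follows. This is a minimal illustration and not Agentforce code: `Topic`, `classify_topic`, `run_request`, and the action names are all hypothetical, and a simple keyword match stands in for the LLM-based intent detection.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Every name in this sketch is hypothetical; none of it is an Agentforce API.


@dataclass
class Topic:
    name: str
    instructions: str                 # natural-language guidance for the LLM
    keywords: List[str]               # stand-in for LLM intent detection
    actions: List[str] = field(default_factory=list)


# Registry of invocable actions: each takes the request text and returns a result.
ACTIONS: Dict[str, Callable[[str], str]] = {
    "query_record": lambda request: "record: Acme Corp, stage=Negotiation",
    "summarize_record": lambda request: "summary: Acme deal is in negotiation",
    "draft_email": lambda request: "draft: Hi, following up on our conversation",
}

TOPICS = [
    Topic("account_summary", "Summarize the account; never reveal pricing.",
          ["summarize", "summary"], ["query_record", "summarize_record"]),
    Topic("outreach", "Write a short, polite follow-up email.",
          ["email", "follow up"], ["draft_email"]),
]


def classify_topic(request: str) -> Optional[Topic]:
    """Pick the first topic whose keywords appear in the request."""
    text = request.lower()
    for topic in TOPICS:
        if any(keyword in text for keyword in topic.keywords):
            return topic
    return None


def run_request(request: str) -> List[str]:
    """Resolve the request to a topic, then run that topic's actions in order."""
    topic = classify_topic(request)
    if topic is None:
        # No topic matched: ask the user for more detail instead of guessing.
        return ["clarify: could you tell me more about what you need?"]
    return [ACTIONS[name](request) for name in topic.actions]
```

Calling `run_request("Please summarize the Acme account")` runs the two actions attached to the hypothetical `account_summary` topic, while an unrecognized request falls through to a clarifying question.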

These topics provide a lot of the magic: they store natural language instructions that can be given to the LLM as context. With this context, the LLM can create and execute actions in a way that best addresses the request. Users can clarify their requests, which will then modify the context in the topic. Because the LLM here uses chain-of-thought (CoT) reasoning, it can explain why it created each action, which reduces hallucinations and builds user trust in the agent.
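
One way to picture how a topic's instructions and a user's clarifications end up in the LLM's context is the sketch below. The `TopicContext` class and the prompt layout are assumptions made for illustration; the actual prompt format used by the Atlas Reasoning Engine is not described here.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical helper: shows topic instructions and clarifications being
# assembled into a single prompt. The layout is illustrative only.


@dataclass
class TopicContext:
    instructions: str
    clarifications: List[str] = field(default_factory=list)

    def clarify(self, note: str) -> None:
        """Record a user clarification so later prompts include it."""
        self.clarifications.append(note)

    def build_prompt(self, request: str) -> str:
        """Combine instructions, clarifications, and the request into one prompt."""
        lines = ["Instructions: " + self.instructions]
        lines += ["Clarification: " + note for note in self.clarifications]
        lines.append("Request: " + request)
        # Asking for reasoning first mirrors the chain-of-thought idea.
        lines.append("Explain your reasoning for each action before naming it.")
        return "\n".join(lines)
```

Each call to `clarify` changes what the next `build_prompt` produces, which is the sense in which a clarification modifies the topic's context.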

For the most part, users operate within Salesforce. The agents can be triggered by prompts from the user, data operations on CRM, or business processes and rules. But the agents don’t have to only use Salesforce tools. They can talk to our Data Cloud, which offers semantic search or retrieval-augmented generation (RAG), or an external data source through MuleSoft connectors or APIs. They can search the web or your organization’s internal knowledge bases. Through external connectors via MuleSoft, agents can access a vast number of systems and platforms.

We know that our customers use Salesforce for a nearly endless list of use cases. To cater to this vast business need, users can create their own actions using our Apex programming language. You can integrate with our inbuilt workflow engine (Flow) and trigger complicated workflows within Salesforce. You can deploy those workflows within APIs. And you can create custom UI elements to trigger and enable agentic flows.

Trust is our number one value at Salesforce, so agents operate within the Einstein Trust Layer. This detects toxic prompts and responses and defends against prompt injection, among other features. Even with all of these guardrails, no LLM is 100% accurate and hallucination-free, so we include transferring the task to a human agent as another potential way to prevent unwarranted outcomes. Some processes are too business-critical to leave a human out of the loop.
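
The routing decision described above (block, hand off to a human, or let the agent proceed) might look something like this in outline. It is only a sketch under stated assumptions: the regular expressions stand in for real toxicity and injection detection, and the `confidence` score and `min_confidence` threshold are hypothetical inputs that the Einstein Trust Layer may not expose in this form.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns; a production trust layer would rely on trained
# classifiers rather than a short list of regular expressions.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all|any|previous) .*instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]


@dataclass
class Decision:
    route: str    # "agent", "blocked", or "human"
    reason: str


def route_request(prompt: str, confidence: float, business_critical: bool,
                  min_confidence: float = 0.8) -> Decision:
    """Decide whether the agent may act, or whether to block or escalate."""
    if any(pattern.search(prompt) for pattern in INJECTION_PATTERNS):
        return Decision("blocked", "possible prompt injection")
    if business_critical:
        # Some processes always keep a human in the loop.
        return Decision("human", "process requires a human in the loop")
    if confidence < min_confidence:
        # Assumed signal: a low score hands the task to a human agent.
        return Decision("human", "model confidence below threshold")
    return Decision("agent", "passed checks")
```

The ordering matters in this sketch: injection checks run first, so a suspicious prompt is never forwarded to a human or an agent as if it were a normal request.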

It’s a powerful system, and we’d like to give you a glimpse into how we built it.

The brains of a new machine

Moving from a chatbot to an orchestrated agent system required architecting an event-driven and asynchronous planner: the Atlas Reasoning Engine. We needed to build something scalable, maintainable, and extendable.

Our agents needed three components:

- State: Our previous attempts lacked human-level performance because they remembered nothing about past interactions, user data, or relevant context. We would need to store this information with the agent and provide it as context to the LLMs that handled any requests.

- Flow: The steps and actions an agent would need to perform in order to complete the request.

- Side effects: These are lasting changes—filing tickets, updating fields—that would result from completing the actions in the flow.
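
The three components above can be pictured as plain data structures. The following is a rough sketch with hypothetical names (`AgentState`, `SideEffect`, `run_flow`); here the side effects are returned as data rather than applied, which is a design choice of the sketch and not something stated about Agentforce.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical types illustrating state, flow, and side effects.


@dataclass
class AgentState:
    history: List[str] = field(default_factory=list)        # past interactions
    user_data: Dict[str, str] = field(default_factory=dict)  # relevant context


@dataclass
class SideEffect:
    kind: str                  # e.g. "file_ticket" or "update_field"
    payload: Dict[str, str]


# A flow is an ordered list of steps; each step reads state and emits effects.
Step = Callable[[AgentState], List[SideEffect]]


def file_ticket(state: AgentState) -> List[SideEffect]:
    account = state.user_data.get("account", "unknown")
    return [SideEffect("file_ticket", {"account": account})]


def update_field(state: AgentState) -> List[SideEffect]:
    return [SideEffect("update_field", {"field": "status", "value": "escalated"})]


def run_flow(state: AgentState, flow: List[Step], request: str) -> List[SideEffect]:
    """Remember the request, then run each step and collect its side effects."""
    state.history.append(request)
    effects: List[SideEffect] = []
    for step in flow:
        effects.extend(step(state))
    return effects
```

Returning the effects instead of applying them directly keeps the flow easy to inspect before anything lasting happens, for example before a ticket is actually filed.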

For LLMs to be able to spin up such agents, we needed a declarative method to create them. Creating custom code for each use case or defining rabbit holes of conditionals was too labor-intensive for the scale we had in mind.

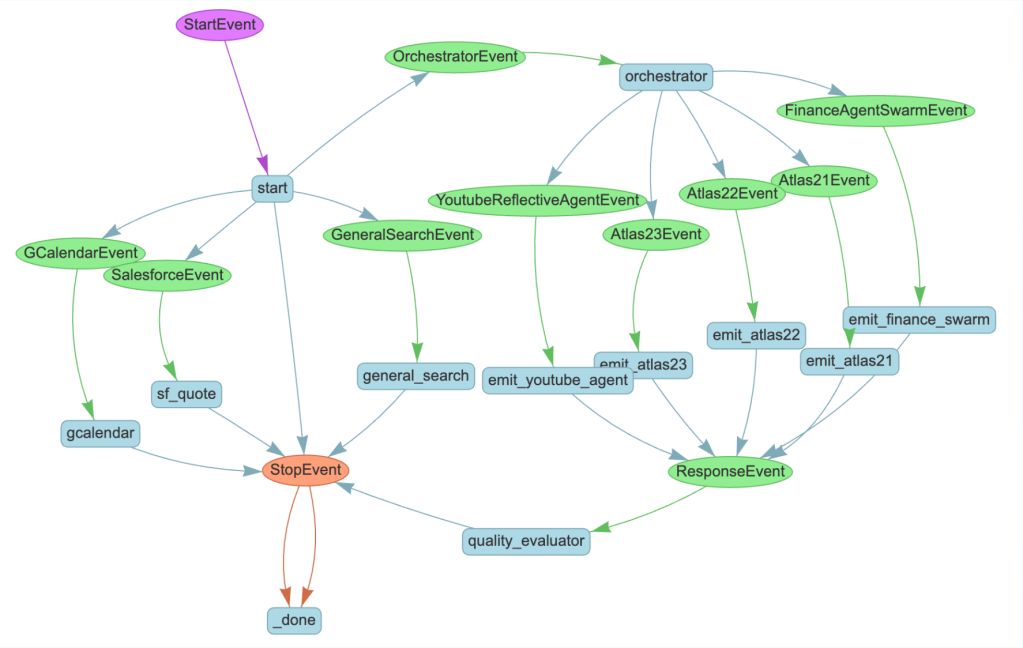

Our solution was to define agents through YAML files. These files can specify what the agent does, what data it can access, the actions it can take, any guardrails to consider, and the domains and tools it operates within, all without writing extensive code. A new agent can be created just by writing a new YAML file that lists its actions and other settings. It’s as easy as adding an app to a smartphone. Actions are triggered through asynchronous events, which makes the whole system loosely coupled. In the multi-agent space, agents also communicate with each other using events. These events could be a startEvent, a stopEvent, or an input/output event.
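
To make the declarative idea concrete, here is a small sketch of turning a parsed definition into an agent object. The actual YAML schema is not shown in this article, so every key in `AGENT_SPEC` is an assumption; the dictionary stands in for what a YAML parser might return, which keeps the example free of third-party dependencies.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

# Hypothetical spec: mirrors what a parsed YAML agent file *might* contain.
AGENT_SPEC: Dict[str, Any] = {
    "name": "order_support",
    "description": "Answers questions about order status",
    "data_access": ["Order", "Case"],
    "actions": ["query_record", "summarize_record"],
    "guardrails": ["never share payment details"],
}

# Assumed minimum for a usable definition.
REQUIRED_KEYS = {"name", "description", "actions"}


@dataclass(frozen=True)
class AgentDefinition:
    name: str
    description: str
    actions: List[str]
    data_access: List[str]
    guardrails: List[str]


def load_agent(spec: Dict[str, Any]) -> AgentDefinition:
    """Validate a declarative spec and build an agent definition from it."""
    missing = REQUIRED_KEYS - spec.keys()
    if missing:
        raise ValueError(f"agent spec missing keys: {sorted(missing)}")
    return AgentDefinition(
        name=spec["name"],
        description=spec["description"],
        actions=list(spec["actions"]),
        data_access=list(spec.get("data_access", [])),
        guardrails=list(spec.get("guardrails", [])),
    )
```

Under this approach, adding an agent means adding another spec rather than another code path; `load_agent(AGENT_SPEC)` is the only code that runs for any of them.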

The underlying framework that manages and creates these agents and processes their workflows uses both “System 1” and “System 2” thinking (thinking fast and slow, in the parlance of Daniel Kahneman) and processes data at inference time.

Our agents have powerful reasoning abilities that let them parse a request into its constituent parts, ask for more information, adapt to changes, and bring in external data when needed. They can also be expensive to run.

Scaling with multiple models

One team at Salesforce handles the interface between LLMs and other engineering teams. To eliminate our need to forecast traffic, we partnered with them to create Inference Components (IC) that allow a single model in its own container to scale dynamically based on traffic. Then, by hosting multiple models on the same endpoint and adjusting capacity as demand changes, we were able to keep costs reasonable.

For the overall architecture, we needed something that scaled well and could handle multiple processes at once. That led us to an event-driven architecture, where the Atlas Reasoning Engine operates publish-subscribe queues for all agents and actions. By decoupling components and strongly typing functions, agents can operate concurrently and independently.
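
The publish-subscribe pattern described above can be sketched with Python's `asyncio`. This is a simplified in-memory bus that dispatches to handlers directly instead of through durable queues, and `EventBus`, `Event`, and the two handlers are hypothetical names; only the event names echo the ones mentioned earlier in this article.

```python
import asyncio
from collections import defaultdict
from dataclasses import dataclass
from typing import Awaitable, Callable, DefaultDict, List

# Hypothetical in-memory bus; not the Atlas Reasoning Engine's implementation.


@dataclass(frozen=True)
class Event:
    topic: str      # e.g. "startEvent", "inputEvent", "outputEvent", "stopEvent"
    payload: str


Handler = Callable[[Event], Awaitable[None]]


class EventBus:
    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    async def publish(self, event: Event) -> None:
        # Subscribers to the same topic run concurrently and independently.
        handlers = self._subscribers.get(event.topic, [])
        await asyncio.gather(*(handler(event) for handler in handlers))


async def demo() -> List[str]:
    bus = EventBus()
    log: List[str] = []

    async def summarizer(event: Event) -> None:
        log.append(f"summarizer got {event.payload}")
        # One agent's output becomes another agent's input via a new event.
        await bus.publish(Event("outputEvent", f"summary of {event.payload}"))

    async def auditor(event: Event) -> None:
        log.append(f"auditor saw {event.payload}")

    bus.subscribe("inputEvent", summarizer)
    bus.subscribe("outputEvent", auditor)
    await bus.publish(Event("inputEvent", "case 42"))
    return log
```

Neither handler knows the other exists; they only agree on event topics, which is what lets components be added or replaced without touching the rest. Running `asyncio.run(demo())` returns the log of what each handler received.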

We’ve seen strong results from this design—our early pilots showed response relevance doubled, while end-to-end accuracy in customer service applications increased by 33% over competitor or in-house DIY solutions.

Vertical agents

Most of our customers are operating in a vertical—an industry with its own ontology, business needs, and requirements. We’ve spent a lot of time trying to serve them by building deep processes that address these verticals. For those that need it, we offer additional data models, objects, and components. Some customers, however, opted to build their own pieces. Agentforce needed to integrate with this vertical data.

With industry vertical solutions, Salesforce provides domain-specific native data models and deep processes embedded in out-of-the-box offerings, which can either coexist with Sales Cloud and Service Cloud or run separately.

For industry-specific use cases, Agentforce provides vertical-aware agents. These agents draw on vertical-specific metadata, data models, and deep APIs or workflows, which are exposed to the orchestrator as actions.

Conclusion

We’re excited to see what our customers do with Agentforce now that it’s generally available. It’s a step up in productivity for users that will enable faster and smoother business processes without endlessly navigating tabs in search of the right piece of information.

This is just the beginning for Agentforce, with more goodness planned across future releases. We’re working on a testing and evaluation framework to simplify the process of creating your own agents. Look for multi-intent, multimodal, and multi-agent support as we continue to multiply your productivity.

If you’re interested in building the next generation of AI agents with us, we're hiring!