I agree with the saying: “If you’re not embarrassed by your old code then you aren’t progressing as a programmer.” I began programming recreationally more than 40 years ago and professionally more than 30 years ago, so I have made a lot of mistakes. As a computer science professor, I encourage students to learn from mistakes, whether their own, mine, or famous examples. I feel it’s time to shine a light on my own mistakes to keep myself humble and in the hope that someone can learn from them.

Third Place: Microsoft C compiler

I had a high school English teacher who argued that Romeo and Juliet was not a tragedy, since the protagonists did not have tragic flaws: the reason they acted foolishly was that they were teenagers. I didn’t like the argument then but see the truth in it now, especially as it pertains to programming.

After completing my sophomore year at MIT, I was both literally a teenager and a programming adolescent when I began a summer internship at Microsoft on the C compiler team. After doing some grunt work, such as adding support for profiling, I got to work on what I consider the most fun part of a compiler: back-end optimizations. Specifically, I got to improve the x86 code generated for switch statements.

I went hog wild, determined to generate the optimal machine code in every case I could think of. If the case values were dense, I used them as indices into a jump table. If they had a common divisor, I would use that to make the table denser (but only if the division could be done by bit shifting). I had another optimization when all the values were powers of two.

If the full set of values didn’t satisfy one of my conditions, I divided the cases up and called my code recursively.
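To make the checks described above concrete, here is a minimal sketch of how such a strategy picker might look. It is not the code from the Microsoft compiler. The names (`Strategy`, `chooseStrategy`, `isDense`) and the 50% density threshold are assumptions for illustration, and the case values are assumed to be distinct and non-negative.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical names throughout; this only illustrates the checks in the text.
enum class Strategy { JumpTable, ShiftedJumpTable, PowerOfTwo, Split };

// True if v is a positive power of two (exactly one bit set).
bool isPowerOfTwo(std::int64_t v) { return v > 0 && (v & (v - 1)) == 0; }

// Largest k such that 2^k divides every value, found by counting the low
// zero bits that all values share. Assumes non-negative values.
int commonShift(const std::vector<std::int64_t>& values) {
    std::int64_t combined = 0;
    for (std::int64_t v : values) combined |= v;
    if (combined == 0) return 0;
    int shift = 0;
    while ((combined & 1) == 0) {
        combined >>= 1;
        ++shift;
    }
    return shift;
}

// A table counts as "dense" here if at least half of its slots are used.
// The 50% threshold is an arbitrary assumption.
bool isDense(std::int64_t lo, std::int64_t hi, std::size_t count) {
    std::int64_t slots = hi - lo + 1;
    return static_cast<std::int64_t>(count) * 2 >= slots;
}

// Picks a strategy for a non-empty set of distinct, non-negative case values.
Strategy chooseStrategy(std::vector<std::int64_t> values) {
    std::sort(values.begin(), values.end());
    if (isDense(values.front(), values.back(), values.size())) {
        return Strategy::JumpTable;  // index the table directly
    }
    int shift = commonShift(values);
    if (shift > 0 && isDense(values.front() >> shift, values.back() >> shift,
                             values.size())) {
        return Strategy::ShiftedJumpTable;  // divide by 2^shift first
    }
    if (std::all_of(values.begin(), values.end(), isPowerOfTwo)) {
        return Strategy::PowerOfTwo;
    }
    return Strategy::Split;  // divide the cases up and recurse on each part
}
```

Even this toy version has four branches and an arbitrary tuning constant, which hints at how quickly the real thing grew.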

It was a mess.

I heard years later that the person who inherited my code hated me.

Lessons

As David Patterson and John Hennessy write in Computer Organization and Design, one of the great principles of computer architecture (also software engineering) is “make the common case fast”:

Making the common case fast will tend to enhance performance better than optimizing the rare case. Ironically, the common case is often simpler than the rare case. This common sense advice implies that you know what the common case is, which is only possible with careful experimentation and measurement.

In my defense, I did try to find out what switch statements looked like in practice (i.e., how many cases there were and how spread out the constants were), but the data just wasn’t available back in 1988. That did not, however, give me license to keep adding special cases whenever I could come up with a contrived example for which the existing compiler didn’t generate optimal code.

I should have sat down with an experienced compiler writer or developer to come up with our best guesses of what the common cases were and then cleanly handled only those. I would have written fewer lines of code, but that’s a good thing. As Stack Overflow co-founder Jeff Atwood has written, software developers are their own worst enemies:

I know you have the best of intentions. We all do. We’re software developers; we love writing code. It’s what we do. We never met a problem we couldn’t solve with some duct tape, a jury-rigged coat hanger, and a pinch of code….

It’s painful for most software developers to acknowledge this, because they love code so much, but the best code is no code at all. Every new line of code you willingly bring into the world is code that has to be debugged, code that has to be read and understood, code that has to be supported. Every time you write new code, you should do so reluctantly, under duress, because you completely exhausted all your other options. Code is only our enemy because there are so many of us programmers writing so damn much of it.

If I had written simple code that handled common cases, it could have been easily modified if the need arose, rather than leaving a mess that nobody wanted (or dared) to touch.

Second Place: Social Network Ads

When working on social network ads at Google (remember Myspace?), I wrote some C++ code that looked something like this:

    for (int i = 0; i < user->interests->length(); i++) {
      for (int j = 0; j < user->interests(i)->keywords.length(); j++) {
        keywords->add(user->interests(i)->keywords(i));
      }
    }

Readers who are programmers probably see the mistake: the last argument should be j, not i. My unit tests didn’t catch the mistake, nor did my reviewer.
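One way to make this class of slip harder to write is to drop the index variables entirely. The sketch below is self-contained and uses hypothetical stand-in types (`User`, `Interest`) built on `std::vector`; the real Google types are not shown in this article and surely looked different.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-ins for the real types, which are not public.
struct Interest {
    std::vector<std::string> keywords;
};

struct User {
    std::vector<Interest> interests;
};

// Collects every keyword from every interest, in order. Range-based loops
// leave no i or j to mix up.
std::vector<std::string> collectKeywords(const User& user) {
    std::vector<std::string> keywords;
    for (const Interest& interest : user.interests) {
        for (const std::string& keyword : interest.keywords) {
            keywords.push_back(keyword);
        }
    }
    return keywords;
}
```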

After going through the launch process, my code was pushed late one night — and promptly crashed all the computers in a data center.

It wasn’t a big deal, however. There were no outages, since code is tested in a single data center before being pushed globally. It just meant that the SREs had to stop playing pool and roll back some code. The next morning, I got an email telling me what had happened that included a stack dump of the crash. I fixed the code and added unit tests that would have caught the error. Since I had followed proper procedure (and there’s no way my code would have gone live if I hadn’t), that was that.
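The article does not show the original tests, so the following is only a guess at why a test could miss this bug and what a sharper one looks like. It assumes the weak fixture had a single interest with a single keyword, in which case i and j are both 0 and the bug is invisible. All names are hypothetical, and `at()` is used so that a bad index throws instead of crashing.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

using KeywordLists = std::vector<std::vector<std::string>>;
using Collector = std::vector<std::string> (*)(const KeywordLists&);

// Reproduces the indexing slip: the inner lookup uses i where j belongs.
std::vector<std::string> collectWithBug(const KeywordLists& interests) {
    std::vector<std::string> out;
    for (std::size_t i = 0; i < interests.size(); i++) {
        for (std::size_t j = 0; j < interests.at(i).size(); j++) {
            out.push_back(interests.at(i).at(i));  // bug: should be at(j)
        }
    }
    return out;
}

// The corrected version, identical except for the inner index.
std::vector<std::string> collectFixed(const KeywordLists& interests) {
    std::vector<std::string> out;
    for (std::size_t i = 0; i < interests.size(); i++) {
        for (std::size_t j = 0; j < interests.at(i).size(); j++) {
            out.push_back(interests.at(i).at(j));
        }
    }
    return out;
}

// True if the collector returns exactly the expected keywords; an
// out-of-range access counts as a failure.
bool passes(Collector collect, const KeywordLists& input,
            const std::vector<std::string>& expected) {
    try {
        return collect(input) == expected;
    } catch (const std::out_of_range&) {
        return false;
    }
}
```

A fixture such as `{{"music", "guitar"}, {"hiking"}}`, with several interests of different lengths, separates the two versions: the buggy one repeats "music" and then indexes past the end of the second list.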

Lessons

Some people think that making a mistake that big could cause someone to lose their job, but (a) programmers make mistakes and (b) the programmer is unlikely to make that mistake again.

Actually, I do know a programmer who was fired for a single honest mistake, despite being an excellent engineer. He was then hired (and later promoted) by Google, which didn’t care about the mistake even though my friend openly admitted it during the interview process.

There’s a story about Thomas Watson, the legendary Chairman and CEO of IBM:

"A very large government bid, approaching a million dollars, was on the table. The IBM Corporation — no, Thomas J. Watson Sr. — needed every deal. Unfortunately, the salesman failed. IBM lost the bid. That day, the sales rep showed up at Mr. Watson’s office. He sat down and rested an envelope with his resignation on the CEO’s desk. Without looking, Mr. Watson knew what it was. He was expecting it.

He asked, “What happened?”

The sales rep outlined every step of the deal. He highlighted where mistakes had been made and what he could have done differently. Finally he said, “Thank you, Mr. Watson, for giving me a chance to explain. I know we needed this deal. I know what it meant to us.” He rose to leave.

Tom Watson met him at the door, looked him in the eye and handed the envelope back to him saying, “Why would I accept this when I have just invested one million dollars in your education?”"

I have a t-shirt that says “If people learn from their mistakes, I must have a Master’s degree by now.” I have a PhD.

First Place: App Inventor API

To be really mortifying, a mistake should affect a large number of users, be public, exist for a long period of time, and come from someone who should have known better. My biggest mistake qualifies on all counts.

Worse is Better

I read The Rise of Worse is Better by Richard Gabriel when I was a grad student in the nineties, and I like it so much that I assign it to my students. If you haven’t read it recently, do so now. It’s short.

The essay contrasts doing “the right thing” with “the worse-is-better philosophy” along a number of dimensions, including simplicity:

The Right Thing: The design must be simple, both in implementation and interface. It is more important for the interface to be simple than the implementation.

Worse is Better: The design must be simple, both in implementation and interface. It is more important for the implementation to be simple than the interface.

Set that aside for a moment. I set it aside for years, unfortunately.

App Inventor

I was part of the team at Google that created App Inventor, an online drag-and-drop programming environment that enables beginners to create Android apps.

Back in 2009, we were rushing to release an alpha version in time for teacher workshops in the summer and classroom use in the fall. I volunteered to implement sprites, fondly remembering writing games with them on the TI-99/4 in my youth. For those not familiar with the term, a sprite is an object with a 2D representation and the ability to move and interact with other program elements. Some examples of sprites are spaceships, asteroids, balls, and paddles.

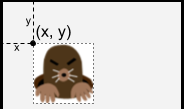

We implemented App Inventor, which is itself object-oriented, in Java, so it’s objects all the way down. Since balls and image sprites are very similar in behavior, I created an abstract Sprite class, with properties (fields) such as X, Y, Speed, and Heading. Balls and image sprites share common methods for collision detection, bouncing off the edge of the screen, etc.

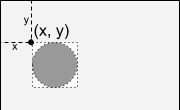

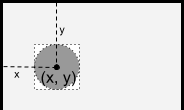

The main difference between a ball and an image sprite is what is drawn: a filled-in circle or a bitmap. Since I implemented image sprites first, it was natural to make the x- and y-coordinates specify the upper-left corner of where the image was placed on the enclosing canvas.

Once I got sprites working, I realized that it would be simple to implement a ball object with very little code. The problem was that I did so in the simplest way (from the point of view of the implementer): having the x- and y-coordinates specify the upper-left corner of the bounding box containing the ball.

What I should have done is have the x- and y-coordinates specify the center of the circle, as is done in every single math book and everywhere else circles are specified.

Unlike my other mistakes, which primarily affected my colleagues, this affected the millions of App Inventor users, many of them children or otherwise new to programming. They had to do extra work in every app they created that used the ball component. While I can laugh off my other mistakes, I am truly mortified by this one.
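The extra work amounts to a radius offset in every placement. App Inventor programs are built from blocks, not C++, so the sketch below is only an analogy with hypothetical names (`Point`, `cornerForCenter`, `centerForCorner`).

```cpp
// Hypothetical helper types; App Inventor itself uses visual blocks.
struct Point {
    double x;
    double y;
};

// With the original Ball API, (X, Y) named the upper-left corner of the
// bounding box, so putting the ball's center on a point meant subtracting
// the radius from both coordinates.
Point cornerForCenter(Point center, double radius) {
    return Point{center.x - radius, center.y - radius};
}

// The reverse conversion: recover the center from the stored corner.
Point centerForCorner(Point corner, double radius) {
    return Point{corner.x + radius, corner.y + radius};
}
```

For example, centering a ball of radius 10 at (100, 50) required setting X to 90 and Y to 40.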

I finally patched the mistake just recently, ten years later. I say “patched” and not “fixed” because, as the great Joshua Bloch says, “APIs are forever”. We couldn’t make any change that would affect existing programs, so we added a property OriginAtCenter, which defaults to false in old programs and to true going forward. Users will be right to wonder why on earth the origin would ever be anywhere but the center. Answer: Ten years ago, one programmer was lazy and didn’t create the obvious API.
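A backward-compatible patch of this kind might be modeled as follows. This is a sketch in C++ for consistency with the earlier snippet; the real component is written in Java, and everything here except the property name OriginAtCenter is an assumption, including the `makeBall` loading rule.

```cpp
// Hypothetical model of the Ball component, not App Inventor's actual code.
class Ball {
public:
    Ball(double radius, bool originAtCenter)
        : radius_(radius), originAtCenter_(originAtCenter) {}

    // Stores (x, y) as given; their meaning depends on originAtCenter_.
    void moveTo(double x, double y) {
        x_ = x;
        y_ = y;
    }

    // Where the circle's center actually ends up on the canvas.
    double centerX() const { return originAtCenter_ ? x_ : x_ + radius_; }
    double centerY() const { return originAtCenter_ ? y_ : y_ + radius_; }

private:
    double radius_;
    bool originAtCenter_;
    double x_ = 0.0;
    double y_ = 0.0;
};

// Assumed loading rule: projects saved before the property existed keep the
// old corner-based meaning, while new projects get the center-based one.
Ball makeBall(double radius, bool savedBeforeOriginAtCenter) {
    return Ball(radius, !savedBeforeOriginAtCenter);
}
```

The cost of the patch is visible in the two conditional expressions: both behaviors now have to be kept and tested indefinitely.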

Lessons

If you ever develop an API (which almost all programmers do), you should follow best practices, which you can learn from Joshua Bloch’s video “How To Design a Good API And Why It Matters” or the bumper sticker summary, which includes:

APIs can be among your greatest assets or liabilities. Good APIs create long-term customers; bad ones create long-term support nightmares.

Public APIs, like diamonds, are forever. You have one chance to get it right so give it your best.

Early drafts of APIs should be short, typically one page with class and method signatures and one-line descriptions. This makes it easy to restructure the API when you don’t get it right the first time.

Code the use-cases against your API before you implement it, even before you specify it properly. This will save you from implementing, or even specifying, a fundamentally broken API.

If I’d written even a one-page proposal with a sample use case, I probably would have realized my design mistake and fixed it. If not, one of my teammates would have. Any decision that people will have to live with for years deserves at least a day’s consideration (whether or not we’re talking about programming).

The title of Richard Gabriel’s essay “Worse is Better” refers to the benefit of being first to market, even with a flawed product, rather than taking forever to create something perfect. When I looked back at the sprite code, however, I saw that doing things the right way wouldn’t even have required more code. By all measures, I made a poor decision.

Conclusion

Programmers make mistakes every day, whether it’s writing buggy code or failing to try new things that would increase their skill and productivity. While it is possible to be a programmer without making mistakes as big as mine, it is not possible to be a good programmer without admitting and learning from your mistakes.

As a teacher, I often encounter students who fear they’re not cut out for computer science because they make mistakes, and I know that imposter syndrome is rampant in tech. While I hope readers will remember all of the lessons from this article, the one I most hope you’ll remember is that whether we laugh, cry, blush, or shrug them off, all of us make mistakes. Indeed, I’ll be surprised and disappointed if I can’t write a future sequel to this article.