One of the most powerful attributes of Stack Overflow (SO) is the accumulation of developers’ knowledge over time. Community members have contributed more than 18 million questions and 27 million answers. When a developer is stuck on a coding problem, they search through this vast trove of information to see if a solution to their particular conundrum has already been offered. Some use the internal SO search feature while others use search engines such as Google or Bing, narrowing down the search to the stackoverflow.com domain.

Software developers searching for answers might use natural language - “How do I insert an element into an array at a specific position?” - or they might choose a few important keywords relevant to the programming task at hand and use those as their query, hoping that the search engine will return the relevant solutions. Often they find the relevant code, but not a clear explanation of how to implement it. Other times they find a great explanation of how one might solve the problem, but not the actual code.

Earlier this year, a team of computer science researchers published a paper with a novel solution to this problem: CROKAGE - the Crowd Knowledge Answer Generator. This service takes the description of a programming task as a query and then provides relevant, comprehensive programming solutions containing both code snippets and their succinct explanations.

The backstory for this fascinating service begins in 2017, when Rodrigo Fernandes, a PhD student in computer science at The University of Uberlandia in Brazil, was looking for a thesis topic. He decided to investigate duplications in Stack Overflow under Professor Marcelo de Almeida Maia’s supervision. After a preliminary literature review, Rodrigo came across two previous works, DupPredictor and Dupe, that detect duplicate questions on Stack Overflow. Rodrigo reproduced both works, which resulted in a paper published at SANER’2018 in Italy.

During that conference, Rodrigo was introduced to one of the Dupe authors, Professor Chanchal K. Roy from the University of Saskatchewan. They had a nice chat and envisioned an interesting idea which would turn into a collaborative research project: how to search Stack Overflow in such a way that results returned both relevant code snippets and a natural language explanation for them.

As computer scientists, this group of academics knew that developers searching for solutions to coding questions are hampered by a lexical gap between their query (the task description) and the information (lines of actual code) associated with the solution they are looking for. Because of this gap, developers often have to browse dozens of documents in order to synthesize a full solution.

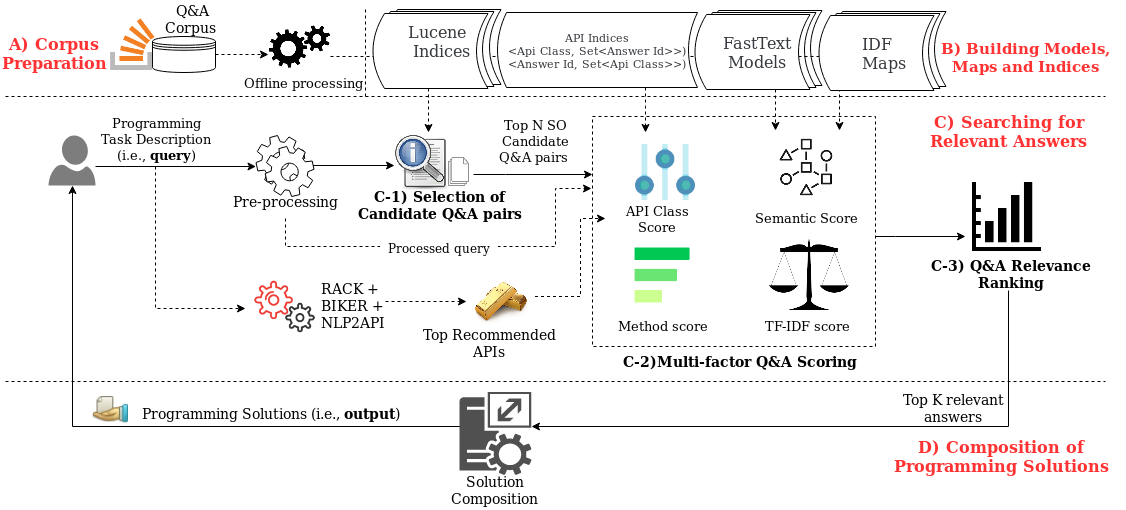

Luckily, the team did not have to start from scratch. Prof. Roy brought Rodrigo to the University of Saskatchewan as a visiting research student. One of Prof. Roy’s students there was Masud Rahman, a PhD candidate in computer science, who had previously researched translating a programming task written in natural language into a list of relevant API classes with the help of Stack Overflow. The team was able to build on two previous tools, RACK and NLP2API, developed under Prof. Roy’s lead with Masud as the core researcher.

In order to reduce the gap between the queries and solutions, the team trained a word-embedding model with FastText, using millions of Q&A threads from Stack Overflow as the training corpus. CROKAGE also expanded the natural language query (task description) to include unique open source software library and function terms, carefully mined from Stack Overflow.
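To make the query-expansion idea concrete, here is a minimal sketch in Python. It is not CROKAGE's implementation: the `KEYWORD_TO_APIS` table, the `expand_query` function, and the simple vote-counting scheme are all assumptions made for illustration, standing in for associations that would really be mined from Stack Overflow.

```python
from collections import Counter

# Hypothetical mined data: task keyword -> API classes that appear in answers
# to questions mentioning that keyword. Real associations would be mined from
# Stack Overflow; these entries are invented for illustration.
KEYWORD_TO_APIS = {
    "file": ["File", "Paths", "Files"],
    "path": ["Paths", "Path", "File"],
    "url": ["URL", "URI"],
    "convert": ["URI"],
}


def expand_query(query: str, top_k: int = 3) -> list[str]:
    """Return the query tokens plus the top_k most frequently associated API classes."""
    tokens = query.lower().split()
    votes = Counter()
    for token in tokens:
        # Each keyword "votes" for the API classes associated with it.
        votes.update(KEYWORD_TO_APIS.get(token, []))
    # Sort by vote count (descending), then alphabetically so ties are stable.
    ranked = sorted(votes, key=lambda api: (-votes[api], api))
    return tokens + ranked[:top_k]


if __name__ == "__main__":
    print(expand_query("Convert between a file path and url"))
```

The expanded query now shares vocabulary with answers that mention those classes in their code, which is the kind of overlap a plain keyword query would miss.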

The team of researchers combined four weighted factors to rank the candidate answers. They made use of the traditional information retrieval (IR) metrics such as TF-IDF and asymmetric relevance. To tailor the factors to Stack Overflow, they also adopted specialized ranking mechanisms well suited to software-specific documents.
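One common way to formulate asymmetric relevance over word embeddings is sketched below. This particular formula (an IDF-weighted average of each query word's best cosine match in the document) is an assumption and may differ from the one used in the paper; the toy two-dimensional vectors and the `idf` values are invented for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def asymmetric_relevance(query_tokens, doc_tokens, vectors, idf):
    """IDF-weighted average, over query words, of each word's best match in the document.

    The measure is asymmetric: it asks how well the document covers the query,
    not how well the query covers the document.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for q in query_tokens:
        if q not in vectors:
            continue  # skip out-of-vocabulary query words
        best = max(
            (cosine(vectors[q], vectors[d]) for d in doc_tokens if d in vectors),
            default=0.0,
        )
        weight = idf.get(q, 1.0)
        weighted_sum += weight * best
        weight_total += weight
    return weighted_sum / weight_total if weight_total else 0.0


if __name__ == "__main__":
    # Toy embeddings: "insert" and "add" point in nearly the same direction.
    vectors = {"insert": (1.0, 0.0), "add": (0.9, 0.1), "list": (0.0, 1.0)}
    idf = {"insert": 2.0, "add": 1.5, "list": 1.0}
    print(asymmetric_relevance(["insert"], ["add", "list"], vectors, idf))
    print(asymmetric_relevance(["add", "list"], ["insert"], vectors, idf))
```

In this toy setup a query for "insert" scores highly against a document that only says "add", even though the two share no words, while swapping the roles of query and document gives a lower score.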

As one such software-specific mechanism, they collected the programming functions that potentially implement the target programming task (the query), and then promoted the candidate answers containing those functions. They hypothesized that an answer containing a code snippet that uses the relevant functions, complemented by a succinct explanation, is a strong candidate for a solution.
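The ranking step described above can be sketched as a weighted sum over per-answer factor scores. Everything specific here is an assumption: the factor names (`lexical`, `semantic`, `api_method`, `api_class`), the weights, and the `Candidate` type are hypothetical stand-ins, and the source does not say how the real weights were chosen.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A candidate answer with four factor scores, each assumed to lie in [0, 1]."""
    answer_id: int
    lexical: float      # e.g. a TF-IDF based score (assumed factor name)
    semantic: float     # e.g. an embedding-based relevance score (assumed)
    api_method: float   # share of relevant functions used in the snippet (assumed)
    api_class: float    # hypothetical fourth factor, for illustration only


# Hypothetical weights; they are not taken from the paper.
WEIGHTS = {"lexical": 0.3, "semantic": 0.3, "api_method": 0.3, "api_class": 0.1}


def score(candidate: Candidate, weights=WEIGHTS) -> float:
    """Weighted sum of the candidate's factor scores."""
    return sum(w * getattr(candidate, name) for name, w in weights.items())


def rank(candidates):
    """Return candidates ordered from highest to lowest combined score."""
    return sorted(candidates, key=score, reverse=True)


if __name__ == "__main__":
    plain = Candidate(1, lexical=0.5, semantic=0.5, api_method=0.0, api_class=0.0)
    uses_api = Candidate(2, lexical=0.4, semantic=0.4, api_method=1.0, api_class=0.0)
    print([c.answer_id for c in rank([plain, uses_api])])
```

Here the second answer matches the query text slightly less well, but it is promoted because its snippet uses the functions associated with the task.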

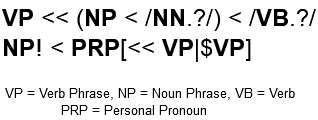

To ensure that the written explanation was succinct and valuable, the team applied natural language processing to the answers ranked most relevant by the four weighted factors. Unlike earlier studies, they selected programming solutions containing both code snippets and code explanations. The team also discarded trivial sentences from the explanations. CROKAGE uses the following two patterns to identify the important sentences:

These patterns ensure that each sentence has a verb that is associated with a subject or an object. The first pattern guarantees that a verb phrase is followed by a noun phrase, while the second guarantees that a noun phrase is followed by a verb phrase. They also ensure that the noun phrase is not a personal pronoun. According to the literature, sentences with these structures typically provide the most meaningful information. To synthesize a high-quality code explanation, the team also discards several frequent but trivial sentences (e.g., “Try this:”, “You could do it like this:”, “It will work for sure”, “It seems the easiest to me” or “Yes, like doing this”).
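The trivial-sentence filter can be illustrated with a small sketch. It covers only the phrase-based discarding step; the grammatical patterns require a syntactic parser and are not reproduced here. The regular expressions, the naive sentence splitter, and the function names are assumptions for illustration, seeded with the example phrases quoted above.

```python
import re

# Openers treated as trivial, taken from the examples quoted in the text.
TRIVIAL_PATTERNS = [
    r"^try this\b",
    r"^you could do it like this\b",
    r"^it will work for sure\b",
    r"^it seems the easiest to me\b",
    r"^yes, like doing this\b",
]
_TRIVIAL = re.compile("|".join(TRIVIAL_PATTERNS), re.IGNORECASE)


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!', '?' or ':' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?:])\s+", text) if s.strip()]


def keep_informative(text: str) -> list[str]:
    """Drop sentences that start with one of the trivial openers."""
    return [s for s in split_sentences(text) if not _TRIVIAL.match(s)]


if __name__ == "__main__":
    answer = "Try this: Use Paths.get to build the path. It will work for sure."
    print(keep_informative(answer))
```

Because the splitter only breaks on punctuation followed by whitespace, identifiers such as `Paths.get` stay intact inside a sentence.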

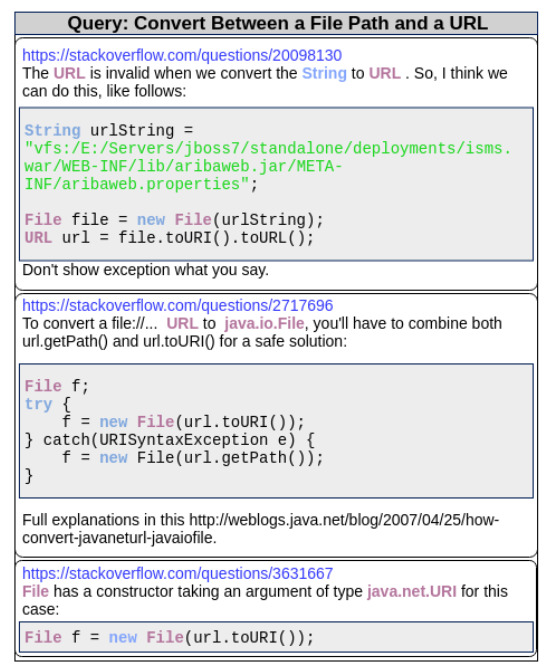

Let us consider a search query: “Convert between a file path and url”. CROKAGE delivers the solutions shown in the figure below. Note that the tool provides a ranked list of questions and answers. Each of these solutions contains not only a relevant code snippet but also a succinct explanation of it. Such a combination of relevant code and corresponding explanation is very likely to help a developer understand both the solution to their problem and how best to implement that code in practice.

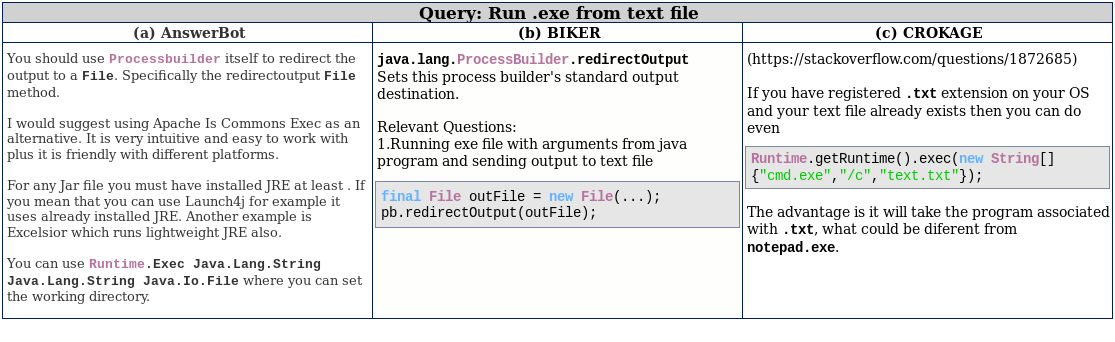

The team analyzed the results of 48 programming queries processed by CROKAGE. The results outperformed six baselines, including the state-of-the-art research tool, BIKER. Furthermore, the team surveyed 29 developers across 24 coding queries. Their responses confirm that CROKAGE produces better results than the state-of-the-art tool in terms of relevance of the suggested code examples, benefit of the code explanations, and overall solution quality (code + explanation).

To be sure, CROKAGE still has some limitations. For instance, if the query is poorly formulated, the tool will not suggest how to improve it. As with any other search tool, the results, though encouraging, are not perfect. The team is still investigating other factors that could not only help find higher quality answers, but also improve the synthesized solution offered up as a final result.

If you would like to try CROKAGE for yourself, the service is experimentally available at http://www.isel.ufu.br:9000. It’s limited to Java queries for now, but the creators hope to have an expanded version open to the public soon.

More details about the tool can be found in the original paper. If you are interested in the project's source code, the authors have provided a replication package on GitHub.

The team would also like to acknowledge the work that went into the tools that preceded CROKAGE - BIKER, RACK, and NLP2API - without which their current work would not exist.

Disclaimer: As CROKAGE is a research project deployed on a university lab server, it may suffer from some network instability and server overload.