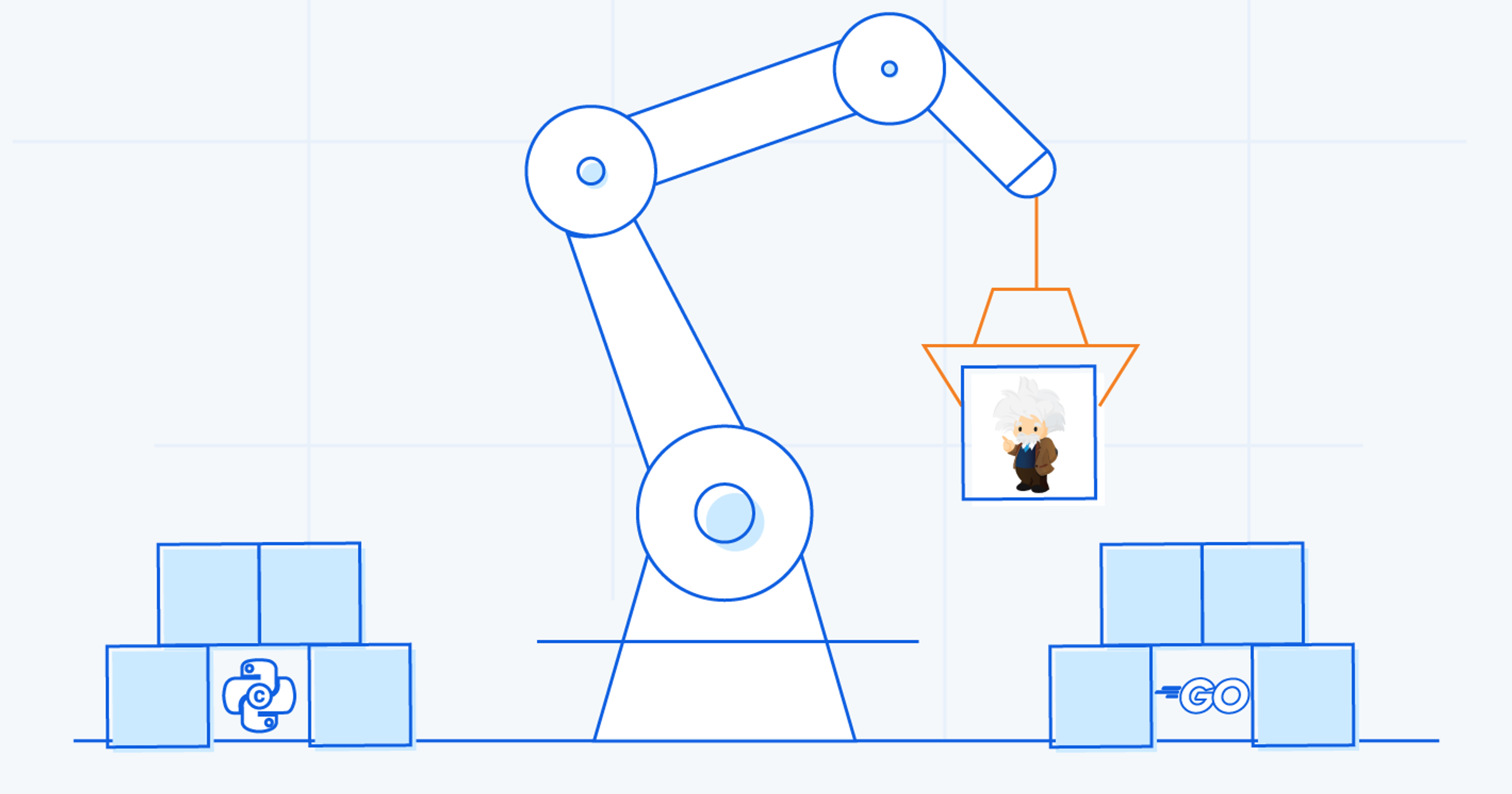

with Antonio Scaramuzzino

In our 2019 Dev Survey, we asked what kind of content Stack Overflow users would like to see beyond questions and answers. The most popular response was “tech articles written by other developers.” So from now on we’ll be regularly publishing articles from contributors. If you have an idea and would like to submit a pitch, you can email pitches@stackoverflow.com.

It’s rare that we get a chance to directly compare two technologies against each other for the same task. But sometimes the stars align, either because you start experiencing negative effects from your current stack, new technology appears that meets your exact needs, or the scale and feature set of your project outpaces the tech on hand.

Here at Salesforce, we had just this situation arise in the past few years. We ported most of our Einstein Analytics backend from a Python-C hybrid to Go. Go is a language that Google designed for large-scale, modern software engineering. The story goes that Google engineers had the idea to create a language designed for their large-scale applications and started designing Go while they were waiting for their massive C++ projects to compile. This post will discuss our experience in moving enterprise-level software from a Python-C hybrid over to an (almost) completely Go application.

Einstein Analytics adds business intelligence processing to Salesforce instances. Through cloud-based AI processing, it generates actionable insights (forecasts, pipeline reports, performance measurements) directly from Salesforce CRM data plus as much external data as the customer needs, regardless of its structure and format.

Behind the scenes, a given Salesforce instance exposes Einstein Analytics functionality as part of regular Salesforce REST APIs. These link to a cluster of query servers, which each serve queries from their linked datasets cached in memory, though they can populate their cached data from any node in the cluster. To manage all these requests, we have an optimized process on each of these servers that routes requests to the appropriate node and forwards the response to the originator of the API request. To any query server reading datasets, these calls all look local. And local means fast. Larger datasets are partitioned, and a stateless query coordinator aggregates data from remote partition subqueries.

Datasets are created using an ETL (extract, transform, load) batch process, then stored in a proprietary columnar database format.

The query engine and the dataset creation tools for the product that eventually became Einstein Analytics were originally written in C for performance, with a Python wrapper that provided higher-level functionality: parsing queries, a REST API server, the expression engine, and more. In essence, the product was built to have the best of both worlds. Python is great for quickly writing higher-level applications but doesn’t always deliver the high performance needed at an enterprise level. C creates highly performant executables, but adding features takes a lot more time.

First piece to Go

Initially, this combination worked. But after years of building the software, Einstein Analytics started to show performance slowdowns. That’s because any feature that wasn’t part of the core query engine was added to the Python wrapper. Features could be developed and deployed rapidly this way, but over time, they dragged the entire system down. Python doesn’t do multi-threading very well (CPython’s global interpreter lock lets only one thread execute Python bytecode at a time), so the more the wrapper was being asked to do, the worse it performed.

The previous team was already looking at porting the wrapper over to Go, so we took a look as well. We soon realized that on an enterprise-level system, we would have two additional problems. First, Python is dynamically typed, which was great for a small team rapidly developing new ideas and putting them into production, but less great for an enterprise-scale application that some customers were paying millions of dollars for. Second, we foresaw a vast dependency nightmare on the horizon, as deploying the right Python libraries, versions, and files would become a chore.

So in 2014, we decided to port the Python wrapper to Go. We were initially wary about the young Go ecosystem, but when I started looking into the language’s design goals (“Go at Google: Language Design in the Service of Software Engineering”), I was impressed with how closely they aligned with ours. Go is built for software engineering more than language sophistication, so its strengths include solid built-in tooling, quick compiles and deploys, and easy troubleshooting.

The reality of enterprise software is that you spend a lot more time reading code than writing it. We appreciated that Go makes the code easy to understand. In Python, you could write super elegant list comprehensions and beautiful code that's almost mathematical. But if you didn’t write the code, then that elegance can come at the expense of readability.

The port project went very well. We were super happy with the performance and maintainability of the new wrapper. One of the few complaints we had involved a trade-off the language made in choosing scalability over raw performance to help its garbage collection: the Go team decided to start storing primitive types in interfaces as pointers instead of values, which introduced performance overhead and additional allocations for us.
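To make the interface trade-off concrete, here is a minimal sketch (illustrative only, not code from the product) that counts heap allocations when an `int64` is stored in an interface value versus a plain variable. The names `boxAllocs`, `plainAllocs`, `ifaceSink`, and `intSink` are hypothetical, and the exact counts are an assumption about current toolchains: they depend on the Go version and on the value stored, since recent compilers skip the allocation for some small values and constants.

```go
package main

import (
	"fmt"
	"testing"
)

// Package-level sinks keep the stores observable so the compiler cannot
// discard them. Both names are hypothetical.
var (
	ifaceSink interface{}
	intSink   int64
)

// boxAllocs reports the average number of heap allocations per store of v
// into an interface value.
func boxAllocs(v int64) float64 {
	return testing.AllocsPerRun(1000, func() { ifaceSink = v })
}

// plainAllocs does the same for a store into a plain int64 variable.
func plainAllocs(v int64) float64 {
	return testing.AllocsPerRun(1000, func() { intSink = v })
}

func main() {
	big := int64(1) << 40 // large value, to avoid small-value shortcuts
	fmt.Println("interface store allocs:", boxAllocs(big))
	fmt.Println("plain store allocs:    ", plainAllocs(big))
}
```

In a hot loop that touches millions of rows, one extra allocation per value adds up quickly, which is why this change was noticeable for us.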

Everything must Go

But the experience was good enough that, when it came time in 2016 to write a new query engine kernel with a better optimizer and improve our dataset creation tools, we decided to do them in Go. We were gaining expertise at about the same pace that the Go ecosystem was maturing, so it made sense to reduce overhead and make our code reusable in a single language. Plus, we wanted to eliminate the cgo interface overhead.

The big unknown for that was performance. Go uses a lightweight “green thread” model of asynchronous IO in its goroutines, which gave us the multi-threading advantage over Python. But C code runs as fast as you let it: it trades built-in safety for speed, and C compilers are more mature and have better optimizations. Our team created a proof of concept (POC) that achieved near parity in performance with the C engine, but only if we used the right programming patterns:

- Buffer all IO to reduce the overhead of Go system calls. On a system call, the current goroutine yields to that call.

- When possible in tight loops, use structs instead of interfaces to minimize the indirection overhead of interface method calls.

- Use pre-allocated buffers within tight loops (similar to how io.Reader works) to minimize garbage collection pressure.

- Process data rows in batches as a workaround for poor compiler inlining, so as to move the actual computation closer to the data and minimize the overhead of each function call.

This rewrite was completed in 2017 and the new Go version of Einstein Analytics went to general availability in 2018. By keeping everything in the same language, we could reuse code and work more efficiently. And the cross-platform and transpiling potential makes porting the code easy. If we ever need any of this code in a mobile app, we can cross compile it to iOS or Android and it will just work.

Earlier, I said the version is (almost) completely written in Go. One exception is our cluster manager, which may seem a little odd, as Kubernetes and other types of cluster orchestration applications are the most common usage for Go, but the team that owns this service felt more comfortable using Java. It’s important to allow teams to own their own components; you can’t force people to do things they don’t want to do.

While Go has some limitations that we’ve had to work around, we’re very pleased with the results. And Go continues to improve. The Go team has addressed some of the weaknesses in the compiler by moving it to a static single assignment (SSA) form, which makes it easier to implement fancy optimizations. Garbage collection is getting more efficient, and often the compiler is smart enough to perform escape analysis to detect when variable values can be cheaply allocated on the stack instead of the heap.

As a developer, if you want to write highly performant code in any language, you need to be familiar with how the compiler works. That’s not packed into the language. Go’s language specification is deliberately short and simple. But knowing about the compiler requires gathering up all this tribal knowledge scattered about [editor’s note: Stack Overflow has a product for that, you know], things that detail all the optimizations you can use in the specific version of Go you’re using.

After these ports, our team has built up some expertise with Go and its compiler quirks. But you can still get burned. For example, you can very easily write code in which data that you want on the cheaper stack ends up on the much more expensive heap instead. You won’t even know this is happening by reading over your code. That’s why, as with any new language that you require high performance from, you need to monitor processes closely and create benchmarks around CPU and memory use. And then share what you learn with the community so that this knowledge becomes less tribal.
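As a rough illustration of how a small change in code shape moves data from the stack to the heap, consider the sketch below. The `point` type, both constructors, and the sink variables are hypothetical, and the exact counts are an assumption about current toolchains, since escape analysis differs between Go versions.

```go
package main

import (
	"fmt"
	"testing"
)

// point is a small hypothetical record type.
type point struct{ x, y int64 }

// newPointValue returns the struct by value, so nothing has to be placed on
// the heap to hand the result back to the caller.
func newPointValue(x, y int64) point { return point{x, y} }

// newPointPtr returns a pointer. Once that pointer is stored somewhere that
// outlives the call, the struct has to be allocated on the heap.
func newPointPtr(x, y int64) *point { return &point{x, y} }

// Package-level sinks keep the results alive so the calls are not optimized away.
var (
	valSink point
	ptrSink *point
)

// allocs reports the average number of heap allocations per call of f.
func allocs(f func()) float64 {
	return testing.AllocsPerRun(1000, f)
}

func main() {
	fmt.Println("by value:  ", allocs(func() { valSink = newPointValue(1, 2) }))
	fmt.Println("by pointer:", allocs(func() { ptrSink = newPointPtr(1, 2) }))
}
```

Two standard tools help here: `go build -gcflags=-m` prints the compiler's escape analysis decisions, and `go test -bench . -benchmem` reports allocations per operation alongside timings.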

Conclusion

It can be a gamble to choose a newer language and introduce it into an enterprise company. Fortunately, the Go ecosystem has grown with us. Google continues to back the language and it’s been adopted at other large companies. Now we have a team of engineers working on Go full time, and we continue to see positive results. We look forward to growing with the Go community and sharing more of what we learn from our experiences.

Salesforce believes that supporting open source technologies like Go drives our industry forward, kick-starts new careers, and builds trust in the products we create. We contribute to thousands of open source projects every year, from key technologies powering our innovation to community projects that make our world a better place. Read more about open source at Salesforce here.

The Stack Overflow blog is committed to publishing interesting articles by developers, for developers. From time to time that means working with companies that are also clients of Stack Overflow's through our advertising, talent, or teams business. When we publish work from clients, we'll identify it as Partner Content with tags and by including this disclaimer at the bottom.