In the early days of Stack Overflow, we were just one website running a fast and lean operation. Stackoverflow.com was built for developers by developers as a small startup. Like all startups, we prioritized the quality attributes that mattered most to us and let many others fall by the wayside, including unit testing according to best practices. The site was made for developers, and we found that a lot of users were happy enough to report bugs and work around them while we fixed them.

Fast forward to a few years back when we launched Stack Overflow for Teams Enterprise. We suddenly had a paid product that big companies were using. Unlike our community site users, they didn’t want to find bugs in production. We had integration test suites in place, but our testing infrastructure—in particular, our unit tests—lagged far behind the maturity of our product.

We’re now working to change that. End-to-end and integration tests are fine and part of a balanced testing program, but they can be slow. If you’re looking to enable test-driven development (and we are), as well as quickly test new features, then you should be writing unit tests. I’ve been singing the praises of unit testing for a good while now, and I’m excited to bring it to Stack Overflow.

This article will cover what we’re doing to ramp up our unit testing program.

A refresher on test types

Before we dive into how we’re adding unit tests to our dev cycle, let's go over the common test types. Here's how we define the different categories of tests and their benefits and shortcomings.

Exploratory testing: This type of testing lets QA engineers and testers focus on what they're good at: finding edge cases and bugs. You give them early builds and let them bang on those builds until something breaks. Testers shouldn't have extensive manual regression test plans that they follow for each change or release. If you have a mature set of e2e, integration, and unit tests that cover the regression part, then you want your testers to find bugs by using their creativity.

End-to-end (e2e): These tests simulate how a real user would interact with your application, and therefore require a complete application setup, including networking, databases, and dependencies. You can set up mock versions of these, but often they’ll use the real thing. When e2e tests pass, you can have a high degree of confidence that the application works as expected, at least for happy-path actions; edge cases and errors take a lot of work to test in e2e. On the downside, because e2e tests span the entire application, they can be both slow and flaky.

Integration: These test how a feature works with its dependencies. They are automated and don’t cover the whole application. As with e2e tests, you can use mocks and stubs to prevent actions like sending emails to customers, but the point of an integration test is to test how a feature works with dependencies, so consider using the real thing when you can. Integration tests let you test actions like SQL queries that cannot be tested without accessing dependencies, and they do it without all the baggage that comes from running a full setup. But anything that tests dependencies can be slow and flaky.

Unit: There's some debate about what exactly a unit test is. To make sure we’re on the same page: we consider a unit test to be an automated test that doesn’t talk to out-of-process dependencies; it tests the smallest piece of code to ensure that it functions correctly. It just tests a single process and nothing else. Unit tests are fast and operate independently of anything else in the application. On the downside, they only look at a single piece of functionality, so you could conceivably have all your unit tests pass while the feature as a whole is broken. They can also be tedious to maintain if the test is too close to the implementation of the feature.

The big downside for us, though, is that our historical architecture has made it difficult to write unit tests.

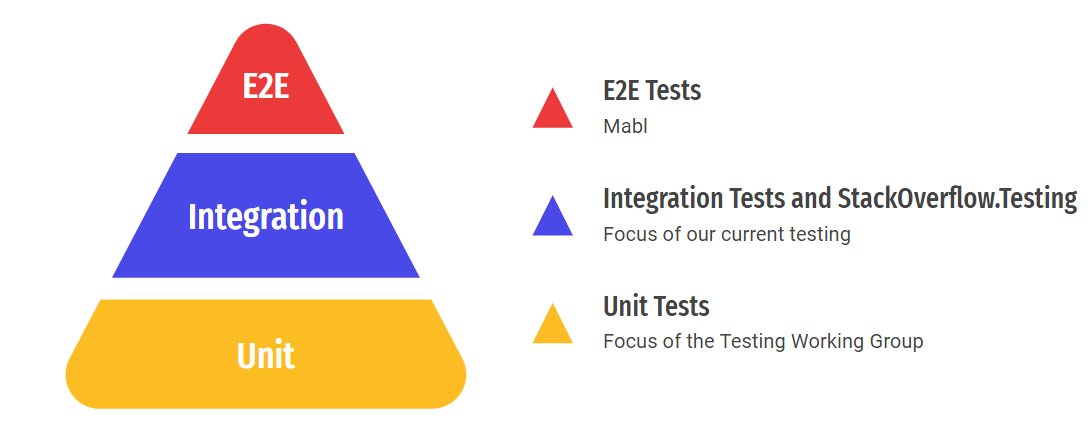

Best practices for testing suggest that we should have a large number of unit tests, a medium number of integration tests, and only a few e2e tests.

As we had nearly no unit tests, we had to get moving.

Why do we need unit testing anyway?

You may wonder why we’re adding unit testing now—we’ve made it this far and done pretty well for ourselves, right?

As we’ve mentioned, we’re maturing as an engineering organization. We have paid products that large enterprises pay good money for. We have a lot of new tech investment on our roadmap for the next few years, so we’ll need a resilient codebase that lets us refactor code when necessary. To paraphrase a familiar motto, it lets us move fast without breaking things. Plus, refactoring the code for the tests lets us create a baseline of clean code and enforce the “clean beach rule” for future code: leave the code as clean as, or cleaner than, you found it.

Besides the benefits for the code, unit testing makes our overall testing program better and faster. We used to spend a lot of time on manual regression testing and manual testing during pull request reviews. Automating these tests as unit tests will free up a lot of developer time. And it gets us closer to test-driven development, which will let us continue to ship new features to all three editions of our Stack Overflow for Teams product and our community sites even when those features require changes to existing code.

A good testing program leads to a better engineering program, so the effort we spend creating unit tests will make our lives easier (and more productive). Clean, well-written tests serve as a form of documentation; you can read through the tests and learn exactly what the associated code is doing. To encourage our engineers to work on test code when they work on product code, we wanted everyone to own the tests themselves and to feel free to change and modify them as needed.

There were a number of explicit anti-goals we had for this project: results that we were not trying to achieve at all. In building out unit tests, we were not trying to create as many tests as possible, or to reach a magical test coverage percentage, or even to follow the testing pyramid strictly. There was no plan to run testing sprints, to create tests for existing code en masse, or to couple tests to implementation.

In short, we needed to get our code into shape so we could build tests easily, but we weren’t trying to suddenly have test coverage on every piece of code already deployed in production. This is preparation for the future; much of our code has been battle tested by our community of developers.

What we did

In order to create genuine unit tests, we needed to ensure that any piece of functionality could be isolated from its dependencies. And because almost everything that happens on our public sites and Stack Overflow for Teams instances draws data from a database, we needed a way to indicate to tests when to pull mock data. We use both Dapper and the Entity Framework within .NET to manage our database connections, so we created an interface that extends DbContext so that we can treat mocked data as a database connection.
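
To make the idea concrete, here is a minimal sketch in TypeScript rather than the .NET code described above. The names `DataContext`, `InMemoryContext`, and `getTopQuestionTitles` are hypothetical and only illustrate the pattern: code under test depends on an interface, and a test supplies an in-memory implementation in place of a real database connection.

```typescript
// Hypothetical sketch: these names are illustrative, not Stack Overflow's actual code.
interface Question {
  id: number;
  title: string;
  score: number;
}

// The abstraction that feature code depends on instead of a concrete database context.
interface DataContext {
  questions(): Question[];
}

// Test double: serves mock data from memory, so no out-of-process dependency is needed.
class InMemoryContext implements DataContext {
  private readonly rows: Question[];

  constructor(rows: Question[]) {
    this.rows = rows;
  }

  questions(): Question[] {
    // Return a copy so callers cannot mutate the mock data by accident.
    return [...this.rows];
  }
}

// Code under test only sees the interface, so it cannot tell mock data from real data.
function getTopQuestionTitles(db: DataContext, limit: number): string[] {
  return db
    .questions()
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((q) => q.title);
}

const testDb = new InMemoryContext([
  { id: 1, title: "How do I exit Vim?", score: 10 },
  { id: 2, title: "What is a NullReferenceException?", score: 25 },
  { id: 3, title: "Why is my regex slow?", score: 5 },
]);
const topTitles = getTopQuestionTitles(testDb, 2);
```

A production implementation of the same interface would wrap the real connection; the function under test would not need to change.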

Stack Overflow executes a lot of the same queries over and over. As our site was built for speed, we compile a lot of these queries in the Entity Framework. Compiling queries against our DbContext interface was a bit problematic because EF.CompileQuery expects a concrete instance of a DbContext. We came up with a helper class to make it easy for us to use compiled queries when targeting a real database and use in-memory queries when running unit tests. The query stays exactly the same so we know we test the correct behavior.

Once we were able to connect to mock databases, we needed to provide a way to create the mock data that is part of the test. So we introduced a builder that can create mock site data for tests. We’re using builders instead of constructors so we can change how these mock sites are built without having to rewrite all of our unit tests. Builders construct an object by only explicitly passing the information that you need; everything else uses defaults. Again, we did not want to tightly couple our tests and implementation, so we chose to abstract object construction as much as we could.
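
A builder along these lines might look like the following TypeScript sketch. The `Site` fields, their defaults, and the `SiteBuilder` method names are assumptions made up for illustration; the point is only that a test states the values it cares about and inherits defaults for the rest.

```typescript
// Hypothetical sketch: field names and defaults are assumptions for illustration.
interface Site {
  id: number;
  name: string;
  isTeam: boolean;
  locale: string;
}

class SiteBuilder {
  // Defaults live in one place, so adding a field to Site only touches the builder.
  private site: Site = { id: 1, name: "Test Site", isTeam: false, locale: "en" };

  withName(name: string): this {
    this.site = { ...this.site, name };
    return this;
  }

  asTeam(): this {
    this.site = { ...this.site, isTeam: true };
    return this;
  }

  build(): Site {
    // Return a copy so later builder calls cannot mutate an already-built object.
    return { ...this.site };
  }
}

// A test names only what matters to it; id and locale fall back to the defaults.
const teamSite = new SiteBuilder().withName("Acme Engineering").asTeam().build();
```

If `Site` later gains a required field, only the default inside the builder changes, and existing tests keep compiling.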

Our hundred-plus Stack Exchange sites and Teams instances share a lot of code, though the content and design may be different. Those differences are controlled by site settings, a smart configuration store that can scale to tens of thousands of sites without using up too much memory. Loading those settings requires a database connection, so we needed to make some changes there as well. We had a settings mock set up for integration tests, but it was horribly intercoupled. We set up an async-context-aware injection step before most of the other code hooks so independently running tests could initialize custom mock settings without using a database. As an additional benefit, this solved a bit of flakiness we saw from tests running in parallel, as they were no longer changing the same set of mock settings.
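
As a rough analogue of settings that follow the async context, the sketch below uses Node.js's `AsyncLocalStorage` from `node:async_hooks`. This is not the .NET mechanism described above, and `getSetting`, `withSettings`, and the `theme` setting are hypothetical names. It only shows how two concurrently running tests can each see their own mock settings without sharing mutable state.

```typescript
import { AsyncLocalStorage } from "node:async_hooks";

// Hypothetical sketch: setting names and helpers are assumptions for illustration.
type Settings = Record<string, string>;

const settingsScope = new AsyncLocalStorage<Settings>();
const defaultSettings: Settings = { theme: "light" };

// Code under test reads settings through this function rather than a shared global.
function getSetting(key: string): string | undefined {
  const scoped = settingsScope.getStore();
  return (scoped ?? defaultSettings)[key];
}

// Runs a test body with its own settings; the override follows the async call chain.
function withSettings<T>(overrides: Settings, body: () => T): T {
  return settingsScope.run({ ...defaultSettings, ...overrides }, body);
}

// Simulates code that reads a setting after some asynchronous work.
async function readThemeLater(): Promise<string | undefined> {
  await new Promise((resolve) => setTimeout(resolve, 5));
  return getSetting("theme");
}

// Two "tests" running concurrently, each with its own settings scope.
const concurrentThemes = Promise.all([
  withSettings({ theme: "dark" }, readThemeLater),
  withSettings({ theme: "high-contrast" }, readThemeLater),
]);
```

Because neither scope writes to a shared object, the order in which the two bodies resume does not affect what each one reads.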

We also want to be able to test our front-end code. For that, we implemented Jest, one of the most popular testing libraries in the JavaScript/TypeScript ecosystem. There are other solid testing libraries for JS/TS, most notably Mocha, but we’ve had good experiences using Jest in our Stacks editor, so we decided to bring it in for all of our front-end code. Jest is feature-rich, fairly opinionated, and batteries included, so we could get started with it quickly.

At this point, we can start writing tests. Based on these changes, we set up a testing cookbook in our Stack Overflow for Teams instance with details on how to write good unit and integration tests, mock data from databases, and cache testing data. As a proof of concept, we created our first real-world test using in-memory dependencies. Now we just have to write more tests.

Good tests make for better code

Writing a good unit test is not all that hard. Writing good, testable code is. The best way to achieve testable code is to write pure functional code without dependencies. That’s not always possible in a modern web application. The second-best way is to inject dependencies deliberately. In the past, we accessed a lot of objects from static contexts instead of passing them deliberately, which made it very difficult to create a testable version of that code.
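
A small TypeScript sketch of deliberate injection follows. The `Clock` interface and the `isQuestionStale` function are hypothetical examples, not code from our codebase. A version that called `Date.now()` directly would be reading from a static context, and its result would change depending on when the test runs; passing the clock in lets a test pin the time.

```typescript
// Hypothetical sketch: `Clock` and `isQuestionStale` are illustrative names only.
interface Clock {
  now(): Date;
}

const ONE_YEAR_MS = 365 * 24 * 60 * 60 * 1000;

// The dependency (the current time) is passed in rather than read from a static context.
function isQuestionStale(lastActivity: Date, clock: Clock): boolean {
  const ageMs = clock.now().getTime() - lastActivity.getTime();
  return ageMs > ONE_YEAR_MS;
}

// In a test, a fixed clock makes the outcome deterministic.
const fixedClock: Clock = { now: () => new Date("2024-01-01T00:00:00Z") };
const staleResult = isQuestionStale(new Date("2022-06-01T00:00:00Z"), fixedClock);
const freshResult = isQuestionStale(new Date("2023-12-01T00:00:00Z"), fixedClock);
```

Production code would pass a clock backed by the system time, while the function itself stays free of hidden dependencies.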

With this, we’re committing to testability, to writing resilient code, and, more importantly, to moving quickly to implement new features that our customers and community want. We’re growing as well, which means our code quality becomes ever more important. Automated unit tests and testable code help in all these areas.