Quantum computing may be the next big breakthrough in computing, but the general conception of it is still in the realm of hype and speculation. Can it break every known crypto algorithm? Can it design new molecules that will cure every disease? Can it simulate the past and future so well that Nick Offerman can talk to his dead daughter?

We spoke with Dr. Jeannette (Jamie) Garcia, Senior Research Manager of Quantum Applications and Software at IBM Quantum, about their 433-qubit quantum computer and what the real-life applications of quantum computing are today.

The Q&A below has been edited for clarity. If you’d like to watch the full conversation, check out the video of our conversation.

Ryan Donovan: How did you get into quantum computing?

Jamie Garcia: I am actually a chemist by training—I hold a PhD in chemistry. I came to IBM because I was very interested in some of the material science work that was going on there at the time and started doing some research in that space. I think most experimentalists will tell you if you get a weird outcome from an experiment, one of the first things you need to do is try to figure out why, and that involves a lot of the theory. I was running down the hallway to talk to my computational colleagues to help elucidate what was going on in my flask that I couldn't actually see.

As a part of that process, I got very interested in computation as a whole, in the simulation of nature, and in trying to use computation toward that end. I realized that there were some real challenges with using classical computers for certain reactions. I would ask my colleagues and they would tell me it was impossible. And I was like, why?

RD: Can you give an example?

JG: For me, they were surprising examples—small molecules that were really reactive.

You think of radicals, for example, which wreak all sorts of havoc in our bodies but also turn up in batteries, which I was studying at the time. The reaction was so high-energy, and so many different things had to happen in the chemistry, that classical computers couldn't model it even though the molecules were small. They're just O2-sized.

When I was at Yorktown Heights one day walking down the hallway, I saw one of my colleagues had a poster and it had chemistry on it, which caught my eye. You don't see that all that often at IBM. It turns out that he was using quantum computers to study a certain property of a molecule.

It stopped me in my tracks, and I realized this is a whole new tool for chemistry. Now we've expanded beyond chemistry. We're looking at all sorts of different things, but that was what got me hooked and interested from the very beginning.

RD: We've talked to a few folks in quantum computing, but I think it's valuable to kind of get the basics here. What exactly is a qubit?

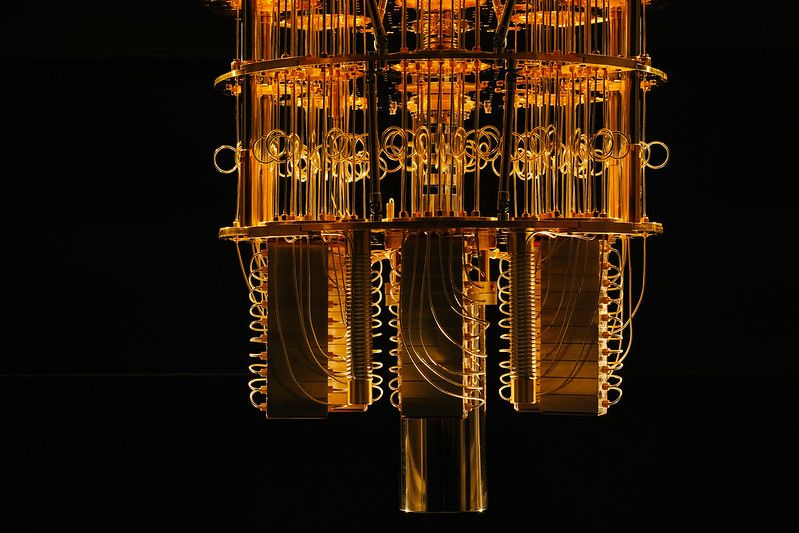

JG: A qubit is our analog to a classical bit. At IBM we use superconducting qubits. These have to be cooled down to around 15 millikelvin. You may have seen photos of our big dilution refrigerators that cool our qubits down to that level. They're made out of superconducting materials.

When you program a qubit, you're using the material properties of those superconductors to move electrons into different energy states. That's basically what allows you to program a quantum computer. One of the biggest challenges is keeping the qubits in those states, and I have a feeling we'll talk about that.

RD: Especially with your material science background. That seems like a big part of the ball game.

JG: But they're fundamentally a kind of different beast too, because we're now using and leveraging quantum mechanics to program the qubits and the quantum computers and be able to perform algorithms on them. So it has a different flavor to it than a classical bit.

In fact, you can use quantum mechanical properties such as superposition and entanglement. Those are new knobs to turn when you're thinking about algorithms. In certain instances, it can be complementary to classical devices. But it really is a whole new area to explore.

RD: I've heard that qubits aren’t exactly stable. You have them supercooled and are trying to keep them in a particular state. To produce one qubit, do you need a lot of redundancy and error correction?

JG: When we're talking about 433 qubits, they're all on one chip, right? So when you program them, a lot of times we leverage two-qubit gates, where you need to entangle two qubits together.
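To make "entangling two qubits" concrete, here is a minimal pure-Python statevector sketch. It is not IBM's or Qiskit's tooling; `apply_h` and `apply_cnot` are hypothetical helper names, and treating qubit 0 as the least significant bit of the basis-state index is an ordering assumption chosen for this example. A Hadamard on one qubit followed by a two-qubit CNOT gate turns |00> into a Bell state.

```python
import math

def apply_h(state, qubit):
    """Apply a Hadamard gate to `qubit` of a statevector (list of amplitudes)."""
    new = state[:]
    s = 1 / math.sqrt(2)
    for i in range(len(state)):
        if not (i >> qubit) & 1:          # visit each pair (i, j) differing only in `qubit`
            j = i | (1 << qubit)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new

def apply_cnot(state, control, target):
    """Flip `target` on every basis state where `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new

# Start in |00>, put qubit 0 into superposition, then entangle it with qubit 1.
bell = apply_cnot(apply_h([1, 0, 0, 0], 0), control=0, target=1)
probabilities = [abs(a) ** 2 for a in bell]
print(probabilities)  # roughly [0.5, 0, 0, 0.5]
```

Measuring this state yields 00 or 11 with probability 1/2 each and never 01 or 10; that perfect correlation between the two qubits is the entanglement a two-qubit gate creates.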

You set it up and map your circuit onto the qubits in a very specific way in order to get an answer. Now, the stability piece that you're referring to—qubits are inherently sensitive. We have to cool the qubits that we use down to 15 millikelvin because of exactly what you said.

You're trying to basically hold the qubit in this state for as long as possible so you can run the calculation that you need to run. Basically, you need to have enough time to perform the gate operations for your circuit.

Qubits are susceptible to noise. Sometimes we know where that noise comes from and sometimes we don't. When we think about how we arrange the qubits on the chip, we do it in a way that minimizes noise as much as possible. We use what's called a heavy-hex architecture, which limits crosstalk between qubits so that coherence times are as long as possible. That gives you enough time to run the circuits and do a practical calculation within hours, not a lifetime.

We've also developed a lot of other techniques to manage the noise. Error correction is something our teams are working toward. We're developing the theory for certain error-correcting codes, and actually running those codes will require a fault-tolerant device with error rates low enough to support them.

But we're also looking at error mitigation, which leverages classical post-processing methods and can capture the noise whether or not we know where it comes from. That lets us account for the noise and correct for it, so we can get results that may be as accurate as those we'd get in an error-corrected regime.

There's active research ongoing and software tools that are being developed so that we can leverage these techniques as they are developed in real time and use them for our applications research and run algorithms and circuits that are interesting to us.

One of the things we've recently released, which you can access through Qiskit Runtime, is something called probabilistic error cancellation. Essentially, when you run a circuit, it also runs the inverse of certain parts of the circuit, and that way you're effectively able to learn where the noise is. Then the post-processing divides it into smaller circuits, and you can pull it all back together and account for the noise.
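The idea behind probabilistic error cancellation can be illustrated with a toy, fully classical simulation. This is a sketch of the standard quasi-probability formulation, not Qiskit Runtime's implementation or API; `pec_bit_flip_demo` is a hypothetical name. It assumes a single qubit whose only noise is a bit flip with a known probability `p`, and that the inserted correction gates are themselves noiseless. The inverse of the noise channel is written as a mixture of "do nothing" and "apply X" with one negative weight; sampling from it and reweighting each shot gives an unbiased estimate of the noise-free expectation value.

```python
import random

def pec_bit_flip_demo(p, shots, seed=0):
    """Compare noisy and PEC-mitigated estimates of <Z> for an ideal |0> state.

    Toy model (assumption): noise is a bit flip with probability p, and the
    inverse channel N^-1 = a*I + b*X is applied via quasi-probability sampling.
    """
    rng = random.Random(seed)
    a = (1 - p) / (1 - 2 * p)   # weight on the identity
    b = -p / (1 - 2 * p)        # negative weight on X
    gamma = abs(a) + abs(b)     # sampling overhead, equal to 1 / (1 - 2p)

    noisy_sum = 0.0
    mitigated_sum = 0.0
    for _ in range(shots):
        bit = 0                          # ideal circuit prepares |0>, so ideal <Z> = +1
        if rng.random() < p:             # hardware noise: flip the bit
            bit ^= 1
        noisy_sum += 1 - 2 * bit         # Z eigenvalue: +1 for 0, -1 for 1

        # Sample I or X with probability |weight| / gamma; remember the weight's sign.
        if rng.random() < abs(b) / gamma:
            corrected, sign = bit ^ 1, -1
        else:
            corrected, sign = bit, 1
        mitigated_sum += gamma * sign * (1 - 2 * corrected)

    return noisy_sum / shots, mitigated_sum / shots

noisy, mitigated = pec_bit_flip_demo(p=0.1, shots=200_000)
print(noisy, mitigated)  # noisy is near 0.8; mitigated is near the ideal 1.0
```

The factor `gamma` shows the price of this approach: each shot is reweighted by `gamma`, so the variance grows and more shots are needed as the noise gets stronger.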

There are opportunities for machine learning, certainly. We're thinking very seriously about how AI and quantum intersect, especially since we just announced our System Two and our plans for it. We're thinking very carefully about how all these things will play together and where AI can help quantum and where quantum can help AI.

RD: What's the rough equivalent of 433 qubits to classical computing?

JG: This is a tough question to answer. We think of the qubits in terms of states. If you do a rough back-of-the-envelope calculation, people will usually say it's two to the n. So two to the 433 [states] is a lot. Huge. I think two to the 275 is more than the number of atoms in the universe. So it's absolutely massive.
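The back-of-the-envelope arithmetic above is easy to check with Python's arbitrary-precision integers. The figure of about 10^80 atoms is a commonly cited order-of-magnitude estimate for the observable universe, used here as an assumption; `basis_state_count` and `qubits_to_exceed` are hypothetical helper names.

```python
ATOMS_ESTIMATE = 10 ** 80  # commonly cited rough estimate for the observable universe

def basis_state_count(n_qubits):
    """Number of computational basis states for n qubits: 2**n."""
    return 2 ** n_qubits

def qubits_to_exceed(threshold):
    """Smallest n such that 2**n exceeds `threshold`."""
    n = 0
    while 2 ** n <= threshold:
        n += 1
    return n

print(len(str(basis_state_count(433))))         # 131 (decimal digits in 2**433)
print(basis_state_count(275) > ATOMS_ESTIMATE)  # True
print(qubits_to_exceed(ATOMS_ESTIMATE))         # 266
```

By this estimate, 266 qubits already label more basis states than there are atoms, which is consistent with the 275-qubit figure quoted in the interview.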

But there's a lot of nuance that goes into that, especially when we're talking about actually programming a quantum computer and using it to look at a chemistry problem or a problem in finance or anything like that. In addition to that, you have to take into account the noise that you have present in the system.

So it's hard to say what the computing power of a 433-qubit device is today. If you project out to a time when error rates are as close to zero as possible, that's where you start talking about this two to the n and harnessing the power of the universe. You know, all these things.

That's the potential that it brings to us in terms of compute.

RD: That two to the n is what exactly?

JG: It's basis states.

You can use molecules as an example. Water might use somewhere around 14 qubits. If you have 14 qubits, that's two to the 14 basis states, on the order of 10 to the four, right?

You can calculate it out that way. But again, there's a lot of nuance here. We need to carefully consider the types of problems that quantum will be good for. It's not necessarily all the same problems that you can think of classical being good for. That's my caveat, but it kind of gives you a rough idea.
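One way to see what "two to the n" means for a classical machine is the memory needed to store a full statevector, one complex amplitude per basis state. This is a sketch assuming 16 bytes per amplitude (complex128); full statevector simulation is only one classical approach, and other methods can do much better for some circuits. `statevector_bytes` is a hypothetical helper name.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store all 2**n amplitudes as complex128 (8-byte real + 8-byte imaginary)."""
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (14, 30, 50):
    print(f"{n} qubits: {2 ** n:,} amplitudes, {statevector_bytes(n):,} bytes")
# 14 qubits: 16,384 amplitudes, 262,144 bytes (256 KiB)
# 30 qubits: 1,073,741,824 amplitudes, 17,179,869,184 bytes (16 GiB)
# 50 qubits: 1,125,899,906,842,624 amplitudes, 18,014,398,509,481,984 bytes (16 PiB)
```

A 14-qubit statevector, the rough size mentioned for water, fits easily in memory, but every added qubit doubles the cost, which is why this brute-force approach runs out of road at a few dozen qubits.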

RD: Some cryptographic algorithms are designed to be quantum safe, while algorithms like Shor's are uniquely suited to quantum computers. Why is that?

JG: Shor's is an algorithm that sits in that long-term, error-corrected regime, right? You would need error correction to run it. A lot of the famous algorithms you've heard of that show exponential speedup with quantum computers typically fall in that regime. There are some that are famous for chemistry, like quantum phase estimation.

That said, we're doing a lot to bring algorithms closer to the near term. Error mitigation, and maybe even error mitigation combined with error correction, will allow us in these early days to start solving problems that I don't think we would have expected to solve this early.

Shor's algorithm definitely leverages quantum devices that have these sorts of ancilla qubits. If you do a back-of-the-envelope calculation for what you would need to run Shor's algorithm and crack RSA or something like that, you'll see numbers in the millions of qubits. You have to account for the overhead that comes with error correction.
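It helps to know which part of Shor's algorithm actually needs the quantum computer. The quantum step finds the order r of a number a modulo N (the smallest r with a^r ≡ 1 mod N); turning that order into factors is ordinary classical arithmetic. The sketch below brute-forces the order classically, which takes exponential time in the number of bits, as a stand-in for the quantum step; the function names are hypothetical and the inputs are toy-sized.

```python
from math import gcd

def classical_order(a, n):
    """Smallest r > 0 with a**r % n == 1. Brute force: the step Shor's algorithm speeds up."""
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Classical post-processing in Shor's algorithm: factors from the order of a mod n."""
    r = classical_order(a, n)
    if r % 2 == 1:
        return None                     # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                     # a**(r/2) == -1 mod n: retry with a different a
    return gcd(half - 1, n), gcd(half + 1, n)

print(classical_order(7, 15))    # 4
print(factor_from_order(7, 15))  # (3, 5)
print(factor_from_order(2, 21))  # (7, 3)
```

For a 2048-bit RSA modulus this brute-force loop is hopeless, which is exactly the gap a large, error-corrected quantum computer running order finding would close.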

The asterisk is that we're doing things earlier than we thought, and I think that's part of the reason we're talking about quantum safe now. We don't know exactly what the timeline is, but we do have methods to address this that are available today. For example, our zSystems are already quantum-safe systems. It's definitely something to start considering now. If you had asked me the same question two years ago, I would've said that's so far away.

And now I'm like, hmm. Start planning now.

RD: What other tasks or applications is quantum computing suited to?

JG: We think about it in three big buckets. The simulation of nature is one of them. That includes not just molecular simulations; physics and material science fall into this category too. This space is interesting because nature is quantum mechanical, so if you're leveraging a device that is also quantum mechanical, there's an obvious connection. In addition, there have been theoretical proofs showing that a greater-than-polynomial speedup should be possible with quantum computers for certain problems, such as dynamics, energy states, ground states, and things of that nature.

The second category is, broadly, mathematics and processing data with complex structures. This is where quantum machine learning comes in. Shor's algorithm and factoring, which we talked about, also fit into this category. There are quantum machine learning algorithms whose analyses suggest that an exponential speedup should be possible in certain cases.

We focus on these two areas in particular because we think they hold a lot of promise: they have this greater-than-polynomial potential associated with using a quantum computer. Those are really obvious areas to look at.

The last category is search and optimization. Grover's algorithm falls into this category. For these areas we don't necessarily have theoretical proofs yet of a super-polynomial or exponential speedup, but we know they probably promise somewhere around a quadratic speedup, maybe more. We're still researching and looking, so you never know what you're going to find.
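The quadratic speedup of Grover's algorithm can be seen in a small pure-Python statevector simulation. A classical search over N unsorted items needs about N/2 queries on average; Grover's algorithm needs about (π/4)·√N oracle calls. `grover_success_probability` is a hypothetical helper name, and the simulation assumes a single marked item.

```python
import math

def grover_success_probability(n_qubits, marked):
    """Simulate Grover search over 2**n items with one marked item.

    Returns the number of Grover iterations used and the probability
    of measuring the marked item afterward.
    """
    size = 2 ** n_qubits
    amps = [1 / math.sqrt(size)] * size            # uniform superposition (Hadamards on all qubits)
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        amps[marked] = -amps[marked]               # oracle: phase-flip the marked item
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]        # diffusion: inversion about the mean
    return iterations, amps[marked] ** 2

iterations, prob = grover_success_probability(n_qubits=10, marked=123)
print(iterations, prob)  # 25 oracle calls, success probability above 0.99
```

For 1,024 items that is 25 oracle calls versus roughly 512 classical guesses on average; the gap grows as √N, which is quadratic rather than exponential.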

There are certain algorithms, like amplitude estimation and amplification, that we think could act as accelerators for the other two areas I talked about. Regardless of what kind of speedup they give, we would expect that they could still help in those areas as well.

You can imagine it's almost two to the n number of use cases that map onto those areas and it encompasses a lot of different things. We're exploring a lot of different areas with partners and coupling it and tying it to things that are really valuable and hard classically.

That's key, right? If something's really easy classically, you could ask why you'd look at quantum for it. Problems that are hard classically are where we think quantum can lend some kind of advantage or speedup. In the long run, those are the areas we're exploring.

RD: Speaking of hypothetical use cases, have you seen the TV show Devs?

JG: No, what was the use case?

RD: Simulating the past and future.

JG: Oh my goodness. Okay... Well, there is prediction, right?

RD: Sure. I mean, simulating nature, right?

JG: No, it's not that far.

RD: Okay. Oh, no.

Because you are helping people process quantum jobs, are there any adjustments they need to make for their algorithms or data to be suitable for quantum computing?

JG: It depends on how you want to use quantum computers, right? As we point toward the next generation of quantum-centric supercomputing centers, where classical HPC sits right next to a quantum device, a lot of our discussions are about how you best split the workloads between them.

There are a lot of things we've been thinking about in terms of how you would ideally approach a problem. How would you set it up so that the right parts of the problem are addressed classically and other pieces with a quantum computer?

But the algorithms we use and the circuits we run are inherently different from classical ones. Again, it really comes down to how you divvy up the problem, and which pieces you want to put where. At a very high level, that’s what would need to be taken into consideration.
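One common way to divide a problem between classical and quantum resources is a variational loop, in which a quantum device estimates expectation values and a classical optimizer updates circuit parameters. The interview does not name a specific algorithm, so this is an illustrative swap-in, not IBM's workflow. In this sketch the "quantum" call is replaced by its exact analytic value for a one-qubit Ry(θ) circuit, ⟨Z⟩ = cos θ, and the gradient uses the parameter-shift rule; all function names are hypothetical.

```python
import math

def quantum_expectation(theta):
    """Stand-in for a QPU call: <Z> for the state Ry(theta)|0> equals cos(theta).

    On hardware this would be estimated from repeated circuit measurements.
    """
    return math.cos(theta)

def parameter_shift_gradient(theta):
    """Parameter-shift rule: the gradient from two extra circuit evaluations."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2

def variational_loop(theta=0.1, learning_rate=0.4, steps=100):
    """Classical gradient descent that minimizes the 'quantum' expectation value."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)  # classical update step
    return theta, quantum_expectation(theta)

theta, energy = variational_loop()
print(theta, energy)  # theta approaches pi, energy approaches the minimum of -1
```

The division of labor is the point: the quantum side only evaluates circuits, while parameter updates, convergence checks, and bookkeeping all stay on the classical side.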

Something to point out here is that quantum computers aren't big-data devices. That's an area where we think a lot can be done from the classical side. But if you want to look at something that has high complexity or high interconnectivity, or is inherently dynamic, those are the kinds of things a quantum computer handles really well.

If you were to run something on a quantum computer, you'd want to make sure you're feeding it the right circuit and using the right algorithm.

RD: Is there anything else you wanted to cover that we didn't talk about?

JG: In general, thinking about the different use cases and different areas is really important for us to do as a field, right? This is a very multidisciplinary area, and we need folks coming from all points of view, whether that's software development, engineering, or architecture, and even those who are on more of the classical side.

Learning about quantum and bringing that lens has really pushed us forward in a truly unique way for this field. It has to do with the fact that it's an emerging area. It's all hands on deck and we're all kind of learning together.