SPONSORED BY QUALCOMM

One of my favorite things to hear from clients is “You can’t do that…” Over the years, I’ve invited dozens of clients and developers to bring me a challenge. Got a piece of software you built but are certain it would never run on a smartphone? Let my team and I see what we can do.

In recent years, my team has increasingly focused on how to run computationally expensive AI on consumer devices. It began with machine learning algorithms that added effects to the images you capture, like the bokeh effect to your portrait photos or a filter on your videos. Consumers didn’t think about these features as “AI,” but in the background it required a lot of smart planning to execute this magic without overtaxing the supercomputer that sits in your pocket.

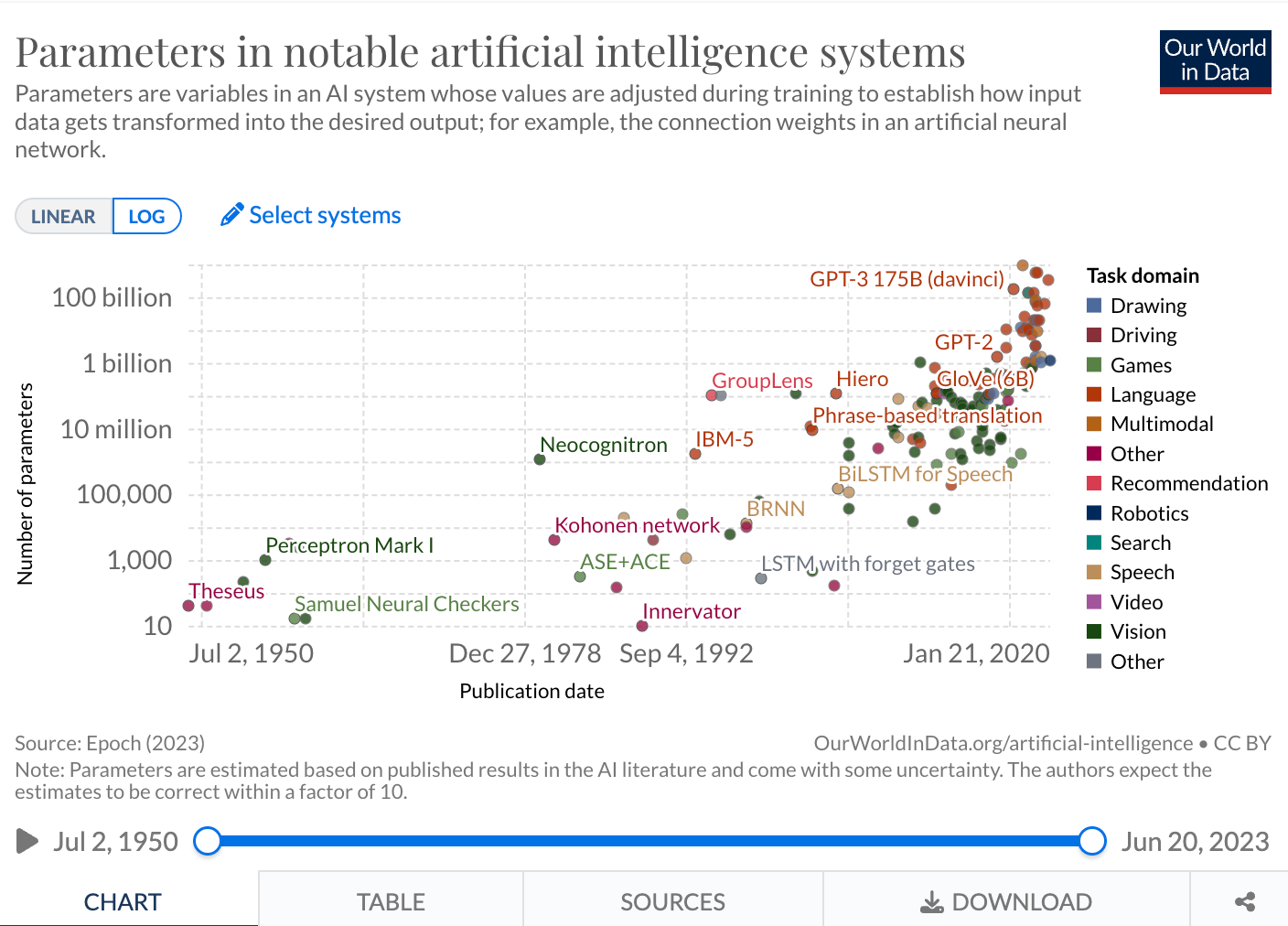

Of course, you ambitious programmers had to keep pushing the limits of innovation. The latest trend to capture the industry’s attention is large language models (LLM), emphasis on the LARGE. What started with millions of parameters quickly grew to billions. ChatGPT has around 175 billion parameters. As these models scale up, they require more memory and compute, both during training and during inference, meaning the time at which its capabilities are put to work.

My title may say product management, but I come from a technical background. I cut my teeth in the 90s working in C and Assembly. I built a distributed embedded security system managed by a Linux workstation. After that I did some literal rocket science at NASA’s Jet Propulsion Lab. And for the last six years I’ve been with Qualcomm Technologies, Inc., where I helm product management responsibilities for the software enablement of various Hexagon DSP cores and NPU cores in all devices with Qualcomm technologies.

Over the last year, in a series of demos, my team has demonstrated how you can quantize and accelerate these LLM and text-to-image AI models so that they run locally on your mobile device. We got Stable Diffusion, ControlNet, and Llama 2 to work so far, and we’re working on other models in our pipeline. Not only is this a fun little feat, it also allows for a big improvement in speed—a critical factor when it comes to adoption of a mobile app.

Now, I love building these demos, but the real point of this work isn’t just to show off toy examples. It’s to demonstrate what developers like you can do. If you have been thinking about adding GenAI capabilities to your own apps and services but weren’t sure how, read on. I’ll break down some of the tools and techniques we use to fit these models on a smartphone and explain how you can play along at home.

How quantization works

Most people interact with generative models through APIs, where the computational heavy lifting happens on servers with flexible resources. That’s because these models put a heavy strain on hardware. One effective way to reduce this computational demand is to increase power efficiency through quantization. Quantization is an umbrella term that covers a lot of different techniques, but what it boils down to is a process that allows you to convert continuous infinite input values from a large set to discrete finite output values in a smaller set.

You can think of quantization through the following analogy. Someone asks you what time it is. You’d look at your watch and say “10:21” but that’s not 100% accurate. Hours, minutes, and seconds are a convention that we use in order to quantize, or approximate, the continuous variable that is time. We simplify time into discrete numbers.

Another example is capturing a digital image by representing each pixel by a certain number of bits, thereby reducing the continuous color spectrum of real life to discrete colors. For example, a black and white image could be represented with one bit per pixel, while a typical image with color has twenty-four bits per pixel (see GIF below). Quantization, in essence, lessens the number of bits needed to represent information. All digital information is quantized in some way, but as this example shows, it can be performed at several levels to lessen the amount of information that represents an item.

Getting back to AI, artificial neural networks consist of activation nodes, the connections between the nodes, a weight associated with each connection, and a bias value to affect the activation value. It is these weight and bias computations that can be quantized. Running a neural network on hardware can easily result in many millions of multiplication and addition operations. The industry standard for weights is 32-bits, which can strain mobile device hardware. But if you quantize those values to lower bit values, like 24-bit or less, that will result in faster operations and result in large computational gains and higher performance.

Besides the performance benefit, quantized neural networks also increase power efficiency for two reasons: reduced memory access costs and increased compute efficiency. Using the lower-bit quantized data requires less data movement, both on-chip and off-chip, which reduces memory bandwidth and saves significant energy. Plus, it makes the whole model smaller so it can fit on the smaller hard drives of your phone. Lower-precision mathematical operations, such as an 8-bit integer (INT8) multiply versus a 32-bit floating (FP32) point multiply, require fewer CPU cycles, thus reducing power consumption.

For our demos, we quantized Stable Diffusion and Meta’s Llama 2 so that they could run on smartphones. For Stable Diffusion, we started with the FP32 version 1-5 open-source model from Hugging Face and made optimizations through quantization, compilation, and hardware acceleration to run it on a phone powered by Snapdragon 8 Gen 2 Mobile Platform. To shrink the models from FP32 to INT8, we used the AI Model Efficiency Toolkit (AIMET), which includes post-training quantization, a tool developed from techniques created by Qualcomm AI Research.

What’s the catch of using low-bit networks? Typically, the accuracy of the quantized AI model tends to drop. Naturally, if you reduce the information contained in a parameter, the resulting mathematical calculations won’t be as precise. As with any compression techniques, many of them are lossy, in that they lose information. However, there are lossless and minimally lossy compression techniques in other fields.

As a leader in power-efficient on-device AI processing, Qualcomm constantly researches how to improve quantization techniques and solve this accuracy challenge. We are particularly interested in quantizing 32-bit floating point weight parameters to 8-bit integers in neural networks without sacrificing accuracy. Outside of our ongoing research in Bayesian deep learning for model compression and quantization, our two accepted papers at ICLR 2019 focus on the execution of low-bit AI models.

The “Relaxed Quantization for Discretized Neural Networks” paper showcases a new method that better prepares the neural network for quantization during the training phase. This allows the neural network to adapt to the quantized computations that will happen during the deployment of the model. The method produces quantized models that perform better and retain more accuracy than alternative state-of-the-art approaches.

The "Understanding Straight-Through Estimator in Training Activation Quantized Neural Nets" paper contributes to the theoretical understanding of the straight-through estimator (STE), which is widely used in quantization-aware model training. The paper proves that with a properly chosen STE, a quantized network model converges to a critical point of the training loss function, while a poor choice of STE leads to an unstable training process. The theory was verified with experiments—go check the paper and see for yourself!

Model compression (including Bayesian learning, quantization, and decomposition) is just one example of the research directions that Qualcomm AI Research is currently focusing on. Other topics include: equivariance of convolutional neural networks, audio to speech compression, machine learning for autonomous vehicles, computational photography, and model training optimized for low power devices. Our goal is to make fundamental AI research breakthroughs so that we—as well as our customers—can scale the technology across industries. Find out more about Qualcomm AI Research and see our list of published papers here.

Our state-of-the-art AIMET quantization techniques, such as Adaptive Rounding (AdaRound), were able to maintain model accuracy at this lower precision without the need for re-training. These techniques were applied across all the component models in Stable Diffusion, namely the transformer-based text encoder, the VAE decoder, and the UNet. This was critical for the model to fit on the device.

One of the things I’m most proud of is how our AIMET system is adaptive. It’s not a one-size-fits-all compression. An approach like that would create a lot of problems for many of today's AI algorithms. Instead, we do multiple passes on the model, tweaking and pruning areas where it’s safe to convert F32 to INT16 to INT8 all the way to INT4. Using an adaptive process allows us to greatly reduce the burden on your device’s memory and CPU while avoiding the introduction of any new headaches for the developer.

Now that we’ve explained how it works, I want to emphasize that many of the tools and techniques we used are open source and were built to easily fit into existing developer workflows. We open sourced the AI Model Efficiency Toolkit (AIMET) on GitHub to collaborate with other leading AI researchers and to provide a simple library plugin for AI developers to utilize for state-of-the-art model efficiency performance.

It’s not the AI, it’s the application

With all the hype surrounding AI, it’s easy to forget that it’s the applications of this technology that will win over consumers, not its raw capability.

Generative AI has some amazing promise. Imagine a role-playing game where all the dialog with computer-generated characters is crafted on the fly. Rather than a menu of options, you get an open-ended conversation that feels as sharp and responsive as talking to a person. It’s worth remembering, however, that generative AI is just one flavor of the progress we’ve been seeing in the world of neural nets and machine learning.

We talk about chatbots and conversational interfaces because we can all relate to them on a human level. But powerful AI models will be even more prevalent behind the scenes. One of my personal favorites is super resolution. Imagine a world where a streaming service is able to deliver your favorite TV show in 720p and a machine learning service running locally on your device can convert that to a 4K image. There would be huge energy savings, both for your battery and the global environment.

Our AIMET is open source and we welcome contributors. And of course, if you have been working with popular tools like PyTorch and Tensorflow, the Qualcomm AI Stack will fit right into your existing workflow.

[Ed. note: Some of this previously appeared in the Qualcomm OnQ blog:

- How on-device AI is enabling generative AI to scale [The future of AI is hybrid] | Qualcomm

- How our on-device AI leadership, global scale and ecosystem enablement are making hybrid AI a reality [The future of AI is hybrid] | Qualcomm

]

Legal Disclaimer

Snapdragon branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries. AIMET is a product of Qualcomm Innovation Center, Inc. Qualcomm AI Research is an initiative of Qualcomm Technologies, Inc.