When Stack Overflow first arrived 15 years ago, it was a huge advance for a lot of programmers. Suddenly, programmers could share knowledge about quirks, bugs, and best practices complete with code ready to copy and paste. I know plenty of engineers who visit multiple times per day. This targeted resource changed how programmers work.

There’s another change happening right now. Unless you’ve been in a coma for the past year, you know that generative AI has taken the programming world by storm. Multiple chat interfaces and plugins are generating code that is going into production systems. GitHub Copilot, ChatGPT, and more have given developers the power of nearly instant code.

But anyone thinking that these tools can make them a super software developer overnight is in for a rude awakening. Being an effective coder with a code generation (codegen) tool still requires you to be an effective coder without one. Codegen tools will undoubtedly bring new people into software development, but they’ll still need to do the work of understanding the code that their chat interfaces produce.

In this article, we’ll talk about the real gains that programmers are seeing today, while also exploring the ways that not even codegen can help an unproductive team.

How codegen can improve productivity

Codegen tools are trained on existing code. Lots and lots of existing code. They know the ways that code has been written and how it has solved problems in the past. So if you’re writing something that solves common problems—type definitions, connections to databases or SDKs, API definitions—codegen can whip that up easily.

There’s a concept in some languages called scaffolding. You build a template that compilers or other language features then use to create the actual code. Codegen tools are great at scaffolding. I asked our in-house AI tool for a template that connects to a Postgres database:

    import psycopg2
    from psycopg2 import OperationalError

    def create_conn():
        conn = None
        try:
            conn = psycopg2.connect(
                database="your_database",
                user="your_username",
                password="your_password",
                host="localhost",
                port="5432",
            )
            print("Connection to PostgreSQL DB successful")
        except OperationalError as e:
            print(f"The error '{e}' occurred")
        return conn

    connection = create_conn()

Normally, you’d search the web for the connection library for your language of choice, then copy and paste the example code and modify it. The AI serves as a more advanced copy and paste in that it tailors the code to paste to your particular problem. No more fiddling with variables and manually rewriting, which more often than not introduces a bug when we forget a closing parenthesis or semicolon.

In a blog post, two employees at Figma talk about how codegen solves the blank page problem. It doesn’t give you a finished product, but it does get you closer—not 0 to 1, but 0 to 0.5. If you have a new API passing massive JSON blobs to your TypeScript code, you can get an AI to set up the type definition in seconds, instead of manually typing it out.
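
As a rough illustration of that 0-to-0.5 starting point, here is the kind of type scaffold a codegen tool might produce for a JSON payload. It is written in Python to match the earlier snippet rather than TypeScript, and the payload shape (`id`, `name`, `tags`, `owner`) is invented for this sketch, not taken from any real API.

```python
import json
from typing import List, TypedDict


class Owner(TypedDict):
    # Hypothetical nested object in the payload
    id: int
    email: str


class Project(TypedDict):
    # Hypothetical top-level shape; a real API would differ
    id: int
    name: str
    tags: List[str]
    owner: Owner


def parse_project(raw: str) -> Project:
    """Parse a JSON string and check that the expected top-level keys exist."""
    data = json.loads(raw)
    missing = [key for key in Project.__annotations__ if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


sample = '{"id": 1, "name": "demo", "tags": ["a"], "owner": {"id": 7, "email": "x@example.com"}}'
project = parse_project(sample)
print(project["owner"]["email"])
```

The scaffold only checks that top-level keys are present; deciding which fields are optional, and what counts as a valid value, is still the part you have to work out yourself.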

If you know what you want, codegen can be a shortcut. In the same blog post, the Figma developers say that “codegen offers the most leverage when you apply it in a specific way, for your specific team, company, and workflow.” In effect, codegen does the work of documentation and example code, scaffolding them into code that applies to your particular situation.

Of course, coding was never the hard part; requirements are. Knowing what you want from software in a specific enough way that codegen can create the code is a skill in itself. If you look at my example above, I didn’t have a clear sense of my requirements, even which language to use. In my conversations with engineer friends, they’ve told me that thinking through a problem to the point where they can write effective codegen prompts helps them better understand their own requirements and the design they need. They even have local scripts that help them write more effective prompts before they spend money on LLM cycles.
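
One way to picture such a script: a small checker that refuses to build a prompt until the basic requirements are spelled out. This is a hypothetical sketch, not anyone’s actual tooling; the field names (`language`, `goal`, `inputs`, `outputs`) and the prompt wording are assumptions made for illustration.

```python
REQUIRED_FIELDS = ("language", "goal", "inputs", "outputs")  # assumed checklist


def build_prompt(spec: dict) -> str:
    """Return a codegen prompt, or raise if a requirement is missing or blank."""
    missing = [f for f in REQUIRED_FIELDS if not str(spec.get(f, "")).strip()]
    if missing:
        raise ValueError("fill in before prompting: " + ", ".join(missing))
    return (
        f"Write {spec['language']} code that {spec['goal']}.\n"
        f"Inputs: {spec['inputs']}\n"
        f"Outputs: {spec['outputs']}"
    )


spec = {
    "language": "Python",
    "goal": "connects to a Postgres database and returns the connection",
    "inputs": "host, port, database, user, password",
    "outputs": "an open connection, or None on failure",
}
print(build_prompt(spec))
```

Leaving out `language`, as in the Postgres example earlier, would raise an error here instead of letting the tool guess.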

Engineering orgs today want to create reliable software more than ever. Look at the pushes to create more and more automated unit tests, to implement test-driven development, or to get test coverage near 100%. Plenty of companies are going a step beyond automated tests: they’re bringing AI into the picture to suggest tests as you write the code. Codegen can write your tests as well, but you’ll need a clear sense of what the success and failure conditions are.
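
To make “success and failure conditions” concrete, here is a minimal sketch using Python’s built-in `unittest`. The function `parse_port` and its rules (ports must be integers from 1 to 65535) are made up for this example; the point is that each test pins down one condition you had to decide on before any code, generated or not, could be judged correct.

```python
import unittest


def parse_port(value: str) -> int:
    """Hypothetical function under test: convert a string to a valid TCP port."""
    port = int(value)  # raises ValueError for non-numeric input
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


class ParsePortTests(unittest.TestCase):
    def test_valid_port_succeeds(self):
        # Success condition: a numeric string in range is returned as an int
        self.assertEqual(parse_port("5432"), 5432)

    def test_out_of_range_fails(self):
        # Failure condition: values outside 1-65535 are rejected
        with self.assertRaises(ValueError):
            parse_port("70000")

    def test_non_numeric_fails(self):
        # Failure condition: non-numeric input is rejected
        with self.assertRaises(ValueError):
            parse_port("postgres")


# Run the suite programmatically so the script does not exit afterwards
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParsePortTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```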

These AIs can do more than just create code; they can also understand it. If you’re trying to get up to speed on a code base, you can pass the code to an AI chatbot and have it tell you how the code works. If you don’t have reliable documentation on your internal code, codegen is a nice shortcut. Once you have the answers, you can add them to your internal knowledge base so other people can learn from your research.

But AI responses aren’t completely reliable

Being able to complete a coding project faster using AI is great. But it doesn’t solve certain issues, and introduces thorny new ones. Hallucinations are a well-known side effect of LLMs and their chatbot interfaces. Whether LLMs have knowledge or are merely stochastic parrots is up for debate. While they can give very authoritative pieces of code or answers to coding questions, they can also make things up from whole cloth. One coder found ChatGPT suggested that she use features of libraries that did not exist.

For that reason, developers have been slow to trust AI chatbots. In our 2023 Developer Survey, only 3% of those surveyed highly trusted the output of AI tools, with one quarter of respondents not trusting them. Some may say that a degree of distrust toward any code you come across (even your own) is healthy, but the fact is that plenty of people will take AI’s word for it. That’s a recipe for security vulnerabilities: it happened with one of the most popular code snippets on Stack Overflow, and it’ll happen with generated code too.

Researchers from Purdue University found that ChatGPT came up short when compared with human-created answers on Stack Overflow. They found that, when asked a set of 517 questions from Stack Overflow, ChatGPT got less than half of them correct. The research found that, in addition to being often wrong, 77% of the answers were overly verbose. But users preferred those answers about 40% of the time due to their comprehensiveness and articulate writing style.

That’s dangerous for anyone not double-checking their answers. The easy errors to catch are syntax errors, and those aren’t the mistakes that codegen tools make. The tools don’t understand the context of the questions, so they may end up making conceptual mistakes, focusing on the wrong part of the question, or using wrong or nonexistent APIs, libraries, or functions.
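
One cheap guard against invented APIs is to confirm that a suggested name actually exists before building on it. The helper below is a minimal sketch using only the standard library; `api_exists` is a name chosen for this example, and passing this check says nothing about whether the function does what the AI claimed.

```python
import importlib


def api_exists(module_name: str, attr_path: str) -> bool:
    """Return True if module_name imports and attr_path resolves inside it."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    # Walk dotted paths such as "path.join" one attribute at a time
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


print(api_exists("json", "loads"))       # a real function
print(api_exists("json", "load_fast"))   # an invented name
print(api_exists("os", "path.join"))     # a nested attribute
print(api_exists("not_a_real_module_xyz", "anything"))
```

A check like this catches the nonexistent-function case only; conceptual mistakes and misread requirements still need a human reviewer.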

In the end, participants mostly preferred the Stack Overflow answers for their conciseness and accuracy. While most LLMs have used Stack Overflow as part of their training data, the research shows that the originals are generally more accurate than the AI output.

If you’re using AI-generated code, you’ll need to work harder on documentation. An organization’s long-term success can be hamstrung by tech debt, and a poorly written or poorly understood code base is a form of technical debt. A junior engineer who turns in their project five days early but can’t explain how it all works in the documentation is actually planting a time bomb for future productivity losses.

Features added to your codebase with the help of an AI system also carry legal and regulatory risks. At this point, cases are working their way through the judicial system to determine if an AI system trained on open-source code is allowed to produce copies or close lookalikes of that code without proper attribution and licensing.

Imagine a scenario in which your organization has the happy outcome of being acquired or going public. The investors or acquirers performing due diligence will want guarantees that any intellectual property they are purchasing is wholly owned and properly copyrighted and licensed by your firm. If large chunks of your code base were not written by your engineers, but rather by AI systems trained on a vast swathe of publicly available code, it will be much harder to satisfy investors’ concerns.

The long-term health of your tech stack isn’t just dependent on how quickly engineers can file their PRs. It’s also essential that different parts of your organization are able to clearly communicate and collaborate, working to find the big-picture solutions to architectural challenges that will lay the bedrock for future expansion and growth.

One solution to these challenges is tying your AI system to a dataset you know and trust as your ground truth. At Stack Overflow, for example, we use retrieval augmented generation (RAG) to ensure that queries sent to OverflowAI look only at information from our public platform or from the Teams instance the user belongs to. By limiting the surface area the AI uses for inference, you reduce the chance of errors and hallucinations. You also enable the AI responses to include citations, allowing the developer to understand the provenance and license of the code it’s creating.
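
The retrieval half of that idea can be sketched in a few lines. This is a toy illustration, not how OverflowAI works: the corpus, the `license` field, the word-overlap scoring, and the function names are all assumptions, and a real system would use a search index or embeddings and then pass the prompt to an LLM.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Doc:
    source: str   # where the text came from (hypothetical identifiers)
    license: str  # license attached to that source
    text: str


# Stand-in for a trusted knowledge base; contents are invented
TRUSTED_DOCS = [
    Doc("team-wiki/db-setup", "internal", "Use psycopg2 connect with host and port to reach Postgres"),
    Doc("team-wiki/ci", "internal", "The CI pipeline runs unit tests on every commit"),
    Doc("public-qa/1234", "CC BY-SA", "Close the database connection in a finally block"),
]


def retrieve(query: str, docs: List[Doc], k: int = 2) -> List[Doc]:
    """Rank docs by how many query words they share; drop docs with no overlap."""
    words = set(query.lower().split())
    scored = [(len(words & set(d.text.lower().split())), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_grounded_prompt(query: str, docs: List[Doc]) -> str:
    """Build a prompt that restricts the model to the retrieved, cited context."""
    context = "\n".join(f"[{d.source} | {d.license}] {d.text}" for d in docs)
    return f"Answer using only the context below and cite sources.\n{context}\nQuestion: {query}"


hits = retrieve("how do I connect to postgres", TRUSTED_DOCS)
print(build_grounded_prompt("how do I connect to postgres", hits))
```

Because every retrieved snippet carries its source and license into the prompt, the answer can cite where its material came from, and a query with no match in the trusted set returns nothing rather than a guess.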

AI and the conversational interfaces that it enables may ultimately be a game-changing new user interface style. Their ability to find and summarize information is unmatched. But having the original information available for reference, especially if it’s not public information, will be the key to productivity. And real organization-wide productivity gains don’t just come from faster code; they come from improving how your organization works together.

Real gains will come from improving the org as a whole, not just faster code

There’s a reason why any organization talking about how productive it is talks about development velocity, developer experience, and platform engineering. These are all big umbrella efforts that improve the productivity of an organization as a whole, not just the productivity of one developer. A software development team produces software together, so their productivity should be measured together. There are a few things that effective organizations are all working on.

Automate wherever possible

First of all, they are looking to automate any manual processes that they can. They automate their software delivery pipeline with an effective CI/CD process that takes committed code from build to deploy with minimal effort. They automate as much of their testing as they can. They focus on platform engineering to make the tools and libraries everyone uses better. In fact, codegen’s productivity gains can be seen as part of this: automating boilerplate code so coders can focus on solving more important problems.

Share knowledge company-wide

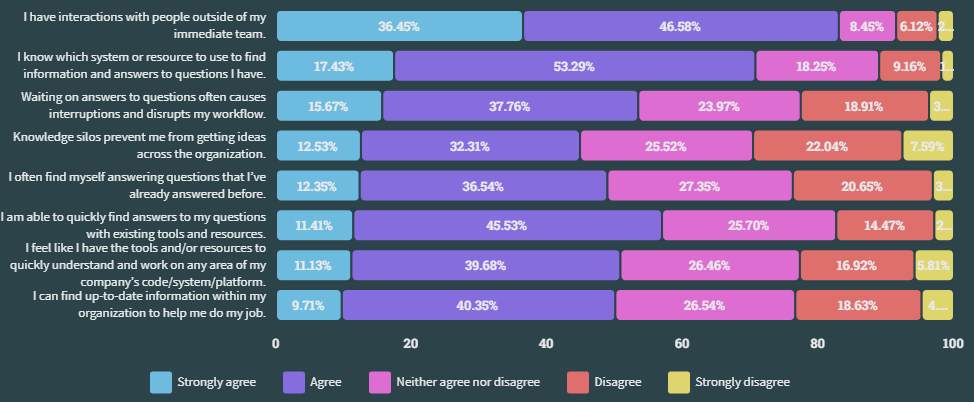

Second, they share knowledge with everyone in the company who needs it. In knowledge-heavy work like writing code, this is even more important. Stack Overflow is a great knowledge-sharing resource for general coding questions, but for questions about a proprietary codebase, devs need to turn to their teammates. Our survey found that about 50% of respondents agreed that waiting on answers caused interruptions in their flow. A solution like Stack Overflow for Teams that preserves and surfaces knowledge across silos can reduce these interruptions.

People first, technology second

Third, effective organizations think a lot about the process of building software, not just writing code. Bad processes are easy to spot, and we’ve seen plenty of people complain about scrum and Agile. But these processes were created to improve the lives of developers, so successful organizations ensure that the process works well, not that it’s followed rigidly. They also have good design, planning, and requirements processes. We’ve said that requirements are the hard part, and requirement volatility might be the defining problem of software engineering. You can’t hit a target if someone keeps moving it.

Empower, don’t micromanage

Finally, effective organizations focus on enabling their developers to do work, rather than monitoring and micromanaging them. The days of negative management, in which employees are seen as troublemakers who need to be guided and controlled and management exists to reduce their negative impact, are over. Managers look to support their employees, give them the breaks and safety that they need, and get out of their way when they work.

There’s more to productivity than code

Faster code created by AI tools may help individuals be more productive. But real gains will come from improving your whole organization. You’ll need to know what you’re doing when you write code—whether manually or through codegen—or you could be doing more harm than good.
