There are many variants of LoRA you can use to train a specialized LLM on your own data. Here’s an overview of all (or at least most) of the techniques that are out there.

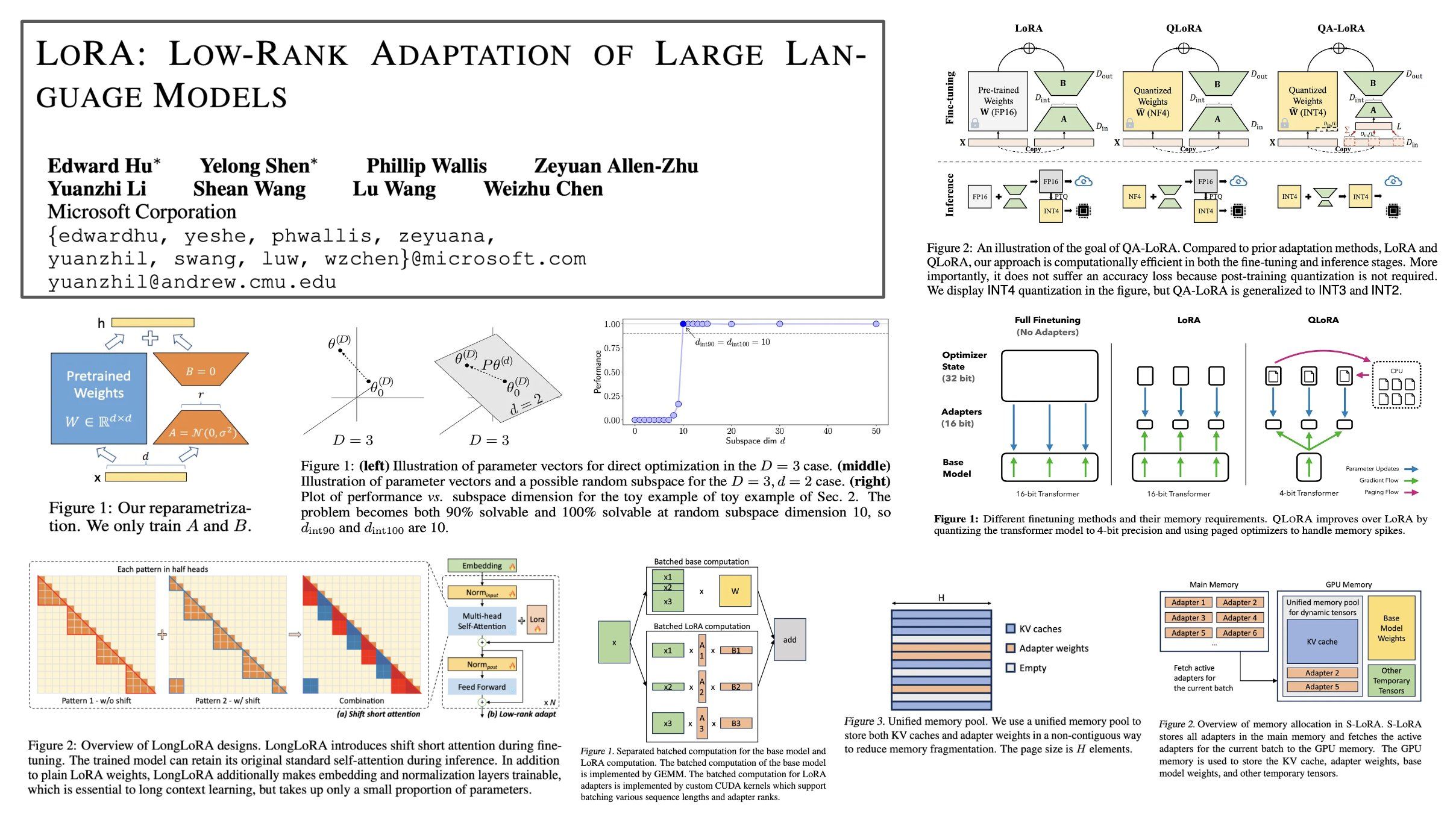

LoRA models the update derived for a model’s weights during finetuning with a low rank decomposition, implemented in practice as a pair of linear projections. LoRA leaves the pretrained layers of the LLM fixed and injects a trainable rank decomposition matrix into each layer of the model.

QLoRA is (arguably) the most popular LoRA variant and uses model quantization techniques to reduce memory usage during finetuning while maintaining (roughly) equal levels of performance. QLoRA uses 4-bit quantization on the pretrained model weights and trains LoRA modules on top of this. In practice, QLoRA saves memory at the cost of slightly-reduced training speed.

QA-LoRA is an extension of LoRA/QLoRA that further reduces the computational burden of training and deploying LLMs. It does this by combining parameter-efficient finetuning with quantization (i.e., group-wise quantization applied during training/inference).

LoftQ studies a similar idea to QA-LoRA—applying quantization and LoRA finetuning on a pretrained model simultaneously.

LongLoRA attempts to cheaply adapt LLMs to longer context lengths using a parameter-efficient (LoRA-based) finetuning scheme. In particular, we start with a pretrained model and finetune it to have a longer context length. This finetuning is made efficient by:

- Using sparse local attention instead of dense global attention (optional at inference time).

- Using LoRA (authors find that this works well for context extension).

S-LoRA aims to solve the problem of deploying multiple LoRA modules that are used to adapt the same pretrained model to a variety of different tasks. Put simply, S-LoRA does the following to serve thousands of LoRA modules on a single GPU (or across GPUs):

- Stores all LoRA modules in main memory.

- Puts modules being used to run the current query into GPU memory.

- Uses unified paging to allocate GPU memory and avoid fragmentation.

- Proposes a new tensor parallelism strategy to batch LoRA computations.

Many other LoRA variants exist as well…

- LQ-LoRA: uses a more sophisticated quantization scheme within QLoRA that performs better and can be adapted to a target memory budget.

- MultiLoRA: extension of LoRA that better handles complex multi-task learning scenarios.

- LoRA-FA: freezes half of the low-rank decomposition matrix (i.e., the A matrix within the product AB) to further reduce memory overhead.

- Tied-LoRA: leverages weight tying to further improve the parameter efficiency of LoRA.

- GLoRA: extends LoRA to adapt pretrained model weights and activations to each task in addition to an adapter for each layer.