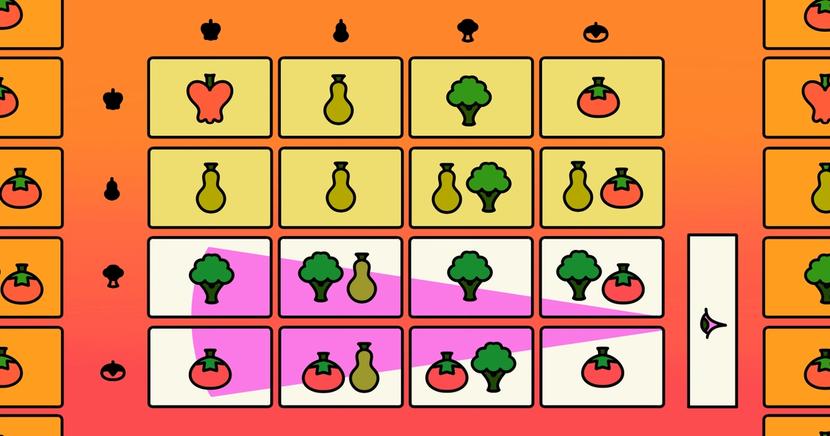

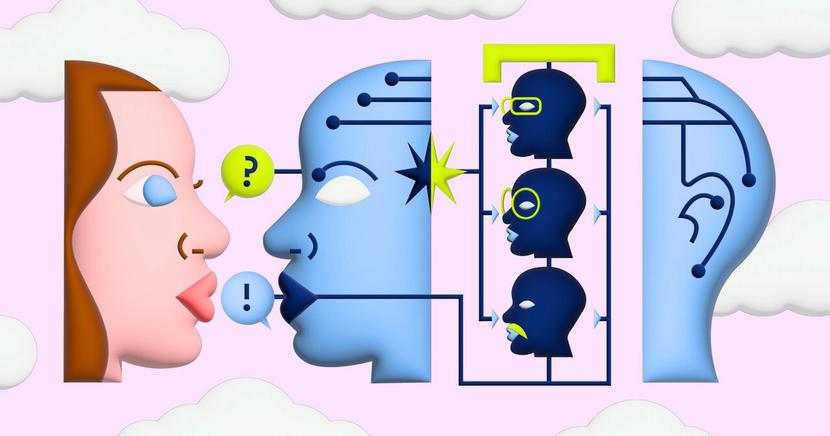

How self-supervised learning revolutionized natural language processing and gen AI

Self-supervised learning is a key advancement that revolutionized natural language processing and generative AI. Here’s how it works and two examples of how it is used to train language models.