[Ed. note: While we take some time to rest up over the holidays and prepare for next year, we are re-publishing our top ten posts for the year. Please enjoy our favorite work this year and we’ll see you in 2026.]

Since October 2010, all Stack Exchange sites have run on physical hardware in a datacenter in New York City (well, New Jersey). These servers have had a warm spot in our history and our hearts. When I first joined the company and worked out of the NYC office, I saw the original server mounted on a wall with a laudatory plaque like a beloved pet. Over the years, we’ve shared glamor shots of our server racks and info about updating them.

For almost our entire 16-year existence, the SRE team has managed all datacenter operations, including the physical servers, cabling, racking, replacing failed disks and everything else in between. This work required someone to physically show up at the datacenter and poke the machines.

We’ve since moved all our sites to the cloud. Our servers are now cattle, not pets. Nobody is going to have to drive to our New Jersey datacenter and replace or reboot hardware. Not after last week.

That’s because on July 2nd, in anticipation of the datacenter’s closure, we unracked all the servers, unplugged all the cables, and gave these once mighty machines their final curtain call. For the last few years, we have been planning to embrace the cloud and wholly move our infrastructure there. We moved Stack Overflow for Teams to Azure in 2023 and proved we could do it. Then we just had to tackle the public sites (Stack Overflow and the Stack Exchange network), which are now hosted on Google Cloud. Early last year, our datacenter vendor in NJ decided to shut down that location, and we needed to be out by July 2025.

Our other datacenter—in Colorado—was decommissioned in June. It was primarily for disaster recovery, which we didn’t need any more. Stack Overflow no longer has any physical datacenters or offices; we are fully in the cloud and remote!

Major kudos to the SRE team, along with many other folks who helped make this a reality. We’ll have a few blog posts soon to talk about migrating the Stack Exchange sites to the cloud, but for now, enjoy the pictures.

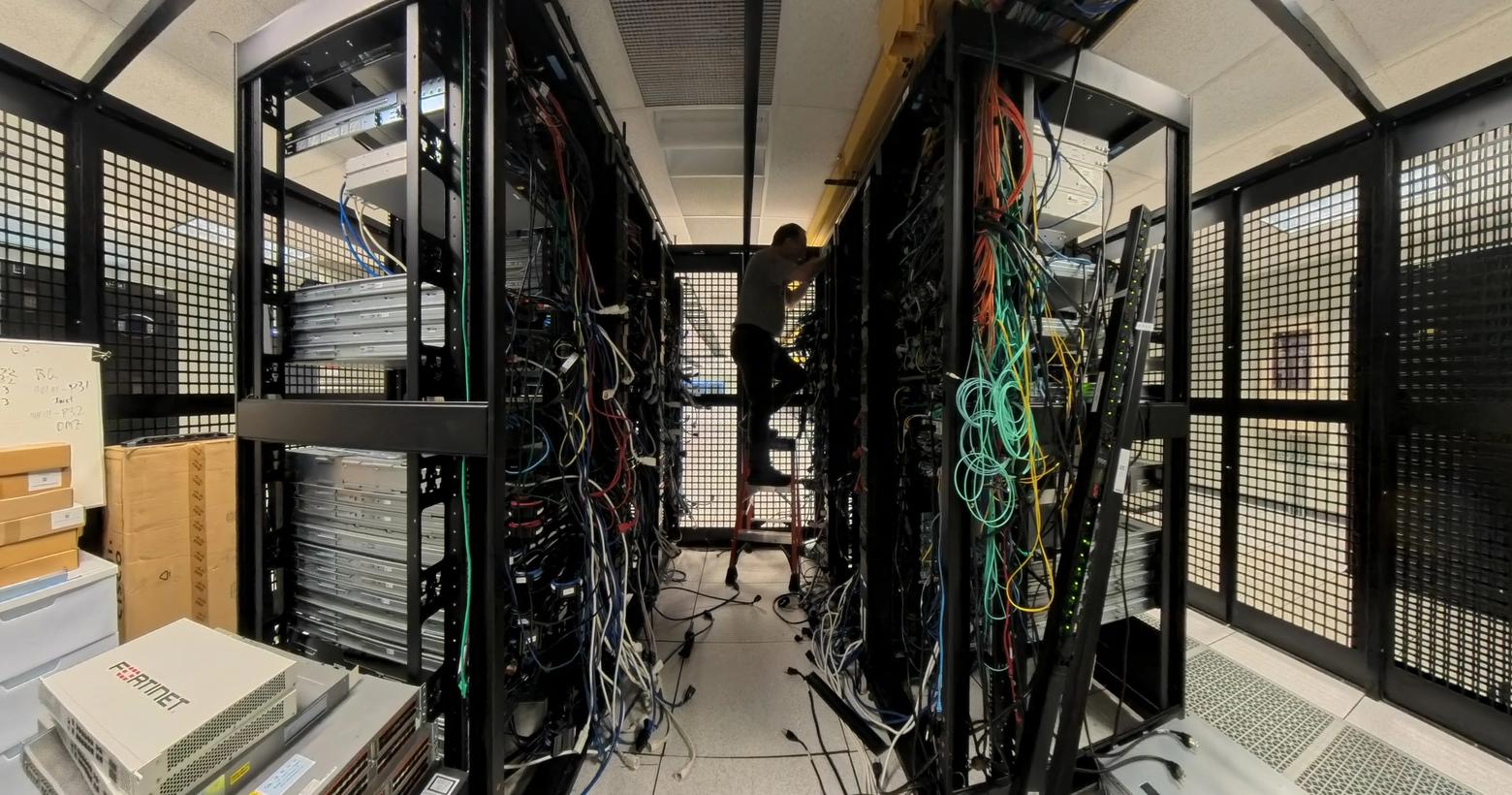

We had about 50 servers altogether in this location. Here’s what the servers looked like at the beginning of the day:

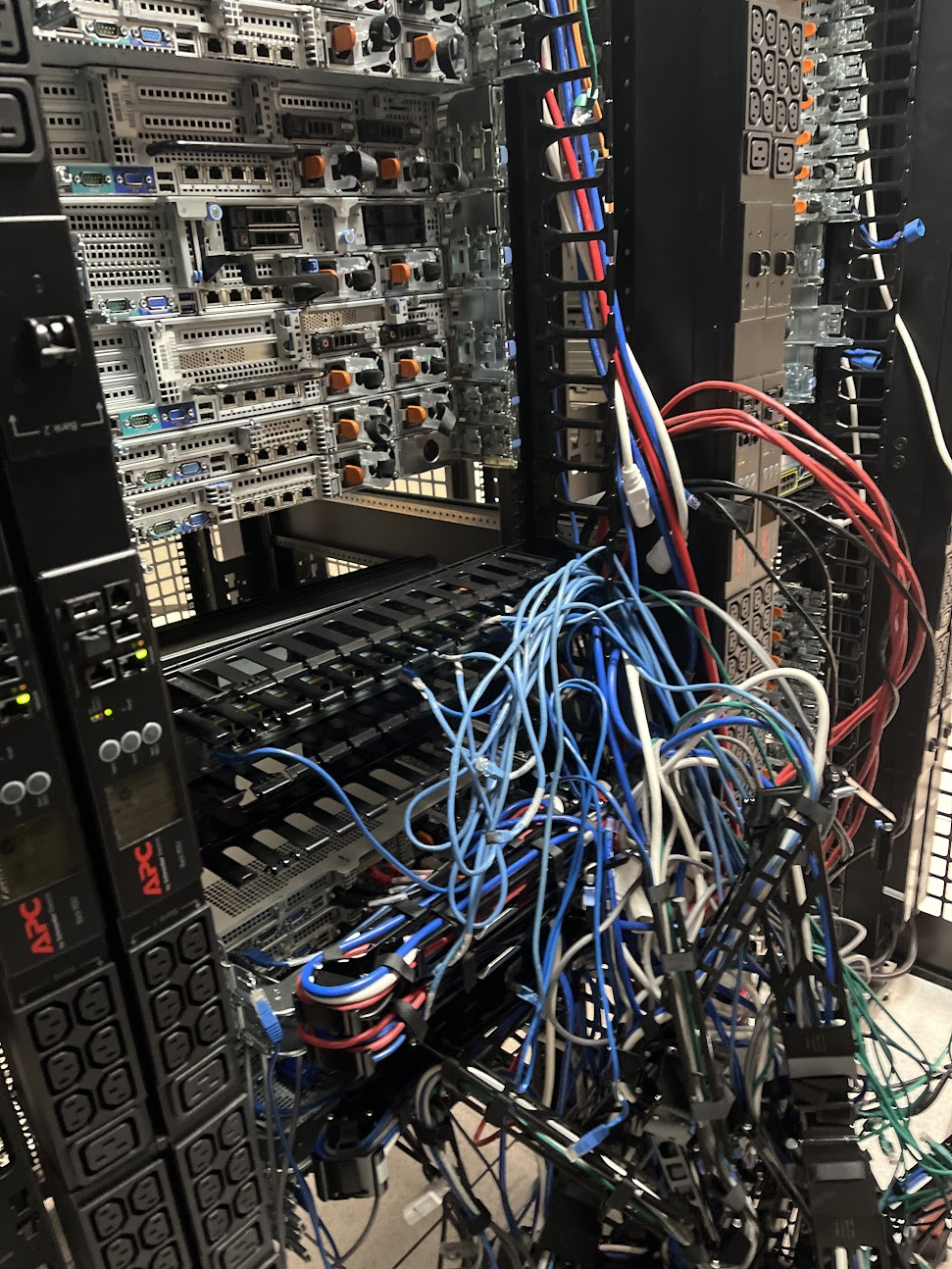

Eight (or more) cables per machine multiplied by over 50 machines is a lot of cables! In the above picture you can see the large mass of cables. Even though they are neatly packaged in a little cage (called an “arm”), one per server, it was a lot of work to de-cable so many hosts.

Why so many cables per machine? Here’s a staged photo that shows each cable individually:

- Blue: 1x 1G ethernet cable for the management network (remote access).

- Black: 1x cable that takes the VGA video and USB (keyboard and mouse) signals to a "KVM switch." From the KVM switch we could connect to the keyboard/video/mouse of any machine in the datacenter. It was expensive but worth it. In an emergency, we could always "be in front of the machine" without leaving our homes.

- Red: 2x 10G ethernet cables to the main network.

- Black: 2x more 10G ethernet cables to the main network (only on machines that needed extra bandwidth, such as our SQL servers).

- White+blue: 2x power cables (each to a different circuit, for redundancy).

The hardware nerds out there should appreciate these. But then came time to disassemble them. Josh Zhang, our Staff Site Reliability Engineer, got a little wistful. “I installed the new web tier servers a few years ago as part of planned upgrades,” he said. “It's bittersweet that I'm the one deracking them also.” It’s the IT version of Old Yeller.

We assume that most datacenter turn-downs involve preserving certain machines to move them to the new datacenter. However, in our case, all of the machines were being disposed of. This gave us the liberty of being able to move fast and break things. If it was in our cage, it was going to the disposal company. For security reasons (and to protect the PII of all our users and customers), everything was being shredded and/or destroyed. Nothing was being kept. As Ellora Praharaj, our Director of Reliability Engineering, said, “No need to be gentle anymore.”

Clearing out a rack has two steps: first we de-cable all the machines, then we un-rack them. Here you see racks in various stages of being de-cabled. Anything salvageable had already been removed, so we didn't have to be neat or careful. After that, the mass of cables was lobbed over to the big pile.

Ever have difficulty disconnecting an RJ45 cable? Well, here was our opportunity to just cut the damn things off instead of figuring out why the little tab wouldn't release the plug.

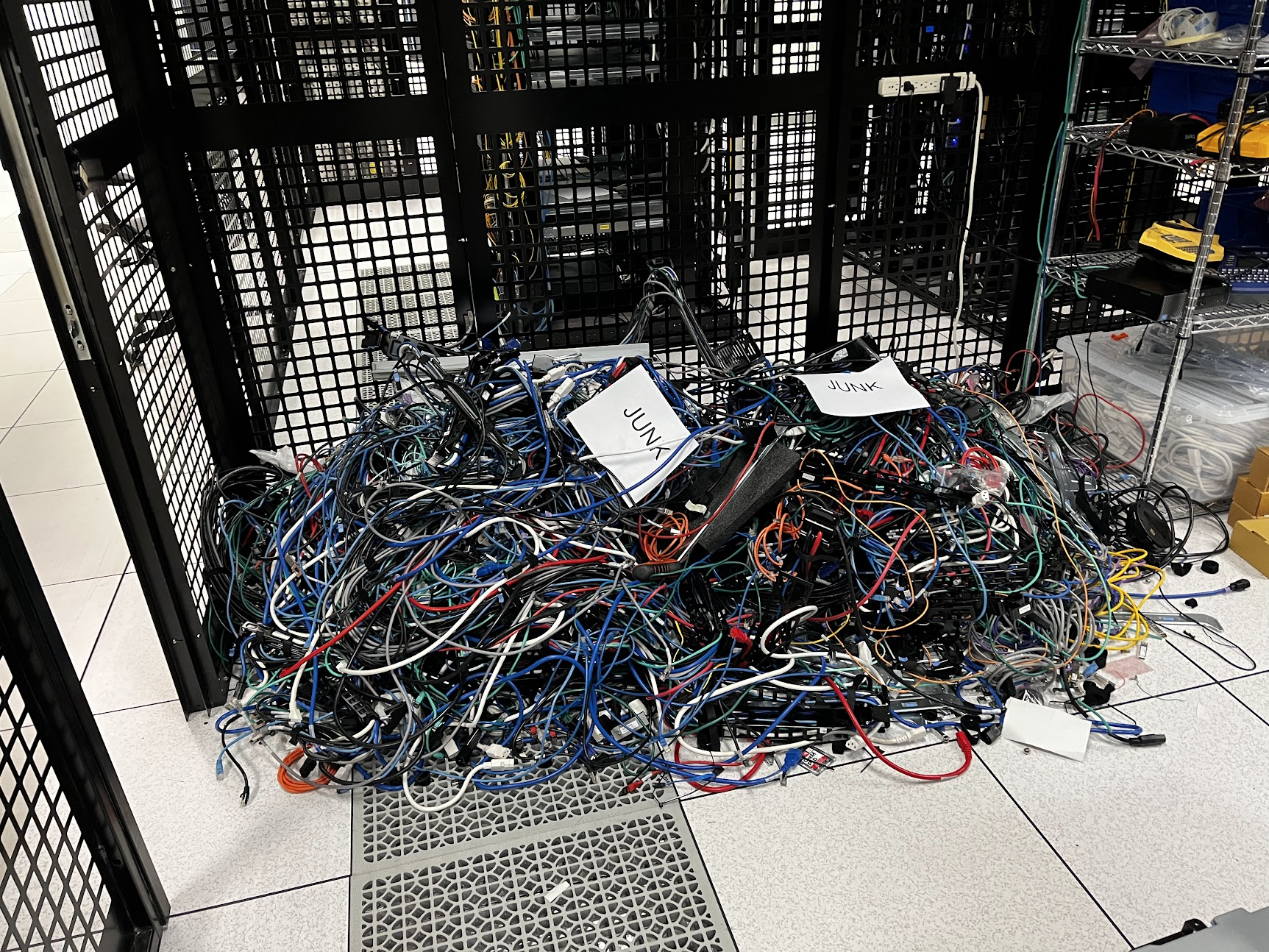

The junk pile. Our de-cabling process involved throwing everything into the corner of the room until we realized we might be blocking our only exit. Then we piled higher, not wider.

All the servers and network devices were put in piles on the floor. Seven piles in total.

Is this the "before" picture from 2015-ish when we built all this or the "after" picture when we decommed it all? We'll let you guess!

Big thanks to Ellora Praharaj, Tom Limoncelli, and Josh Zhang for the pictures and info. And for doing the heavy lifting.