Welcome to this month's installment of Stack Overflow research updates! Since November of last year, my colleague Donna Choi and I have been posting (over on Meta) bite-size updates about the quantitative and qualitative research we use to make decisions at Stack Overflow. As of this month, we are posting these updates here on the blog. 🙌

In March of this year, we launched the Ask Question Wizard, the biggest change to the question-asking experience on Stack Overflow in our ten years of existence. People have been using the wizard for about six months now, so let's explore how many people have used it, what its impact has been, and where we're going from here. We have seen some encouraging improvements in question quality, and we can use what we've learned to improve question asking more broadly.

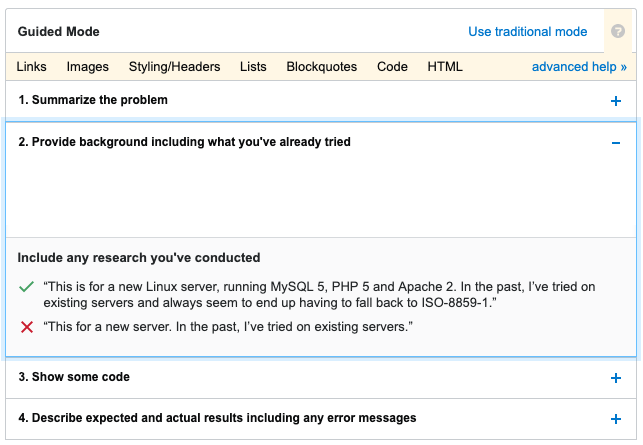

What is this wizard?

The wizard presents an alternate question-asking mode that we call guided mode, offering askers detailed instructions and direction about how to ask a question and what to include. We kept the original question-asking page intact, calling it traditional mode.

Before launching the wizard, we tested a final version of it in an A/B test. We found that question quality, which we define and explain in detail here, improved in an absolute sense by modest amounts for askers with reputation less than 111 (the asker population we included in that test). There was a 5.12% decrease in bad quality questions, and a 1.12% increase in good quality questions.

When the wizard launched more broadly, it was the default option for users with reputation less than 111, but such users could opt out if they wanted. Also, users with reputation above that threshold could opt in.

Who has used the wizard?

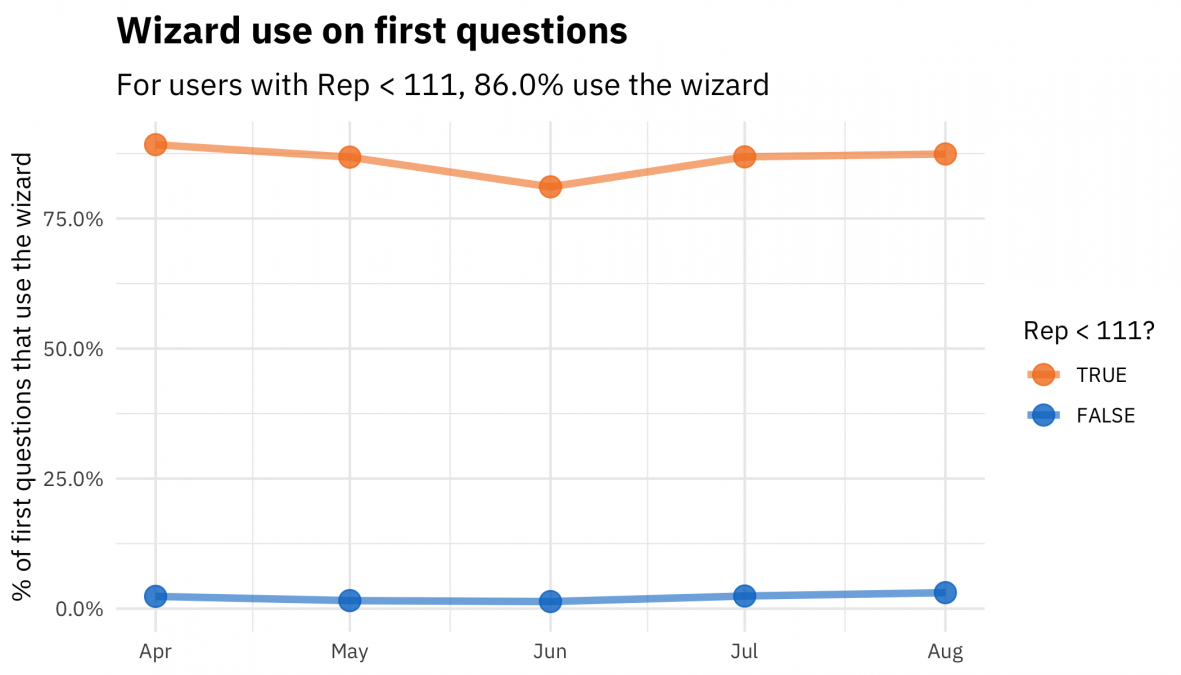

Between the wizard's launch and the beginning of this week, 777,644 questions were asked using guided mode. What level of opting in/out do we see, specifically on people's first questions?

A few percent of higher rep question askers opted in, and about 15% of users with reputation below 111 opted out. In this time period, 99.1% of first questions were asked by users with reputation less than 111, so using the wizard has been the main new question-asking experience over the past several months. Users are largely not opting out.

Confounders and models

It is quite challenging to measure the effect of an intervention like this wizard because users can opt in or out of it. We expect that the very characteristics that lead a user to have a hard time writing a high-quality question may make them more likely to opt in. A difference we see between using the wizard and not using it may be caused by a factor that predicts use of the intervention (the wizard) rather than by the intervention itself. This is an example of confounding, and is exactly why we typically use A/B tests when planning changes for our site. However, we would still like to see what we can learn from the last six months of data, which means we need to use methods appropriate for observational data. My academic background is astronomy, so this is a pretty familiar situation for me! (There are not a lot of randomized controlled trials in space.) I'll focus on a few very important factors in this blog post, but predictors we explored included reputation, account age, user location, and more.
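To make the confounding problem concrete, here is a minimal sketch with invented counts (they are not Stack Overflow data, and the two strata names are hypothetical). In it, the wizard improves the good-question rate within each group of users, yet a naive pooled comparison makes the wizard look harmful, simply because users who struggle are the ones more likely to use it.

```python
# Hypothetical counts, invented purely for illustration (not Stack Overflow data).
# Key: (user stratum, used_wizard) -> (good questions, total questions)
counts = {
    ("struggling", True): (30, 100),
    ("struggling", False): (4, 20),
    ("confident", True): (14, 20),
    ("confident", False): (60, 100),
}


def naive_rates(counts):
    """Pool all strata and compare wizard vs. no wizard directly."""
    pooled = {}
    for (_, used_wizard), (good, total) in counts.items():
        g, t = pooled.get(used_wizard, (0, 0))
        pooled[used_wizard] = (g + good, t + total)
    return {used: g / t for used, (g, t) in pooled.items()}


def stratified_differences(counts):
    """Within each stratum, good-question rate with the wizard minus without."""
    strata = sorted({stratum for stratum, _ in counts})
    diffs = {}
    for stratum in strata:
        good_w, total_w = counts[(stratum, True)]
        good_n, total_n = counts[(stratum, False)]
        diffs[stratum] = good_w / total_w - good_n / total_n
    return diffs


naive = naive_rates(counts)
within = stratified_differences(counts)

print(f"Pooled good rate with wizard:    {naive[True]:.3f}")
print(f"Pooled good rate without wizard: {naive[False]:.3f}")
for stratum, diff in within.items():
    print(f"Within '{stratum}' users, wizard changes the rate by {diff:+.3f}")
```

Comparing within strata is the simplest way to adjust for a confounder. A regression model that includes the confounders as predictors does the same job when there are several of them.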

For this analysis, I am going to share results using Bayesian generalized linear multilevel modeling to understand the impact of the question wizard. Why use a Bayesian approach for this data? The main reasons are that very few users with higher rep ever used the wizard, and we'd like to explore whether the wizard only helps lower rep users, among other questions about when and for whom the wizard is helpful. Bayesian modeling provides a framework well-suited to those kinds of questions.
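One way to see why sparse data matters is a much simpler stand-in for the multilevel model: a conjugate Beta-Binomial update for a single proportion. This sketch is not the model used in the analysis, and the counts and the flat Beta(1, 1) prior are assumptions chosen for illustration. It only shows that a group with very few observations ends up with a much wider posterior, which is the kind of uncertainty a Bayesian model reports directly.

```python
from math import sqrt


def beta_posterior(successes, trials, prior_a=1.0, prior_b=1.0):
    """Posterior mean and standard deviation for a proportion.

    Uses a Beta(prior_a, prior_b) prior with a Binomial likelihood,
    so the posterior is Beta(prior_a + successes, prior_b + failures).
    """
    a = prior_a + successes
    b = prior_b + (trials - successes)
    mean = a / (a + b)
    sd = sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return mean, sd


# Hypothetical counts: many lower rep wizard users, very few higher rep ones.
low_rep_mean, low_rep_sd = beta_posterior(successes=3000, trials=10000)
high_rep_mean, high_rep_sd = beta_posterior(successes=6, trials=20)

print(f"Lower rep:  mean={low_rep_mean:.3f}, sd={low_rep_sd:.4f}")
print(f"Higher rep: mean={high_rep_mean:.3f}, sd={high_rep_sd:.4f}")
```

The two posterior means are similar, but the standard deviation for the small group is far larger, so any claim about that group has to be correspondingly cautious.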

Question quality

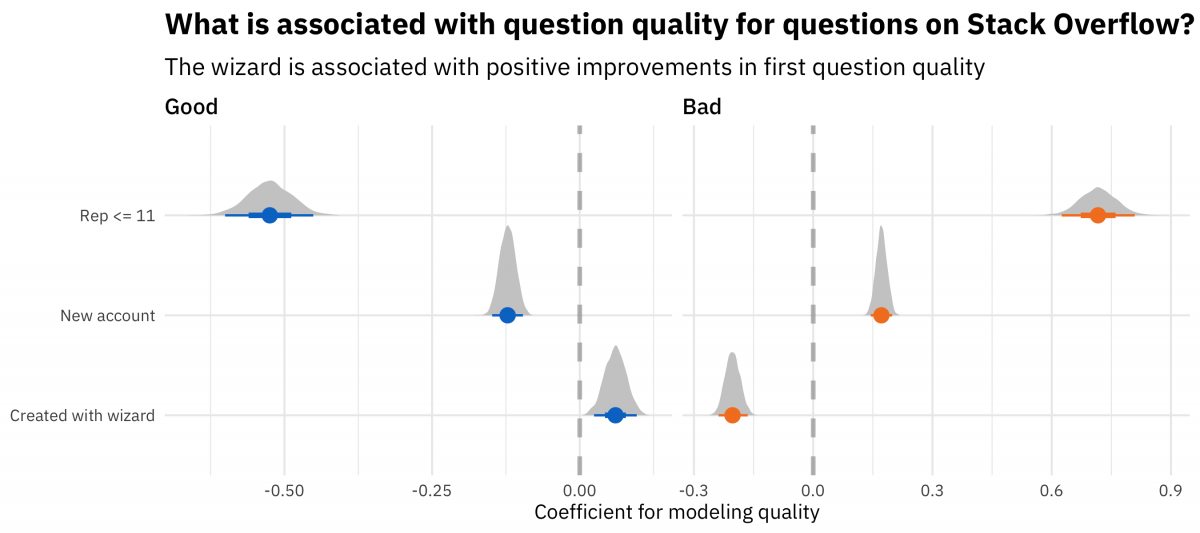

First, let's look at model results for question quality.

This plot shows results for a straightforward classification model, trained using brms and Stan, that predicts whether a question is good or bad. I chose the reputation threshold at 11 (rather than, say, at 111) because the median reputation of a first-time question asker is 8.1. A "new account" is one created within the past day. The sizes of these effects change with the thresholds, but the finding that these predictors have an impact at all is robust to such changes.
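The thresholds described above turn each first question into a few binary predictors. A minimal sketch of that encoding follows; the class, field, and function names are hypothetical, and only the two thresholds (reputation of 11, account age of one day) come from the text.

```python
from dataclasses import dataclass

REP_THRESHOLD = 11      # "rep <= 11" counts as low reputation
NEW_ACCOUNT_DAYS = 1.0  # an account created within the past day is "new"


@dataclass
class FirstQuestion:
    """Hypothetical record for one first question (names are illustrative)."""
    reputation: int
    account_age_days: float
    used_wizard: bool


def encode(question):
    """Convert a question record into 0/1 indicator predictors."""
    return {
        "low_rep": int(question.reputation <= REP_THRESHOLD),
        "new_account": int(question.account_age_days < NEW_ACCOUNT_DAYS),
        "used_wizard": int(question.used_wizard),
    }


print(encode(FirstQuestion(reputation=1, account_age_days=0.2, used_wizard=True)))
print(encode(FirstQuestion(reputation=500, account_age_days=900.0, used_wizard=False)))
```

Moving either constant changes which questions fall on each side of a threshold, which is why the effect sizes shift with the thresholds.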

How can you interpret this plot? Reputation above the threshold, older accounts, and using the question wizard are all associated with improvements in question quality. Specifically, because of the modeling approach used here, we see that, for example, people with accounts older than one day write better first questions, controlling for other factors like reputation and using the wizard.

[table id=12 /]

Let's walk through how to interpret these risk ratios, which are relative changes.

- Someone with rep <= 11 is about 0.6x as likely (~40% less likely) to ask a good quality first question, and about 2x as likely (100% more likely) to ask a bad quality first question, as someone with higher reputation, controlling for other factors.

- A first-time question asker who has a new account (created within the past day) is about 0.9x as likely (10% less likely) to ask a good quality first question and about 1.2x as likely (20% more likely) to ask a bad quality first question as someone with an older account.
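The arithmetic behind these statements is simple enough to sketch. A risk ratio is the probability of an outcome in one group divided by the probability in a reference group, and subtracting 1 gives the relative change. The function names and the example probabilities below are illustrative assumptions; only the ratios 0.6, 2, 0.9, and 1.2 come from the list above.

```python
def risk_ratio(risk_in_group, risk_in_reference):
    """Ratio of two probabilities, e.g. P(good | low rep) / P(good | higher rep)."""
    return risk_in_group / risk_in_reference


def percent_change(ratio):
    """Relative change implied by a risk ratio, in percent."""
    return (ratio - 1.0) * 100.0


# Risk ratios quoted in the list above.
for ratio in (0.6, 2.0, 0.9, 1.2):
    print(f"{ratio}x as likely -> {percent_change(ratio):+.0f}%")

# Hypothetical probabilities that would produce a risk ratio of about 0.6.
example = risk_ratio(risk_in_group=0.12, risk_in_reference=0.20)
print(f"Example risk ratio: {example:.2f}")
```

Because these are relative changes, the same ratio can correspond to very different absolute differences depending on the baseline rate.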

Controlling for these confounders, when a first-time question asker uses the wizard, their question is about 6% more likely to be good quality and 20% less likely to be bad quality. We consider this a real success of the wizard, because when people ask better quality questions, they are more likely to get answers and have an overall more positive experience. We also know people who ask good quality questions are more likely to ask a question again. Confirming what we found during the A/B test, the wizard has a bigger impact on reducing bad quality questions than on increasing good quality questions.

I explored whether we see evidence that the wizard only helps users with lower reputation or first-time question askers, and we don't see evidence for that. We don't have as strong evidence that the wizard can help users with higher reputation (we don't have much data on this), but we can put some limits on how large the difference for higher and lower rep question askers would need to be for us to see a difference with the data we have.

Comments galore

We know from multiple sources of feedback and research that the comment section of Stack Overflow can be a pain point in using our site. Putting aside the issue of unfriendly or otherwise problematic comments, comments are intended to be a venue for asking clarifying questions that improve the quality of a question. This means that from our perspective, in general and on average, the fewer comments, the better.

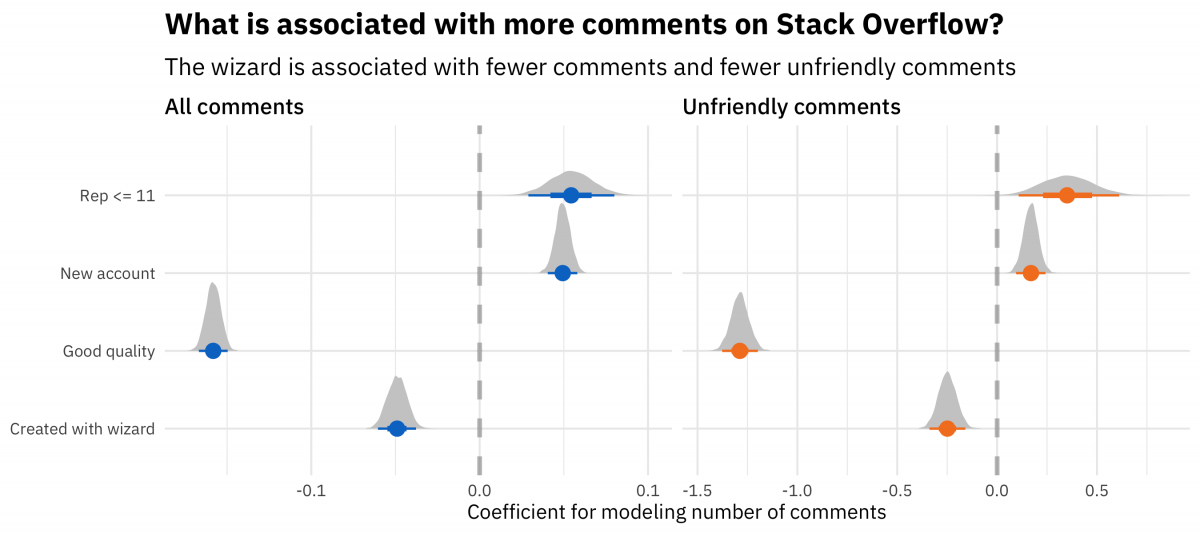

How has the question wizard affected the number of comments on first-time questions, and the number of unfriendly comments? Instead of a classification model, this analysis uses a Poisson regression model.

People use several different categories to flag comments that are inappropriate for our site, such as rude/abusive and unfriendly/unwelcoming. Recently, my team, especially my colleague Jason Punyon, has used the human-generated flags to build a deep learning model to automatically detect unfriendly comments on Stack Overflow. We'll share more soon about this model, how it works, and how we're using it on our site to make Stack Overflow a safer and more inclusive community. For this analysis, I used the unfriendly comment model (not human flags, because we know unfriendly comments are dramatically underflagged) to understand what impact the wizard has on unfriendly comments.

[table id=11 /]

What do these coefficients mean? They are multiplicative factors, because this was a Poisson regression.

- Having rep <= 11 is associated with receiving 6% more comments and about 40% more unfriendly comments.

- Having a new account (less than one day) is associated with receiving 5% more comments on a first question and about 20% more unfriendly comments.

- Writing a good quality first question is associated with about a 15% reduction in comments on that question and over 70% reduction in unfriendly comments on that question.

Using the wizard is associated with 5% fewer comments and over 20% fewer unfriendly comments. These factors and their impact come from models controlling for the other factors, so think about the wizard reducing unfriendly comments by over 20%, controlling for other factors, including reputation and quality of the question. We consider this another huge success of the wizard.
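Here is a small sketch of why Poisson regression coefficients read as multiplicative factors. With a log link, the expected count is the exponential of a sum of terms, so each term contributes a factor that multiplies the others. The factors below are the rounded values for total comments quoted above; the baseline of 2 comments and all names in the code are assumptions for illustration.

```python
from math import exp, log

# Rounded multiplicative factors for total comments, taken from the text above.
factors = {
    "low_rep": 1.06,        # 6% more comments
    "new_account": 1.05,    # 5% more comments
    "good_question": 0.85,  # about 15% fewer comments
    "used_wizard": 0.95,    # 5% fewer comments
}

# On the model's log scale, each factor corresponds to an additive coefficient.
coefficients = {name: log(factor) for name, factor in factors.items()}


def expected_comments(baseline, active_predictors):
    """Expected count under a log link: exp(log(baseline) + sum of coefficients)."""
    linear_predictor = log(baseline) + sum(coefficients[p] for p in active_predictors)
    return exp(linear_predictor)


baseline = 2.0  # hypothetical expected comments with no predictors active
wizard_only = expected_comments(baseline, ["used_wizard"])
combined = expected_comments(baseline, ["low_rep", "new_account", "used_wizard"])

print(f"Wizard only: {wizard_only:.2f} expected comments")
print(f"Low rep, new account, wizard: {combined:.2f} expected comments")
```

Adding coefficients on the log scale is the same as multiplying factors on the count scale, which is why each effect can be stated as a percentage change that holds regardless of the other predictors.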

Next steps

We are really happy to see that the question wizard has been having a positive influence on Stack Overflow, both in terms of question quality and interactions via comments. 🎉 This was the first time we had shipped changes of this magnitude for the question-asking workflow in a decade, and it is great to be able to celebrate its impact.

The question wizard has proven successful enough that we want to iterate on its design and see how we can better scaffold people with coding problems to either write a successful question or realize that they don't need to ask a question at all because the answer is already available. We also need to revisit some of the technical decisions made when launching a two-mode question workflow, as some aspects of the current system are brittle and inappropriate for a growing system, especially when we consider the network beyond Stack Overflow.

What can you expect in the near future if you ask a question soon? We will be using A/B testing, so not every user will experience changes at the same time, but some of the changes we are working on so far include:

- More upfront guidance for first-time question-askers

- Setting expectations about what happens after asking a question

- Improved "how-to-ask" guidance while drafting a question

- Consolidating the many dozens of validation warnings into a single review interface

For both technical and design reasons, we plan for the question workflow to change for all users, not only those with lower reputation. As we move forward, we can use both data and feedback from users to assess how successful changes are. How do we know when we are successful? We use both qualitative and quantitative research in making decisions about our site. This blog post is an example of the kind of quantitative analysis that we use, involving large samples of our users broadly. For more details on what's coming to Stack Overflow soon, check out my colleague Meg's blog post from earlier this week!