Is that allowed? Authentication and authorization in Model Context Protocol

Learn how to protect MCP servers from unauthorized access and how authentication of MCP clients to MCP servers works.

Community-generated articles submitted for your reading pleasure.

From sprawling PDFs to a fast, factual conversational assistant.

Self-supervised learning is a key advancement that revolutionized natural language processing and generative AI. Here’s how it works and two examples of how it is used to train language models.

As with cars, there are few system administration tasks that involve little to no automation.

Data has always been key to LLM success, but it's becoming key to inference-time performance as well.

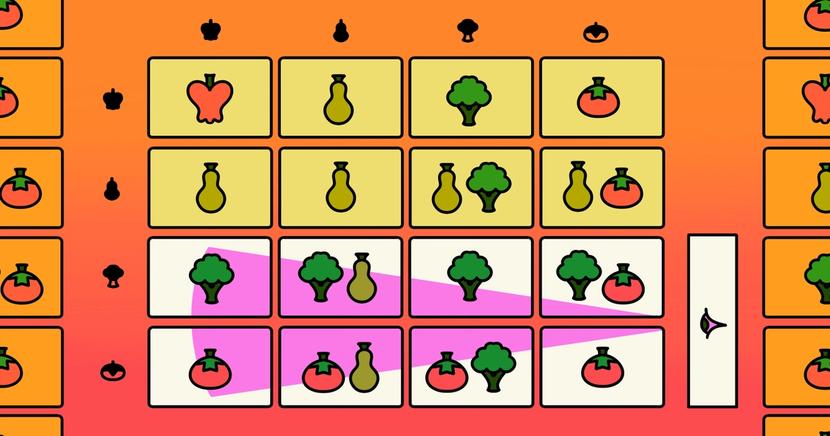

Want to train a specialized LLM on your own data? The easiest way to do this is with low-rank adaptation (LoRA), but many variants of LoRA exist.

Is anyone designing software where failures don't have consequences?

It’s easy to generate code, but not so easy to generate good code.

A developer’s journal is a place to define the problem you’re solving and record what you tried and what worked.

Would updating a tool few think about make a diff(erence)?

Wondering how to go about creating an LLM that understands your custom data? Start here.

Masked self-attention is the key building block that allows LLMs to learn rich relationships and patterns between the words of a sentence. Let’s build it together from scratch.

It’s tempting to push projects out the door to woo and impress colleagues and supervisors, but the stark truth is that even the smallest projects should have proper review periods.

In today's data-driven world, Apache Kafka has emerged as a cornerstone of modern data streaming, particularly with the rise of AI and the immense volumes of data it generates.

The decoder-only transformer architecture is one of the most fundamental ideas in AI research.

Retrieval-augmented generation (RAG) is one of the best (and easiest) ways to specialize an LLM over your own data, but successfully applying RAG in practice involves more than just stitching together pretrained models.

Settling down in a new city (or codebase) is a marathon, not a sprint.

More and more of our lives are becoming data-driven. Is that a good thing?

Here’s a simple, three-part framework that explains generative language models.

The key strategies for building a headache-free data platform.

This new LLM technique has started improving the results of models without additional training.

Why replacing programmers with AI won’t be so easy.

Everyone who says "tech debt" assumes they know what we’re all talking about, but their individual definitions differ quite a bit.

With all the advancements in software development, apps could be much better. Why aren't they?